Intro

(intentionally less technical)

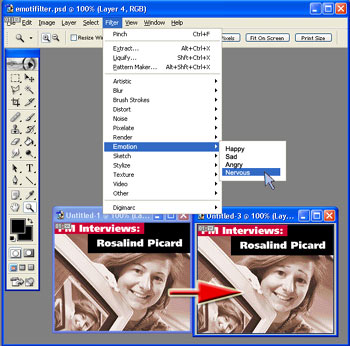

I noticed a while ago that if you distort a picture of a person's face just the right way it

seems to change his emotional expression.

I saw

this phenomenon when using a distort filter in Photoshop. I started to wonder if

I could automatically change the expression on someone's face. There is lots of work on facial expression generation, though most of

it relies heavily on human-in-the-loop scenarios and complex models such as the 3D

mesh models used in "Expression Cloning" [1]. I saw

this phenomenon when using a distort filter in Photoshop. I started to wonder if

I could automatically change the expression on someone's face. There is lots of work on facial expression generation, though most of

it relies heavily on human-in-the-loop scenarios and complex models such as the 3D

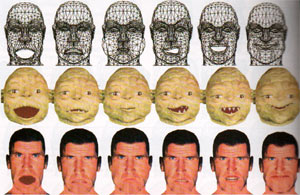

mesh models used in "Expression Cloning" [1]. The problem with the complex models is that they won't run in real-time, and

there are tons of problems with human-in-the-loop. The images generated (right)

using expression cloning are really neat, but they can't be generated

completely automatically. There was one really compelling paper [2] that started with a

human face, and then used geometric image warping to create new facial

The problem with the complex models is that they won't run in real-time, and

there are tons of problems with human-in-the-loop. The images generated (right)

using expression cloning are really neat, but they can't be generated

completely automatically. There was one really compelling paper [2] that started with a

human face, and then used geometric image warping to create new facial  expressions.

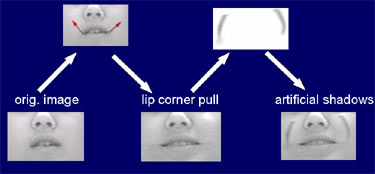

You can see the original image on the left, and the warped image is in the

middle. This is called expression mapping. To generate the image in the middle,

several training images of this girl's face are hand marked with a few dozen

control points. The paper also used expression ratio images (ERI). The ERIs

control for different lighting conditions to add shadows and wrinkles to the

facial expression, like in the right-most of the

three images. I really liked the results in this paper, so I set off to try to

create similar results, but using only a single image that was automatically expressions.

You can see the original image on the left, and the warped image is in the

middle. This is called expression mapping. To generate the image in the middle,

several training images of this girl's face are hand marked with a few dozen

control points. The paper also used expression ratio images (ERI). The ERIs

control for different lighting conditions to add shadows and wrinkles to the

facial expression, like in the right-most of the

three images. I really liked the results in this paper, so I set off to try to

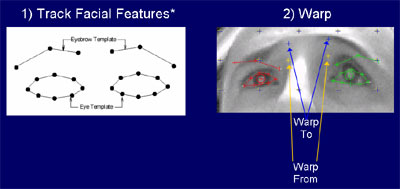

create similar results, but using only a single image that was automatically marked. I decided to use Ashish's fully automatic upper facial feature

tracking [3] to mark my images. Ashish's setup tracks the eyebrows and eyes of a

person in real-time video, so I thought I'd try to get my face warping to work

in real-time too. The biggest drawback to this system is that the setup

requires an infrared camera, which means that it can't be used on old video

taken with a regular camera. Otherwise, it works really well, and it

tracks the eyes and eyebrows of people who sit in front of the camera most of

the time. I used the tracking

marked. I decided to use Ashish's fully automatic upper facial feature

tracking [3] to mark my images. Ashish's setup tracks the eyebrows and eyes of a

person in real-time video, so I thought I'd try to get my face warping to work

in real-time too. The biggest drawback to this system is that the setup

requires an infrared camera, which means that it can't be used on old video

taken with a regular camera. Otherwise, it works really well, and it

tracks the eyes and eyebrows of people who sit in front of the camera most of

the time. I used the tracking

information

to try to warp (like the distorting Photoshop filters) the face into a new

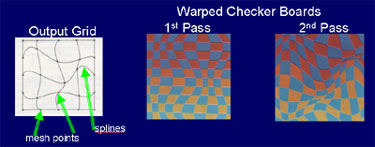

expression. I used a 2-pass mesh warping algorithm developed by Wolberg [4]. You

give it a bunch of input coordinates and corresponding output coordinates, and

it warps an image according to a mesh that it generates with the images. As I

tried to guage which features to warp and how far to warp them, I made

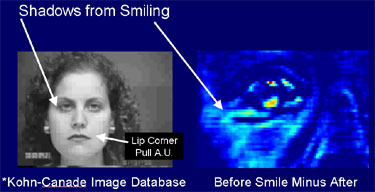

measurements on the Cohn-Kanade information

to try to warp (like the distorting Photoshop filters) the face into a new

expression. I used a 2-pass mesh warping algorithm developed by Wolberg [4]. You

give it a bunch of input coordinates and corresponding output coordinates, and

it warps an image according to a mesh that it generates with the images. As I

tried to guage which features to warp and how far to warp them, I made

measurements on the Cohn-Kanade database [5]. I measured how far people raised the corners of their mouths when

smiling, as well as how high people raised their eyebrows when displaying

certain emotions. Then I normalized the distances - I divided through by the

distance between the pupils since that's roughly standard across many different

people. These measurements helped me gauge the warping distances for my project.

I also tried to construct simple wrinkle templates by subtracting the image of a

smiling

database [5]. I measured how far people raised the corners of their mouths when

smiling, as well as how high people raised their eyebrows when displaying

certain emotions. Then I normalized the distances - I divided through by the

distance between the pupils since that's roughly standard across many different

people. These measurements helped me gauge the warping distances for my project.

I also tried to construct simple wrinkle templates by subtracting the image of a

smiling  person

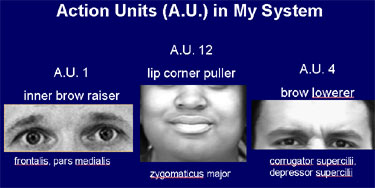

from a neutral image of the same person. I decided to try to warp three action

units: the inner brow raiser, the lip corner puller, and the brow lowerer. From

here on the images I show you are images generated by my E-DJ system. person

from a neutral image of the same person. I decided to try to warp three action

units: the inner brow raiser, the lip corner puller, and the brow lowerer. From

here on the images I show you are images generated by my E-DJ system.

Results & Analysis

(more technical)

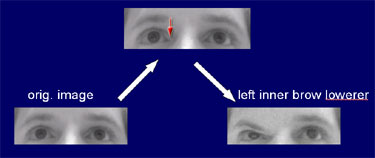

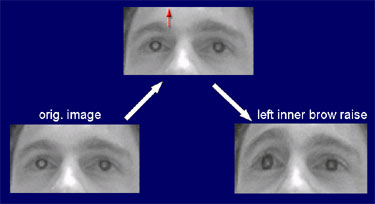

As you can see, the brow raiser and brow lowerer work really well. The lip corner

pull tended to look less realistic, but still had a good effect. Remember the

wrinkles/shadows added using the ERIs? I tried seeing what would happen if I

added in a cheek shadow from a different person when someone smiled on my

system, and it didn't look very realistic. It would probably be helpful to

segment lots of shadows from lots of people and use a matching scheme to see

which template fits which person best.
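The wrinkle-template idea from the intro (a per-pixel difference between a smiling and a neutral image of the same person) can be sketched roughly as follows. This is a minimal illustration, not the E-DJ code: the function names `make_template` and `apply_template`, the 8-bit grayscale buffers, the smiling-minus-neutral sign convention, and the percentage strength are all assumptions, and the two source images are assumed to be already aligned pixel for pixel.

```c
#include <assert.h>
#include <stddef.h>

/* Hypothetical helper: build a signed difference template,
 * tmpl[i] = smiling[i] - neutral[i], from two aligned 8-bit grayscale
 * images of n pixels each. Negative values darken (shadows, wrinkles). */
void make_template(const unsigned char *neutral, const unsigned char *smiling,
                   short *tmpl, size_t n)
{
    assert(neutral && smiling && tmpl);
    for (size_t i = 0; i < n; i++)
        tmpl[i] = (short)((int)smiling[i] - (int)neutral[i]);
}

/* Hypothetical helper: add percent (0..100) of the template to a target
 * image in place, clamping each result to the 0..255 range. */
void apply_template(unsigned char *target, const short *tmpl, size_t n,
                    int percent)
{
    assert(target && tmpl);
    assert(percent >= 0 && percent <= 100);
    for (size_t i = 0; i < n; i++) {
        int v = (int)target[i] + (tmpl[i] * percent) / 100;
        if (v < 0) v = 0;
        if (v > 255) v = 255;
        target[i] = (unsigned char)v;
    }
}
```

Because the template is tied to the face it came from, applying it to a different person would presumably need some alignment or matching step first.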

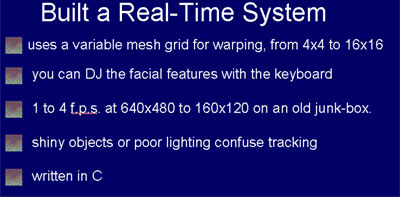

I created a real-time system in C, so that I could control the left and right

inner eyebrow, as well as the corners of the mouth using the keyboard. To warp,

I used C code based on Wolberg's original 2-pass mesh warping algorithm. The

code is distributed with xmorph, which uses it as the major substep of image

morphing. The warping code is open source. I used Ashish Kapoor's face tracking

code [3] to find facial features. The main challenges in getting the system to where

it is were the learning curves related to true C programming in Linux. I had

used C a long time ago under Windows, but the environment was visual. One of my

biggest hang-ups, for example, was a linking error in a Makefile.

The tracking didn't always

work, but when it did the warping always produced a fun effect. The effect

wasn't, however, always realistic looking. I used a mesh grid for warping. I

varied the number of mesh points from 4 by 4 to 16 by 16, and I found that

numbers near 8 by 8 worked pretty well. A high number of mesh points tended to

give very accurate control over the warping, but didn't let you warp very far

before it looked bad due to mesh splines crossing each other. A low number of

mesh points gave you more freedom to warp the feature far, but not very

accurately, as unintended parts of the image tended to

warp along with the intended feature. I set the system up so you could control

the warping with the keyboard to allow real-time exploration of emotional

expressions. While I didn't run any scientific experiments with the system, I

did let a dozen or so people try the system while I observed. When the tracking

worked, everyone found it fun and tended to laugh or generally be amused at the funny faces E-DJ was

making. Some people played with it for a very long time (just over 5 minutes). But when the tracking failed consistently, people were frustrated. I

think the reason the tracking failed when it did was usually because there was

something reflective in the background. It helped a lot when I blocked out the

background with a black poster board. That way, the background wouldn't reflect

infrared light, which confuses the pupil detector. The detector was also far

more accurate in diffuse light.

The algorithm used to

warp the facial features was pretty simple. The mesh point closest to the facial

feature to be warped was chosen, and it was assigned the coordinate of the

facial feature. Then the corresponding output mesh point was

assigned the same coordinate with some offset. The maximum offset was

empirically determined by measuring a few images in the Cohn-Kanade database.

The percentage of the maximum offset to be used was determined by the user by

pushing the up and down arrow keys on the keyboard. The eyebrows' maximum

offsets were typically about 40 pixels (assuming a 640x480 image), and the

mouth corner maximum offsets were typically between 50 and 60 pixels.
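The mesh-point assignment described above can be sketched as follows. This is a minimal sketch under assumptions, not the actual E-DJ source: the `Point` type and the functions `nearest_mesh_point` and `displace_feature` are hypothetical names, the mesh is stored as a flat array of points, and the percentage is taken to run from 0 to 100. The resulting source and destination meshes would then be handed to the mesh-warping routine, which is not shown.

```c
#include <assert.h>
#include <stddef.h>

typedef struct { float x, y; } Point;   /* hypothetical point type */

/* Return the index of the mesh point closest to the tracked feature. */
size_t nearest_mesh_point(const Point *mesh, size_t count, Point feature)
{
    assert(mesh && count > 0);
    size_t best = 0;
    float best_d2 = -1.0f;
    for (size_t i = 0; i < count; i++) {
        float dx = mesh[i].x - feature.x;
        float dy = mesh[i].y - feature.y;
        float d2 = dx * dx + dy * dy;   /* squared distance is enough */
        if (best_d2 < 0.0f || d2 < best_d2) {
            best_d2 = d2;
            best = i;
        }
    }
    return best;
}

/* Snap the nearest source mesh point onto the feature, then place the
 * matching destination point at the same coordinate plus a fraction of
 * the maximum offset. Image y grows downward, so a raised brow uses a
 * negative y offset. Returns the index of the mesh point that moved. */
size_t displace_feature(Point *src, Point *dst, size_t count,
                        Point feature, Point max_offset, int percent)
{
    assert(src && dst);
    assert(percent >= 0 && percent <= 100);
    size_t k = nearest_mesh_point(src, count, feature);
    src[k] = feature;
    dst[k].x = feature.x + max_offset.x * (float)percent / 100.0f;
    dst[k].y = feature.y + max_offset.y * (float)percent / 100.0f;
    return k;
}
```

With the figures quoted above, an eyebrow at 50% with `max_offset = {0, -40}` on a 640x480 image would move its destination mesh point 20 pixels up.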

The system ran at around 4 frames per second at the standard 160 x 120 image size that I

used. I wrote some simple code to downsample and upsample the images without any

interpolation for maximal speed. I think the computer is running a 1.4 GHz

processor, though I'll have to check on that later today. A new machine would

probably double the frame rate, creating a smoother video experience.
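Resampling without interpolation, as described above, could look roughly like this. It is a sketch, not the original code: the function names `downsample` and `upsample`, the single-channel 8-bit layout, and the integer scale factor are assumptions. A factor of 4, for instance, would take a 640x480 frame down to 160x120.

```c
#include <assert.h>
#include <stddef.h>

/* Shrink a w x h 8-bit grayscale image by an integer factor by keeping
 * every factor-th pixel in each direction (no averaging). dst must hold
 * (w / factor) * (h / factor) bytes. */
void downsample(const unsigned char *src, int w, int h, int factor,
                unsigned char *dst)
{
    assert(src && dst && factor > 0 && w > 0 && h > 0);
    int dw = w / factor, dh = h / factor;
    for (int y = 0; y < dh; y++)
        for (int x = 0; x < dw; x++)
            dst[(size_t)y * dw + x] = src[(size_t)(y * factor) * w + x * factor];
}

/* Enlarge a w x h image by an integer factor by repeating each pixel
 * factor times in each direction. dst must hold
 * (w * factor) * (h * factor) bytes. */
void upsample(const unsigned char *src, int w, int h, int factor,
              unsigned char *dst)
{
    assert(src && dst && factor > 0 && w > 0 && h > 0);
    int dw = w * factor, dh = h * factor;
    for (int y = 0; y < dh; y++)
        for (int x = 0; x < dw; x++)
            dst[(size_t)y * dw + x] = src[(size_t)(y / factor) * w + x / factor];
}
```

Skipping and repeating pixels avoids any per-pixel arithmetic, which fits the stated goal of maximal speed, at the cost of blockier output than an interpolating resampler would give.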

I think there are two major challenges for the future of this system. The

first is to create a more complex system for mapping mesh points, so that if a

feature is being warped, more than just one mesh point is affected. While the

splines do some of this work for you, it's not enough.

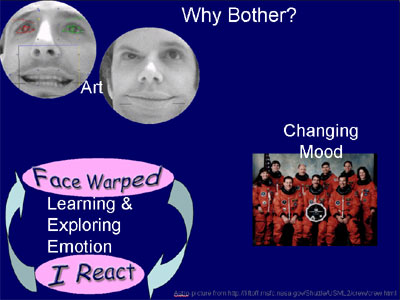

I like the project for its artistic appeal alone. For example, I can imagine

performing by pointing cameras at people and then E-DJ'ing their faces in funny ways while

projecting the output onto the wall. E-DJ also stands up fine just as a simple

toy with no explicit purpose.

But, perhaps more importantly, I think we can learn a lot from using and playing

with E-DJ. One fun idea is that

when we see our faces being warped it evokes the facial expression that we see

on the monitor. If that happens, then E-DJ will take the evoked response a

little bit further than it really is,

creating the beginning of a positive feedback loop by leading the facial

feature.

A more concrete way to use E-DJ for learning might be to use it in conjunction

with some facial expression detectors to purposely exaggerate a detected facial

expression. This could be used to train people who have a hard time

distinguishing emotional expressions, such as people with autism. It's possible too that

people will learn about their emotions just by exploring their facial

expressions without explicit goals.

I can also imagine E-DJ being used to subtly change the look in a crowd of

people. If you changed each facial expression just a little bit, the overall

look of a picture might change noticeably, while the individual expressions may not

look noticeably different. This would be a good use of E-DJ since smaller

changes look more realistic.

The same principle could be applied to a video. A video with slightly different

facial expressions might be really great for affective research experiments.

Imagine if you could condition a person to be in a good or bad mood by showing

them exactly the same video with only slightly different facial expressions. The

similarities between the videos would help ensure that no biases were introduced

haphazardly by showing two different videos.

What's Next?

I can imagine a couple of different future paths that might bear fruit. The first

is to continue the project by adding control over more action units. Then the

action units could be coordinated to express complex emotional "words" like

surprised. The intensity of the emotional words could still be controlled by the

user, or emotional stories could be preprogrammed with a timeline editor not

unlike a simple music editor. To get all this to work properly, though, a better

mesh point assignment algorithm will need to be written. In order to make the

expressions convincing, some kind of wrinkle/shadow system will need to be

implemented. However, when a feature is warped it tends to "bunch up" wrinkles

or shadows that are already in existence giving the illusion of increased

wrinkling. There is a physical analogy to image warping that says to think of

the image as a rubber sheet that can be pulled and stretched. The face is

particularly well suited to this aspect of warping, because the face is quite

pliable and not too far from being like a rubber sheet.

Another direction that I would like to take this system would be to switch the

facial feature tracking software to one that works on regular video

(non-infrared) and images. The image above was marked automatically by face

tracking software licensed to Dr. Breazeal's group, and is available for use

throughout the Media Lab. This would allow me to create plug-ins for existing

image and video software, which is really sharing the technology since it's

giving it away for free in a form that people can use. It is enticing to imagine

my favorite (or least favorite) politician giving a supposedly sad speech with a

"joker smile" on her face.

Wrap Up

Even with just the 3 action units demonstrated in this project, it seems

promising that blind expression mapping can be done realistically in real-time.

The question is how to add more action units and how to gain better control over

the mesh points. The feature tracking is a separate module that can be improved

and swapped without too much disruption.

Most Important Resources

[1] J. Y. Noh and U. Neumann. Expression cloning. In Proc. SIGGRAPH'01, pages 277-288, 2001.

[2] Z. Liu, Y. Shan, and Z. Zhang. Expressive expression mapping with ratio images. In Computer Graphics, Annual Conference Series, pages 271-276. SIGGRAPH, August 2001.

[3] A. Kapoor and R. W. Picard. Real-time, fully automatic upper facial feature tracking. In Proceedings of the 5th International Conference on Automatic Face and Gesture Recognition, Washington D.C., 2002.

[4] G. Wolberg. Digital Image Warping. IEEE Computer Society Press, Los Alamitos, CA, 1990.

[5] T. Kanade, J. F. Cohn, and Y. Tian. Comprehensive database for facial expression analysis. In Proceedings of the Fourth IEEE International Conference on Automatic Face and Gesture Recognition (FG'00), Grenoble, France, March 2000.
