Emotion Bottles

Alea Teeters and Akshay Mohan

The Problem:

To create a system that takes in user input and creates an output that corresponds to the user’s choice of affect.

To build on Music Bottles [Ishii, 2004] by making a corresponding affective version.

Motivation:

Humbot [Alea Teeters and Akshay Mohan]: A humming robot that uses individual feedback to generate its own affective vocal output. The present system will help us understand the building blocks needed to develop such a system.

Music Bottles [Ishii, 2004]: Our emotion bottles are a metaphor for how emotions bottle up inside us; their effect is not felt until the individual chooses to release them. The bottles provide a simple interface that promotes interaction and the communication of affect.

What We Did:

User Scenario

Two people walk into the room in silence. They look at each other and down at the bottles between them. One person picks up the angry bottle, shakes it hard, and releases the cap, which flies across the room on a string, followed by a mad sequence of intensely angry words, nonsensical but intentional. As they fade, the person gives the bottle another shake, which results in a quick, short outburst.

The second person picks up the depressed/submissive bottle and pulls up the spring-loaded cap, letting out a smoky string of diffuse words, quietly stated and fading into silence. She cocks the cap to the side and pours the bottle out, letting out a stream of flowing sadness, punctuated by intense sobs, and falling to the floor in self-pity.

The first person takes the sad bottle and shakes it into the stream of the angry bottle, resulting in yelping sadness and sad words purged by angry overlays. The second person takes the happy bottle, closes the angry bottle, and lays the sad and happy bottles side by side, releasing the lids. Out comes a bubbling stream of mixed emotions and apologies, slowing after the initial flow. The first person again takes the angry bottle and places it next to the other two, opening its lid as well. The result is neutral speech, spiced with emotional words but at low intensity: a relaxed conversation with an occasional friendly argument.

The second person places the bottles upright and closes them one by one - angry, happy, sad - and the two people walk out of the room in silence.

System Design

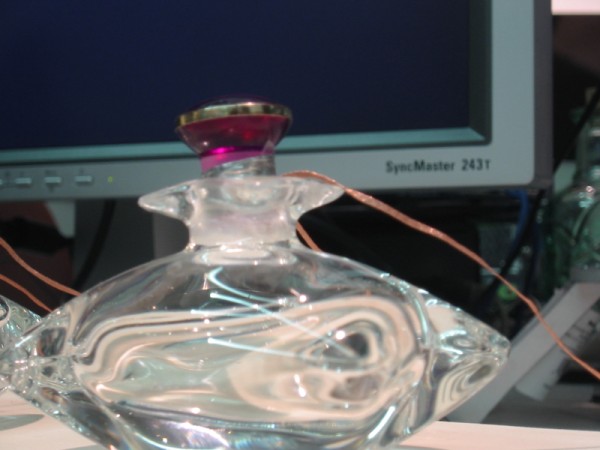

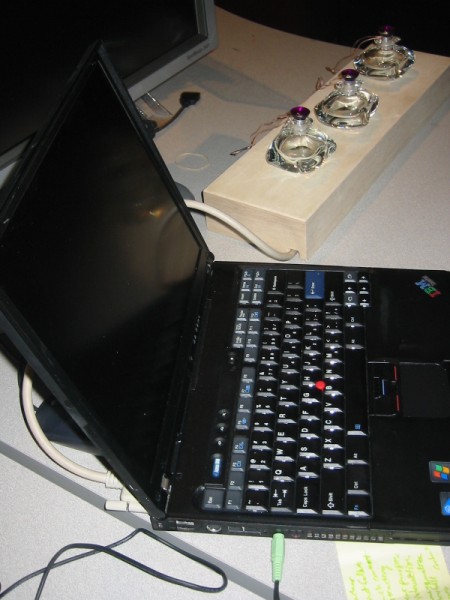

The Bottles

The system consists of three bottles that represent three emotions: Angry, Happy, and Sad. They are placed near each other and represent three possible emotional states of a person, emotions that can be ‘bottled up’ inside. We chose three bottles because their combinations stay simple: each bottle is either open or closed, giving 2^3 = 8 possible states. Rather than trying to represent every possible emotional state of a person, we wanted to concentrate on the interface between emotions, that is, the interaction and meaning between clear emotional states. Whenever a bottle is opened, the system generates vocal affective output that corresponds to the emotion in the bottle, as if the emotion within were being let out.
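Because each bottle is independently open or closed, three bottles give 2^3 = 8 states, one of which is "all closed". The sketch below is written in Python rather than the project's MATLAB, and the bit ordering and bottle names are assumptions made for illustration. It encodes the state as a 3-bit mask and lists every configuration, each of which would select one output script.

```python
# Hypothetical bit order: bit 0 = angry, bit 1 = happy, bit 2 = sad.
BOTTLES = ("angry", "happy", "sad")


def open_bottles(state: int) -> tuple:
    """Return the names of the bottles that are open in a 3-bit state."""
    return tuple(name for bit, name in enumerate(BOTTLES) if (state >> bit) & 1)


def all_states() -> list:
    """Enumerate every open/closed configuration: 2 ** 3 = 8 states."""
    return [open_bottles(state) for state in range(2 ** len(BOTTLES))]


if __name__ == "__main__":
    # Each configuration would select its own output script.
    for state, names in enumerate(all_states()):
        print(f"{state:03b}", names if names else "all closed")
```

A bitmask keeps the state a single small integer, which matches how the switches arrive as individual input lines.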

The Output

We each took a first pass at the output and stopped there. The MATLAB code chooses sounds to string together according to a script written for each bottle state (each bottle can be open or closed independently). Within each script, sounds are selected by their affect. In the current implementation the affect can be happy, sad, or angry, but the approach allows for a more extensive and subtle classification. Each output lasts a few seconds before the loop repeats, so that a change in the state of the bottles is detected promptly and the sound keeps changing. Within the scripts, the sounds are combined through a number of simple transformations: concatenation of two words, change in loudness, change in speed of articulation, insertion of silence, and selection of a subset of a sound file. Our aim was to use simple tools that automatically combine the affect already present in the sound library, without considering the specific content of the sounds.
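The transformations listed above can be sketched on raw audio samples. This is a minimal Python illustration, not the project's MATLAB code: sounds are assumed to be lists of floats in [-1.0, 1.0] at a hypothetical 8 kHz sample rate, and `angry_script` is a hypothetical example of how one script might chain the operations.

```python
SAMPLE_RATE = 8000  # assumed sample rate in Hz


def concatenate(a, b):
    """Join two sound segments end to end."""
    return list(a) + list(b)


def change_loudness(samples, gain):
    """Scale the amplitude by gain and clip to [-1.0, 1.0]."""
    return [max(-1.0, min(1.0, s * gain)) for s in samples]


def change_speed(samples, factor):
    """Nearest-neighbour resampling: factor > 1 shortens the sound (and raises pitch)."""
    n_out = int(len(samples) / factor)
    return [samples[min(int(i * factor), len(samples) - 1)] for i in range(n_out)]


def silence(seconds):
    """Create a run of zero-valued samples."""
    return [0.0] * round(seconds * SAMPLE_RATE)


def excerpt(samples, start_s, end_s):
    """Select the part of a sound between start_s and end_s seconds."""
    return samples[round(start_s * SAMPLE_RATE):round(end_s * SAMPLE_RATE)]


def angry_script(word_a, word_b):
    """Hypothetical script: two words run together, sped up, louder, then a pause."""
    burst = change_speed(concatenate(word_a, word_b), 1.25)
    return concatenate(change_loudness(burst, 1.5), silence(0.3))
```

The nearest-neighbour speed change also shifts pitch, so it is only a rough stand-in for changing the speed of articulation; a pitch-preserving time stretch would be closer to the intent.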

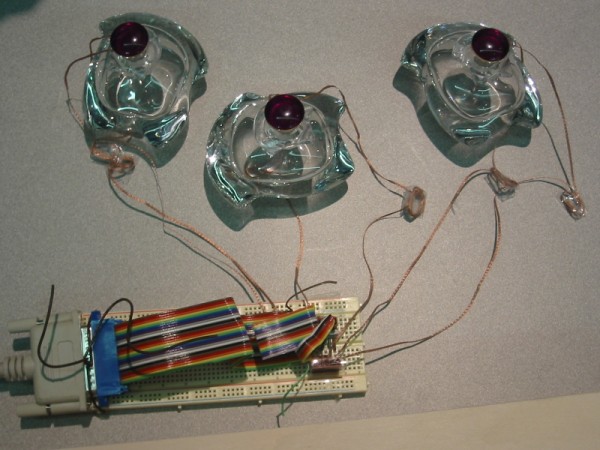

The Sensors

The initial goal was to sense the opening and closing, shaking, and tilting of the bottles, and different sensors were considered for detecting tilt and shaking to make the experience more interactive. Currently, the bottles use only simple switches that detect whether each bottle is open or closed. The switch mechanism is a hand-built contact that fits into the top of each bottle and is made from aluminum tape and desoldering wire.

The bottle state is transmitted to the computer through a parallel port interface. The control pins of the parallel port are used as input lines to transmit the state of the bottle to our software written in MATLAB.
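Reading the switches then comes down to decoding the control register. The sketch below assumes the standard PC convention that the control register sits at base address + 2 and that bits C0 (nStrobe), C1 (nAutoFeed) and C3 (nSelectIn) are inverted in hardware while C2 (nInit) is not. The mapping of bottles to bits and the choice of which logical level means "open" are hypothetical. Actually reading the port is OS-specific and omitted, so the function only decodes a byte that has already been read.

```python
# Standard PC parallel port control register (base address + 2): bits C0
# (nStrobe), C1 (nAutoFeed) and C3 (nSelectIn) are inverted in hardware.
HARDWARE_INVERTED = 0b1011

# Hypothetical wiring of each bottle switch to a control bit.
BOTTLE_BITS = {"angry": 0, "happy": 1, "sad": 2}


def decode_bottles(control_byte: int, open_level: int = 1) -> dict:
    """Return {bottle: is_open} from a raw control-register value.

    open_level is the assumed logical level (after undoing the hardware
    inversion) that a switch reports when its bottle is open.
    """
    logical = (control_byte ^ HARDWARE_INVERTED) & 0x0F  # undo inversion, keep C0-C3
    return {
        name: ((logical >> bit) & 1) == open_level
        for name, bit in BOTTLE_BITS.items()
    }
```

Undoing the inversion in one XOR keeps the rest of the software independent of which control pins happen to be inverted.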

The Library

Before use, the software loads sound files into an indexing matrix, where each sound can be classified by valence, arousal, and dominance. During initialization, the system sorts the matrix into lists of sounds with similar affect: happy, sad, angry, and neutral. The same code can also sort the sounds by valence, arousal, and dominance.
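The indexing step can be pictured as a table of records that is grouped by affect label or ordered along one dimension. This Python sketch stands in for the MATLAB matrix; the `Sound` record, the [-1, +1] scales, and the example files are all assumptions for illustration.

```python
from collections import defaultdict
from dataclasses import dataclass


@dataclass
class Sound:
    path: str         # sound file name
    label: str        # hand-assigned affect: "happy", "sad", "angry" or "neutral"
    valence: float    # assumed scale: -1 (negative) to +1 (positive)
    arousal: float    # assumed scale: -1 (calm) to +1 (excited)
    dominance: float  # assumed scale: -1 (submissive) to +1 (dominant)


def group_by_label(library):
    """Split the index into lists of sounds that share an affect label."""
    groups = defaultdict(list)
    for sound in library:
        groups[sound.label].append(sound)
    return dict(groups)


def sort_by(library, dimension, descending=False):
    """Order sounds along "valence", "arousal" or "dominance"."""
    return sorted(library, key=lambda s: getattr(s, dimension), reverse=descending)


# Hypothetical entries for illustration.
library = [
    Sound("shout.wav", "angry", -0.6, 0.8, 0.5),
    Sound("sigh.wav", "sad", -0.7, -0.5, -0.4),
    Sound("growl.wav", "angry", -0.4, 0.9, 0.6),
]
```

Grouping by label gives the per-emotion lists a script draws from, while sorting by a dimension supports the finer-grained selection the library was meant to allow.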

The Sounds

We started by reading Raul Fernandez's thesis [Fernandez, 2004] on automatic classification of voice by affect. That approach makes sense when parsing the affect contained in a speaker's message, but we were interested in a subset of what he studied: the affect that a short segment alone communicates to a listener. We therefore decided to hand-classify a small library of sounds according to perceived affect. We chose high-affect sounds from open-source files on the Internet Archive (www.archive.org) and other sources. The files were divided manually into segments long enough to avoid choppiness, yet as short as possible while still retaining affect.

Results:

The purpose of our project changed dramatically as we simplified the design. Although the system is operational, the simplified design exposes many weaknesses.

Despite our efforts to remove content by chopping the sound files into small segments that contained affect, the content is strongly present and gets in the way of presenting and understanding the affect of the piece. Some of the files are still too long, even by our final standards: sentences convey affect more clearly, but they also carry clear content.

The input sound library is small and coarsely classified (just happy, sad, and angry), which makes the output very constrained. The classification was done directly for the three chosen emotions and does not actually represent valence, arousal, and dominance as intended. As a result, the output when several bottles are open does not clearly express the combined emotions; a classification based on valence, arousal, and dominance would have produced output that combines multiple emotions better.
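One way a dimensional classification could combine bottles is to treat each emotion as a point in valence-arousal-dominance space, average the points for the open bottles, and play the sound closest to that average. This Python sketch is an assumption about how such a scheme might work, not a description of the current system; the target coordinates are illustrative placeholders rather than measured values.

```python
import math

# Illustrative (valence, arousal, dominance) targets; placeholder values only.
TARGETS = {
    "happy": (0.8, 0.5, 0.4),
    "sad": (-0.6, -0.4, -0.5),
    "angry": (-0.5, 0.7, 0.6),
}


def blended_target(open_bottles):
    """Average the targets of the open bottles; None when all are closed."""
    if not open_bottles:
        return None
    points = [TARGETS[name] for name in open_bottles]
    return tuple(sum(axis) / len(points) for axis in zip(*points))


def nearest_sound(target, library):
    """Return the (name, (v, a, d)) entry closest to target (Euclidean distance)."""
    return min(library, key=lambda entry: math.dist(entry[1], target))
```

With these placeholder values, opening all three bottles averages to roughly (-0.1, 0.27, 0.17), a mild point near the middle of the space, which loosely matches the low-intensity neutral speech in the user scenario.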

The direct on/off feedback to the user is reasonably good, but no other manipulations are provided. This limits the user's interaction with the emotion bottles: beyond choosing which of the three emotions, or which combination of them, to release, the user has almost no control over the output. There is a long way to go before Stephen Hawking could use it to express emotion in his text-to-speech, and at this point Kelly Dobson's Screambody would be more useful for empathizing with users (or creators).

Testing of the system has been very limited. After getting the code to work, we built the physical interface and each made a first pass at the affective output. We have barely had a chance to play with it ourselves, and two people have tried the interface.

Failure modes:

If a switch is shorted, its bottle remains ‘closed’. The parallel port hardware is fragile and built on a breadboard; if a connector falls out at any junction, the affected switches default to open.

Conclusions:

The number of degrees of freedom is critical to a truly interactive emotion system. The user needs enough of them to assert control over the output, but not so many that the system becomes confusing or difficult to understand.

The content of speech prevents full appreciation of the affect in the voice when the speech is taken out of context. The content captures the attention of a listener and engages a cognitive process that overtakes the emotional reaction in most speech fragments. The sound quality and volume can also prevent the user from fully appreciating the affect, as the user tries instead to figure out what is being said.

Words by themselves are difficult to use for affect expression. Words are not surrounded by natural pauses and do not contain a complete thought in affect or content. This may be improved by filtering, but the short length makes it difficult to interpret affect in most cases.

Vocal emotion in everyday speech is very subtle, which makes it difficult to represent strong emotions. Recognition of affect in a single word or phrase does not carry over to a string of words: isolation seems to amplify perceived affect.

The best system is a system that works. The sooner you can get a system up the better, no matter how simplified. Our system needs a few more iterations in order to achieve our aims.

Future Work:

Due to the simplified design, considerable work can be done to improve the system. Some of the proposed improvements and directions the system can take are as follows:

- In natural interaction, a person may find it difficult to control their emotions once they become sufficiently intense. The bottles could emulate this behavior: while a bottle remains closed, the emotion inside it keeps building up, and after a sufficiently long period the sounds burst out automatically. This would also draw the attention of observers and encourage them to interact with the bottles.

- Development of an algorithm that can remove content while retaining affect in the input and/or output.

- The sound quality of the library needs to be consistent. Differences in sound quality and volume between files make the output inconsistent. Normalization functions that even out volume and noise across the library could reduce the distortion such variation introduces into the perceived affect.

- The library needs to be better populated and classified according to other features like valence, arousal and dominance. The library will be helpful to create a more convincing output by determining which possible emotions can exist concurrently.

- The library can later be expanded through a web interface and possibly through automatic download and affect classification of content from radio. Automatic affect classification may be achieved by analyzing the prosodic content of the speech, as proposed by Fernandez [Fernandez, 2004].
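The volume part of the normalization proposed above could be as simple as scaling every sound to a common root-mean-square (RMS) level. In this Python sketch the library is assumed to be a dictionary of sample lists in [-1.0, 1.0], and the target defaults to the mean RMS of the non-silent sounds. Noise reduction is not addressed, and clipping can leave a heavily boosted sound slightly below the target level.

```python
import math


def rms(samples):
    """Root-mean-square level of a sound (0.0 for an empty sound)."""
    return math.sqrt(sum(s * s for s in samples) / len(samples)) if samples else 0.0


def normalize_library(library, target_rms=None):
    """Scale every sound to a common RMS level (default: mean of non-silent sounds)."""
    levels = {name: rms(samples) for name, samples in library.items()}
    audible = [level for level in levels.values() if level > 0]
    if target_rms is None:
        target_rms = sum(audible) / len(audible) if audible else 0.0
    normalized = {}
    for name, samples in library.items():
        gain = target_rms / levels[name] if levels[name] > 0 else 0.0
        # Clip so that boosted sounds stay within [-1.0, 1.0].
        normalized[name] = [max(-1.0, min(1.0, s * gain)) for s in samples]
    return normalized
```

RMS tracks perceived loudness better than peak level, though a perceptual loudness measure would be a closer match for speech.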

References:

- Fernandez, R. (2004). A Computational Model for the Automatic Recognition of Affect in Speech. PhD thesis, MIT, February 2004.


- Picard, R. W. (1997). Affective Computing. MIT Press, Cambridge, MA.

- Breazeal, C. and Brooks, R. (2004). "Robot Emotion: A Functional Perspective." In J.-M. Fellous and M. Arbib (eds.), Who Needs Emotions: The Brain Meets the Robot. MIT Press (forthcoming 2004).


- Ishii, H. (2004). Bottles: A Transparent Interface as a Tribute to Mark Weiser. IEICE Transactions on Information and Systems, Vol. E87-D, No. 6, pp. 1299-1311, June 2004.


- Sound Source 1: archive.org

- Sound Source 2: CallHome English database, provided by Rosalind Picard.