Intro

“2H2K: LawyeR” is a multimedia project exploring the fate of legal work in a future of artificial labor and ubiquitous interactive machine learning.

This project arose out of 2H2K, my ongoing collaboration with John Powers where we’re trying to use science fiction, urbanism, futurism, cinema, and visual effects to imagine what life could be like in the second half of the 21st century. One of the major themes to emerge in the 2H2K project is something we’ve taken to calling “artificial labor”. While we’re skeptical of the claims of artificial intelligence, we do imagine ever-more sophisticated forms of automation transforming the landscape of work and economics. Or, as John puts it, robots are Marxist.

Due to our focus on urbanism and the built-environment, John’s stories so far have mainly explored the impact of artificial labor on physical work: building construction, forestry, etc. For this project, I wanted to look at how automation will affect white collar work.

Having known a number of lawyers who worked at large New York firms such as Skadden and Kirkland and Ellis, one form of white collar work that seemed especially ripe for automation jumped out to me: document evaluation for legal discovery. As I’ll explain in more detail below, discovery is the most labor-intensive component of large corporate lawsuits and it seems especially amenable to automation through machine learning. Even the widespread application of technologies that already exist today would radically reduce the large number of high-paid lawyers and paralegals that currently do this work.

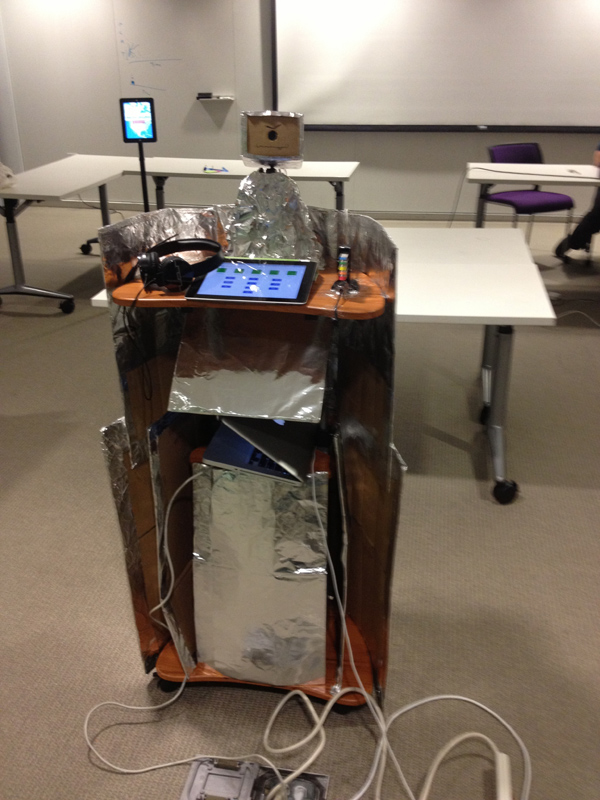

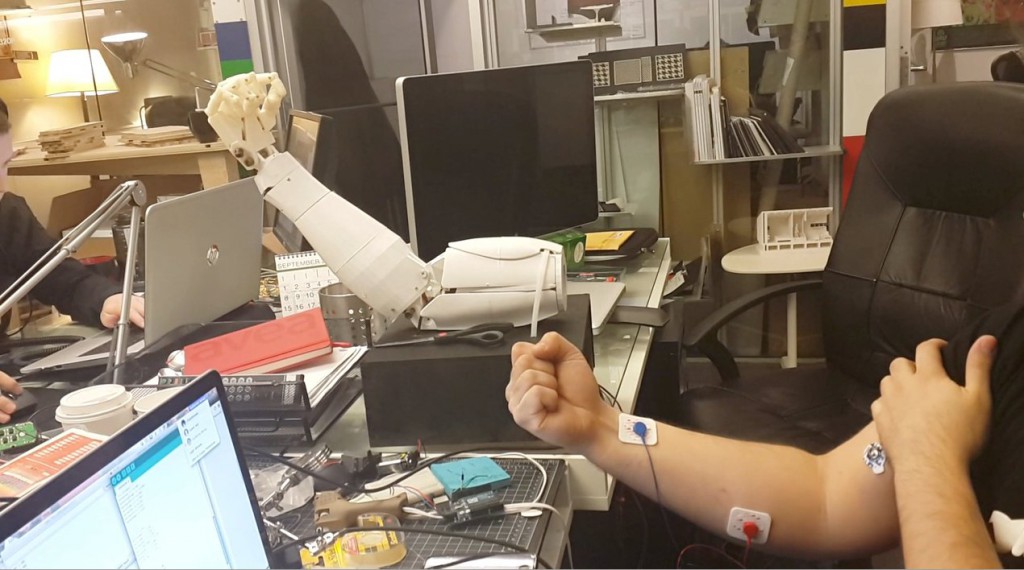

In the spirit of both 2H2K and the MIT Media Lab class, Science Fiction to Science Fabrication (for which this project acted as a final), I set out to explore the potential impact of machine learning on the legal profession through three inter-related approaches:

- Prototyping a real interactive machine learning system for legal discovery.

- Writing and illustrating a sci-fi comic telling the story of how it might feel to work in a law firm of 2050 that’s been transformed by this new technology.

- Designing the branding for an imaginary firm working in this field.

For the rest of this post, I’ll discuss these parts of the project one-by-one and describe what I learned from each. These discussions will range from practical things I learned about machine learning and natural language processing to interface design issues to the relationship between legal discovery and voyeurism.

Before beginning, though, I want to mention one of the most powerful and surprising things I learned in the course of this project. Using science fiction as the basis of a design process has led me to think that design fiction is incredibly broken. Most design fiction starts off with rank speculation about the future, imagining a futuristic device or situation out of whole cloth. Only then does it engage prototyping and visual effects technologies in order to communicate the consequences of the imagined device through “diegetic prototypes”, i.e. videos or other loosely narrative formats that depict the imagined technology in use.

This now seems perfectly backwards to me. For this project, by contrast, I started with a real but relatively cutting edge technology (machine learning for document recall). I then engaged with it as a programmer and technologist until I could build a system that worked well enough to give me (with my highly specialized technical knowledge) the experience of what it would be like to really use such a system in the real world. Having learned those lessons, I then set out to communicate them using a traditional storytelling medium (in this case, comics). I used my technical know-how to gain early-access to the legendarily unevenly distributed future and then I used my storytelling ability to relay what I learned.

Design fiction uses imagination to predict the future and prototyping to tell stories. Imagination sucks at resolving the complex causes that drive real world technology development and uptake. Prototyping sucks at producing the personal identification necessary to communicate a situation’s emotional effect. This new process – call it Science Fiction Design, maybe? – reverses this mistake. It uses prototyping and technological research to predict the future and storytelling media to tell stories.

(Much of the content of this post is reproduced in the third episode of the 2H2K podcast where John and I discuss this project. The 2H2K podcast consists of semi-regular conversations between the two of us about the stories and technologies that make up the project. Topics covered include urbanism, labor, automation, robots, interactive machine learning, cross-training, cybernetics, and craft. You can subscribe here.)

What is Discovery?

According to Wikipedia:

Discovery, in the law of the United States, is the pre-trial phase in a lawsuit in which each party, through the law of civil procedure, can obtain evidence from the opposing party by means of discovery devices including requests for answers to interrogatories, requests for production of documents, requests for admissions and depositions.

In other words, when you’re engaged in a lawsuit, the other side can request internal documents and other information from your company that might help them prove their case or defend against yours. This can include internal emails and memos, call records, financial documents, and all manner of other things. In large corporate lawsuits the quantity of documents involved can be staggering. For example, during the US government’s lawsuit against Big Tobacco six million documents were discovered totaling more than 35 million pages.

Each of these documents needs to be reviewed for information that is relevant to the case. This is not simply a matter of searching for the presence or absence of particular words, but of making a legal judgment based on the content of the document. Does it discuss a particular topic? Is it evidence of a particular kind of relationship between two people? Does it represent an order or instruction from one party to another?

In large cases this review is normally performed by hordes of first year associates, staff attorneys, and paralegals at large law firms. Before the crash of 2008, large law firms, which do the bulk of this kind of work and employ hundreds or even thousands of such workers, hired more than 30% of new law school graduates (see What’s New About the New Normal: The Evolving Market for New Lawyers in the 21st Century by Bernard A. Burk of UNC Chapel Hill).

As you can imagine, this process is wildly expensive both for law firms and their clients.

Legal Discovery and Machine Learning

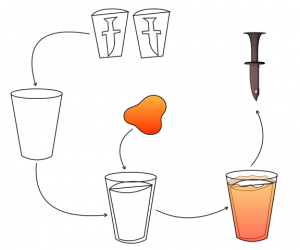

Legal discovery is a perfect candidate for automation using recent advances in machine learning. From a machine learning perspective discovery is a classification task: each document must be labeled as either relevant or irrelevant to the case. Since the legal issues, people involved, and topics discussed vary widely between cases, discovery is a prime candidate for supervised learning, a machine learning approach where humans provide labels for a small subset of documents and then the machine learning system attempts to generalize to the full set.

Machine learning differs from traditional information retrieval systems such as full-text search exactly because of this ability to generalize. Machine learning systems represent their documents as combinations of “features”: the presence or absence of certain words, when a message was sent, who sent it, who received it, whether or not it includes a dollar amount or a reference to a stock ticker symbol, etc. (Feature selection is the single most critical aspect of machine learning engineering; more about it below when I describe the development of my system.) Supervised machine learning algorithms learn the patterns that are present in these features amongst the labeled examples they are given. They learn what types of combinations of features characterize documents that are relevant vs irrelevant and then they classify a new unseen document by comparing its features.

Information retrieval systems are currently in widespread use throughout the legal field. One of the landmark information retrieval systems, IBM’s STAIRS system, was even originally developed in order to reduce the expense of defending against an antitrust lawsuit in 1969 before being commercialized in 1973.

However, there is little public sign that machine learning techniques are in widespread use at all. (It’s impossible to know how widely these techniques are used within proprietary systems inside of firms, of course.) One of the most visible proponents of machine learning for legal discovery is former Bell Labs researcher, David Lewis. Lewis’s Purdue lecture, Machine Learning for Discovery in Legal Cases represents probably the best public survey of the field.

This seems on the verge of changing. In a March 2011 story in the New York Times, Armies of Expensive Lawyers, Replaced by Cheaper Software, John Markoff reported on a burgeoning set of companies beginning to compete in this field including Clearwell Systems, Cataphora, Blackstone Discovery, and Autonomy, which has since been acquired by HP. Strikingly, Bill Herr, one of the lawyers interviewed for Markoff’s story, used one of these new e-discovery systems to review a case his firm had worked on in the 80s and 90s and learned that the lawyers had only been 60 percent accurate, only “slightly better than a coin toss”.

Prototyping an Interactive Machine Learning System for E-Discovery

Having reviewed this history, I set out to prototype a machine learning system for legal discovery.

The first thing I needed in order to proceed was a body of documents from a legal case against which I could train and test a classifier. Thankfully in Brad Knox’s Interactive Machine Learning class this semester, I’d been exposed to the existence of the Enron corpus. Published by Andrew McCallum of CMU in 2004, the Enron corpus collects over 650,000 emails from 150 users obtained during the Federal Energy Regulatory Commission’s investigation of Enron and made public as part of the federal case against the company. The Enron emails make the perfect basis for working on this problem because they represent real in situ emails from a situation where there were actual legal issues at stake.

After obtaining the emails, I consulted with a lawyer in order to understand some of the legal issues involved in the case (I chose my favorite criminal defense attorney: my dad). The government’s case against Enron was huge, sprawling, and tied up with many technicalities of securities and energy law. We focused on insider trading, situations where Enron employees had access to information not available to the wider public, which they used for their own gain or to avoid losses. In the case of Enron this meant both knowledge about the commodities traded by the company and the company’s own stock price, especially in the time period of the latter’s precipitous collapse and the government’s investigation.

The World of Martin Cuilla

With this knowledge in hand, I was ready to label a set of Enron emails in order to start the process of training a classifier. And that’s when things started getting personal. In order to label emails as relevant or irrelevant to the question of insider trading I’d obviously need to read them.

So, unexpectedly I found myself spending a few hours reading 1028 emails sent and received by Martin Cuilla, a trader on the Western Canada Energy Desk at Enron. To get started labeling, I chose one directory within the dataset, a folder named “cuilla-m”. I wasn’t prepared for the intimate look inside someone’s life that awaited me as I conducted this technical task.

Of the 1028 emails belonging to Mr. Cuilla, about a third of them relate to the Enron fantasy football league, which he administered:

A chunk of them from early in the dataset reveal the planning details of Cuilla’s engagement and wedding.

They include fascinating personal trivia like this exchange where Cuilla buys a shotgun from a dealer in Houston:

In the later period of the dataset, they include conversations with other Enron employees who are drunk and evidence of Cuilla’s drinking and marital problems:

As well as evidence of an escalating gambling problem (not a complete shocker in a day trader):

And, amongst all of this personal drama, there are emails that may actually be material to the case where Cuilla discusses predictions of gas prices:

orders trades:

and offers to review his father’s stock portfolio to avoid anticipated losses (notice that his father also has an Enron email address):

In talking to friends who’ve worked at large law firms, I learned that this experience is common: large cases always become soap operas. Apparently, it’s normal when reading the previously private correspondence of any company to come across evidence of at least a few affairs, betrayals, and other such dramatic material. Part of working amongst hundreds of other lawyers, paralegals, and staff on such a case is the experience of becoming a collective audience for the soap opera that the documents reveal, gossiping about what you each have discovered in your reading.

As I learned in the course of building this prototype: this is an experience that will survive into a world of machine learning-based discovery. However, it will likely be transformed from the collective experience of large firms to a far more private and voyeuristic one as individuals (or distributed remote workers) do this work alone. This was an important revelation for me about the emotional texture of what this work might feel like in the future and (as you’ll see below) it became a major part of what I tried to communicate with the comic.

Feature Engineering and Algorithm Selection

Now that I’d labeled Martin Cuilla’s emails, I could begin the process of building a machine learning system that could successfully predict these labels. While I’ve worked with machine learning before, it’s always been in the context of computer vision, never natural language.

As mentioned above, the process of designing machine learning systems has two chief components: feature engineering and learning algorithm selection. Feature engineering covers what information you extract from each document to represent it. The learning algorithm is how you use those features (and your labels) to build a classifier that can predict labels (such as relevant/irrelevant) for new documents. Most of the prestige and publicity in the field goes to the creation of learning algorithms. However, in practice, feature engineering is much more important for solving real world problems. The best learning algorithm will produce terrible results with the wrong features. And, given good feature design, the best algorithms will only incrementally outperform the other options.

So, in this case, my lack of experience with feature engineering for natural language was a real problem. I barged forwards nonetheless.

For my first prototype, I extracted three different kinds of features: named entities, extracted addresses, and date-sent. My intuition was that named entities (i.e. stock symbols, company names, place names, etc) would represent the topics discussed, the people sending and receiving the messages would represent the command structure within Enron, and the date sent would relate to the progress of the government’s case and the collapse of the company.

I started by dividing Martin Cuilla’s emails into training and testing sets. I developed my system against the training set and then tested its results against the test set. I used CoreNLP, an open source natural language processing library from Stanford, to extract named entities from the training set. You can see the results in the GitHub repo for this project here. (Note: all of the code for this project is available in my GitHub repo: atduskgreg/disco and the code from this stage of the experiment is contained in this directory.) I treated this list as a “Bag of Words”, creating a set of binary features corresponding to each entity with the value of 1 given when an email included the entity and 0 when it did not. I then did something similar for the email addresses in the training set, which I also treated as a bag of words. Finally, to include the date, I transformed the date into a single feature: a float which was scaled to the timespan covered by the corpus. In other words, a 0.0 for this feature would mean an email was sent at the very start of the corpus and a 1.0 that it was the last email sent. The idea being that emails sent close together in time would have similar values.
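To make the feature layout concrete, here is a minimal sketch in Python of the three feature groups described above. It is not the code from the repo: the toy emails, the field names, and the helpers `binary_bag`, `scale_date`, and `featurize` are all hypothetical, and the entities are written in by hand rather than extracted with CoreNLP.

```python
from datetime import datetime

def binary_bag(vocabulary, items):
    """One 0/1 feature per vocabulary entry: 1 if the email contains it."""
    present = set(items)
    return [1 if entry in present else 0 for entry in vocabulary]

def scale_date(sent, earliest, latest):
    """Map a timestamp onto [0.0, 1.0] across the corpus timespan."""
    span = (latest - earliest).total_seconds()
    if span == 0:
        return 0.0
    return (sent - earliest).total_seconds() / span

# Hypothetical toy emails; real entities would come from an NER step.
emails = [
    {"entities": ["ENE", "Houston"], "addresses": ["a@enron.com"],
     "sent": datetime(2000, 1, 1)},
    {"entities": ["Houston"], "addresses": ["b@enron.com", "a@enron.com"],
     "sent": datetime(2001, 1, 1)},
    {"entities": ["ENE"], "addresses": ["b@enron.com"],
     "sent": datetime(2002, 1, 1)},
]

# Build each vocabulary once so every email gets the same feature columns.
entity_vocab = sorted({e for m in emails for e in m["entities"]})
address_vocab = sorted({a for m in emails for a in m["addresses"]})
earliest = min(m["sent"] for m in emails)
latest = max(m["sent"] for m in emails)

def featurize(email):
    """Concatenate entity bits, address bits, and the scaled date."""
    return (binary_bag(entity_vocab, email["entities"])
            + binary_bag(address_vocab, email["addresses"])
            + [scale_date(email["sent"], earliest, latest)])

vectors = [featurize(m) for m in emails]
for vector in vectors:
    print(vector)
```

In a real train/test split the vocabularies and the date range would be computed from the training set only, so that nothing from the test set leaks into the features.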

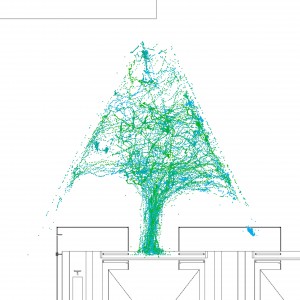

For the learning algorithm, I selected Random Decision Forest. Along with Support Vector Machines, Random Decision Forests are amongst the most effective widely-deployed machine learning algorithms. Unlike SVMs though, Random Decision Forests have a high potential for transparency. Due to the nature of the algorithm, most Random Decision Forest implementations provide an extraordinary amount of information about the final state of the classifier and how it derived from the training data (see my analysis of Random Decision Forest’s interaction affordances for more). I thought this would make it a superior choice for an interactive e-discovery system since it would allow the system to explain the reasons for its classifications to the user, increasing their confidence and improving their ability to explore the data, add labels, tweak parameters, and improve the results.

Since I maintain the OpenCV wrapper for Processing and am currently in the process of integrating OpenCV’s rich machine learning libraries, I decided to use OpenCV’s Random Decision Forest implementation for this prototype.

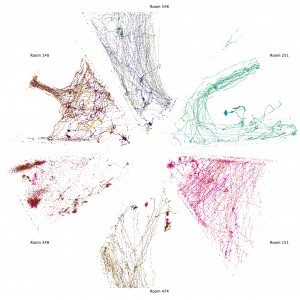

Results of the First Prototype: Accuracy vs Recall

The results of this first prototype were disappointing but informative. By the nature of legal discovery, it will always be a small minority of documents that are relevant to the question under investigation. In the case of Martin Cuilla’s emails, I’d labeled about 10% of them as relevant. This being the case, it is extremely easy to produce a classifier that has a high rate of accuracy, i.e. that produces the correct label for a high percentage of examples. A classifier that labels every email as irrelevant will have an accuracy rate around 90%. And, as you can see from the console output in the image above, that’s exactly what my system achieved.

While this might sound impressive on paper, it is actually perfectly useless in practice. What we care about in the case of e-discovery is not accuracy, but recall. Where accuracy measures how many of our predicted labels were correct, recall measures how many of the total relevant messages we found. Whereas accuracy is penalized for false positives as well as false negatives, recall only cares about avoiding false negatives: not missing any relevant messages. It is quite easy for a human to go through a few thousand messages to eliminate any false positives. However, once a truly relevant message has been missed it will stay missed.

With the initial approach, our classifier only ever predicted that messages were irrelevant. Hence, the 90+% accuracy rate was accompanied by a recall rate of 0. Unacceptable.
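The gap between the two metrics is easy to reproduce. The following Python sketch uses made-up labels (10 relevant emails out of 100, roughly matching the 10% figure above, not the actual Cuilla labels) and a hypothetical classifier that always answers "irrelevant":

```python
def accuracy(actual, predicted):
    """Fraction of all predictions that match the true label."""
    correct = sum(1 for a, p in zip(actual, predicted) if a == p)
    return correct / len(actual)

def recall(actual, predicted, positive=1):
    """Fraction of truly relevant items that were predicted relevant."""
    predictions_for_relevant = [p for a, p in zip(actual, predicted)
                                if a == positive]
    if not predictions_for_relevant:
        return 0.0
    found = sum(1 for p in predictions_for_relevant if p == positive)
    return found / len(predictions_for_relevant)

# Made-up labels: 1 = relevant, 0 = irrelevant.
actual = [1] * 10 + [0] * 90
always_irrelevant = [0] * 100

acc = accuracy(actual, always_irrelevant)
rec = recall(actual, always_irrelevant)
print(f"accuracy={acc:.2f} recall={rec:.2f}")  # accuracy=0.90 recall=0.00
```

The classifier never finds a single relevant email, yet its accuracy looks respectable simply because irrelevant emails dominate the set.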

Improving Recall: Lightside and Feature Engineering for Text

In order to learn how to improve on these results, I consulted with Karthik Dinakar, a PhD candidate at the lab who works with Affective Computing and Software Agents and is an expert in machine learning with text. Karthik gave some advice about what kinds of features I should try and pointed me towards Lightside.

Based on research done at CMU, Lightside is a machine learning environment specifically tailored to working with text. It’s built on top of Weka, a widely-used GUI tool for experimenting with and comparing machine learning algorithms. Lightside adds a suite of tools specifically to facilitate working with text documents.

Diving into Lightside, I set out to put Karthik’s advice into action. Karthik had recommended a different set of features than I’d previously tried. Specifically, he recommended unigrams and bigrams instead of named entities. Unigrams and bigrams are one- and two-word sequences, respectively. Their use is widespread throughout computational linguistics.
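For readers who haven't met them before, here is a small Python sketch of unigram and bigram extraction. Lightside computes these itself; the `tokenize` and `ngrams` helpers and the simple lowercase tokenizer are assumptions made for illustration, not a description of what Lightside does internally.

```python
import re

def tokenize(text):
    """Lowercase the text and split it into simple word tokens."""
    return re.findall(r"[a-z0-9']+", text.lower())

def ngrams(tokens, n):
    """Return every run of n consecutive tokens, joined by spaces."""
    return [" ".join(tokens[i:i + n]) for i in range(len(tokens) - n + 1)]

tokens = tokenize("Sell the restricted stock today")
unigrams = ngrams(tokens, 1)
bigrams = ngrams(tokens, 2)

print(unigrams)  # ['sell', 'the', 'restricted', 'stock', 'today']
print(bigrams)   # ['sell the', 'the restricted', 'restricted stock', 'stock today']
```

Each distinct unigram or bigram then becomes one feature column, in the same bag-of-words style as before. Bigrams like "restricted stock" capture phrases whose meaning the individual words miss.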

I converted the emails and my labels to CSV and imported them into Lightside. Its interface made it easy to try out these features, automatically calculating them from the columns I indicated. Lightside also made it easy to experiment with other computed features such as regular expressions. I ended up adding a couple of regexes designed to detect the presence of dollar amounts in the emails.
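A regex feature of this kind can be sketched in a few lines of Python. The pattern below is a hypothetical stand-in, not one of the regexes I actually used in Lightside, and `has_dollar_amount` is an illustrative name:

```python
import re

# Hypothetical pattern: a dollar sign, an optional space, then digits with
# optional thousands separators and an optional decimal part.
DOLLAR_AMOUNT = re.compile(r"\$\s?\d[\d,]*(?:\.\d+)?")

def has_dollar_amount(text):
    """Binary feature: 1 if the text mentions a dollar amount, else 0."""
    return 1 if DOLLAR_AMOUNT.search(text) else 0

print(has_dollar_amount("We booked $1,250.00 on the deal"))  # 1
print(has_dollar_amount("Fantasy football draft is Friday"))  # 0
print(DOLLAR_AMOUNT.findall("Paid $1,250.00 and then $30"))   # ['$1,250.00', '$30']
```

Like the n-gram features, the result is a single 0/1 column per email, so it slots into the same feature vector.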

Lightside also provides a lot of additional useful information for evaluating classifier results. For example, it can calculate “feature weights”, how much each feature contributed to the classifier’s predictions.

Here’s a screenshot showing the highest-weighted features at one point in the process:

The first line is one of my regexes designed to detect dollar amounts. Other entries are equally intriguing: “trades”, “deal”, “restricted”, “stock”, and “ene” (Enron’s stock ticker symbol). Upon seeing these, I quickly realized that they would make an excellent addition to the final user interface. They provide insight into aspects of the emails the system has identified as relevant and potentially powerful user interface hooks for navigating through the emails to add additional labels and improve the system’s results (more about this below when I discuss the design and implementation of the interface).

In addition to tools for feature engineering, Lightside makes it easy to compare multiple machine learning algorithms. I tested out a number of options, but Random Decision Forest and SVM performed the best. Here were some of their results early on:

As you can see, we’re now finally getting somewhere. The confusion matrices compare the models’ predictions for each value (0 being irrelevant and 1 being relevant) with reality, letting you easily see false negatives, false positives, true negatives, and true positives. The bottom row of each matrix is the one that we care about. That row represents the relevant emails and shows the proportions with which the model predicted 0 or 1. We’re finally getting predictions of 1 for about half of the relevant emails.

Notice also, the accuracy rates. At 0.946 the Random Decision Forest is more accurate than the SVM at 0.887. However, if we look again at the confusion matrix, we can see that the SVM detected 11 more relevant emails. This is a huge improvement in recall so, despite Random Forest’s greater potential for transparency, I selected SVM as the preferred learning algorithm. As we learned above, recall matters above all else for legal discovery.
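To show how the bottom row of a confusion matrix relates to recall, here is a short Python sketch. The labels are invented for illustration and are not the Lightside results discussed above; rows are the actual label and columns the predicted label, matching the layout described.

```python
def confusion_matrix(actual, predicted):
    """2x2 matrix: rows are actual labels, columns are predicted labels."""
    matrix = [[0, 0], [0, 0]]
    for a, p in zip(actual, predicted):
        matrix[a][p] += 1
    return matrix

def recall_from_matrix(matrix):
    """Recall uses only the bottom row: the actually relevant emails."""
    false_negatives, true_positives = matrix[1]
    total_relevant = false_negatives + true_positives
    return true_positives / total_relevant if total_relevant else 0.0

# Invented labels: 0 = irrelevant, 1 = relevant.
actual    = [0, 0, 0, 0, 0, 0, 1, 1, 1, 1]
predicted = [0, 0, 0, 0, 0, 1, 0, 0, 1, 1]

matrix = confusion_matrix(actual, predicted)
print(matrix[0])  # [5, 1] -> true negatives, false positives
print(matrix[1])  # [2, 2] -> false negatives, true positives
print(recall_from_matrix(matrix))  # 0.5
```

Comparing two models on recall means comparing the right-hand cell of that bottom row: the model that moves more relevant emails out of the false negative cell wins, even if it also picks up more false positives in the top row.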

Building a Web Interface for Labeling and Document Exploration

So, now that I had a classifier well-suited to detecting relevant documents I set out to build an interface that would allow someone with legal expertise to use it for discovery. As in many other interactive machine learning contexts, designing such an interface is a problem of balancing the rich information and options provided by the machine learning algorithms with the limited machine learning knowledge and specific task focus of the user. In this case I wanted to make an interface that would help legal experts do their work as efficiently as possible while exposing them to as little machine learning and natural language processing jargon as possible.

(An aside about technical process: the interface is built as a web application in Ruby and Javascript using Sinatra, DataMapper, and JQuery. I imported the Enron emails into a Postgres database and setup a workflow to communicate bidirectionally with Lightside via CSVs (sending labels to Lightside and receiving lists of weighted features and predicted labels from Lightside). An obvious next iteration would be to use Lightside’s web server example to provide classification prediction and re-labeling as an HTTP API. I did some of the preliminary work on this and received much help from David Adamson of the Lightside project in debugging some of the problems I hit, but was unable to finish the work within the scope of this prototype. I plan to publish a simple Lightside API example in the future to document what I’ve learned and help others who’d like to improve on my work.)

The interface I eventually arrived at looks a lot like Gmail. This shouldn’t be too surprising since, at base, the user’s task is quite similar to that of Gmail’s users: browse, read, search, triage.

In addition to providing a streamlined interface for browsing and reading emails, the basic interface also displays the system’s predictions: highlighting in pink messages predicted as relevant. Further, it allows users to label messages as relevant or irrelevant in order to improve the classifier.

Beyond basic browsing and labeling, the interface provides a series of views into the machine learning system designed to help the user understand and improve the classifier. Simplest amongst these is a view that shows the system’s current predictions grouped by whether they’re predicted to be relevant or irrelevant. This view is designed to give the user an overview of what kind of messages are being caught and missed and a convenient place to correct these results by adding further labels.

The messages that have already been labeled show up in a sidebar on all pages. Individual labels can be removed if they were applied mistakenly.

The second such view exposes some technical machine learning jargon but also provides the user with quite a lot of power. This view shows the features extracted by Lightside, organized by whether they correlate with relevant or irrelevant emails. As you can see in the screenshot above, these features are quite informative about what message content is found in common amongst relevant emails.

Further, each feature is a link to a full-text search of the message database for that word or phrase. This may be the single most-powerful aspect of the entire interface. One of the lessons of the Google-era seems to be a new corollary to Clarke’s Third Law: any sufficiently advanced artificial intelligence is indistinguishable from search. These searches quite often turn up additional messages where the user can improve the results by applying their judgment to marginal cases by labeling them as relevant or irrelevant.

One major lesson from using this interface is that a single classifier is not flexible enough to capture all of the subtleties of a complex legal issue like insider trading. I can imagine dramatically improving on this current interface by adding an additional layer on top of what’s currently there that would allow the user to create multiple different “saved searches” each of which trained an independent classifier and which were composable in some way (for example through an interface option that would automatically add the messages matching highly negatively correlated terms from one search to the relevant group of another). The work of Saleema Amershi from Microsoft Research is full of relevant ideas here, especially her ReGroup paper about on-demand group-creation in social networks and her work on interactive concept learning.

Further, building this interface led me to imagine other uses for it beyond e-discovery. For example, I can imagine the leaders of a large company wanting versions of these saved-search classifiers run against their employees’ communications in real time. Whether as a preventative measure against potential lawsuits, in order to capture internal ‘business intelligence’, or simply out of innate human curiosity, it’s difficult to imagine such tools, after they come into existence, not getting used for additional purposes. To extend William Gibson’s famous phrase into a law of corporate IT: the management finds its own uses for things.

This leads me to the next part of the project: making a sci-fi comic telling the story of how it might feel to work in a 2050 law firm that’s been transformed by these e-discovery tools.

The Comic: Sci-Fi Storytelling

When I first presented this project in class, everyone nodded along to the technical parts, easily seeing how machine learning would better solve the practical problem. But the part that really got them was when I told the story of reading and labeling Martin Cuilla’s emails. They were drawn into Cuilla’s story along with me and also intrigued by my experience of unexpected voyeurism.

As I laid out in the beginning of this post, the goal of this project was to use a “Science Fiction Design” process – using the process of prototyping to find the feelings and stories in this new technology and then using a narrative medium to communicate those.

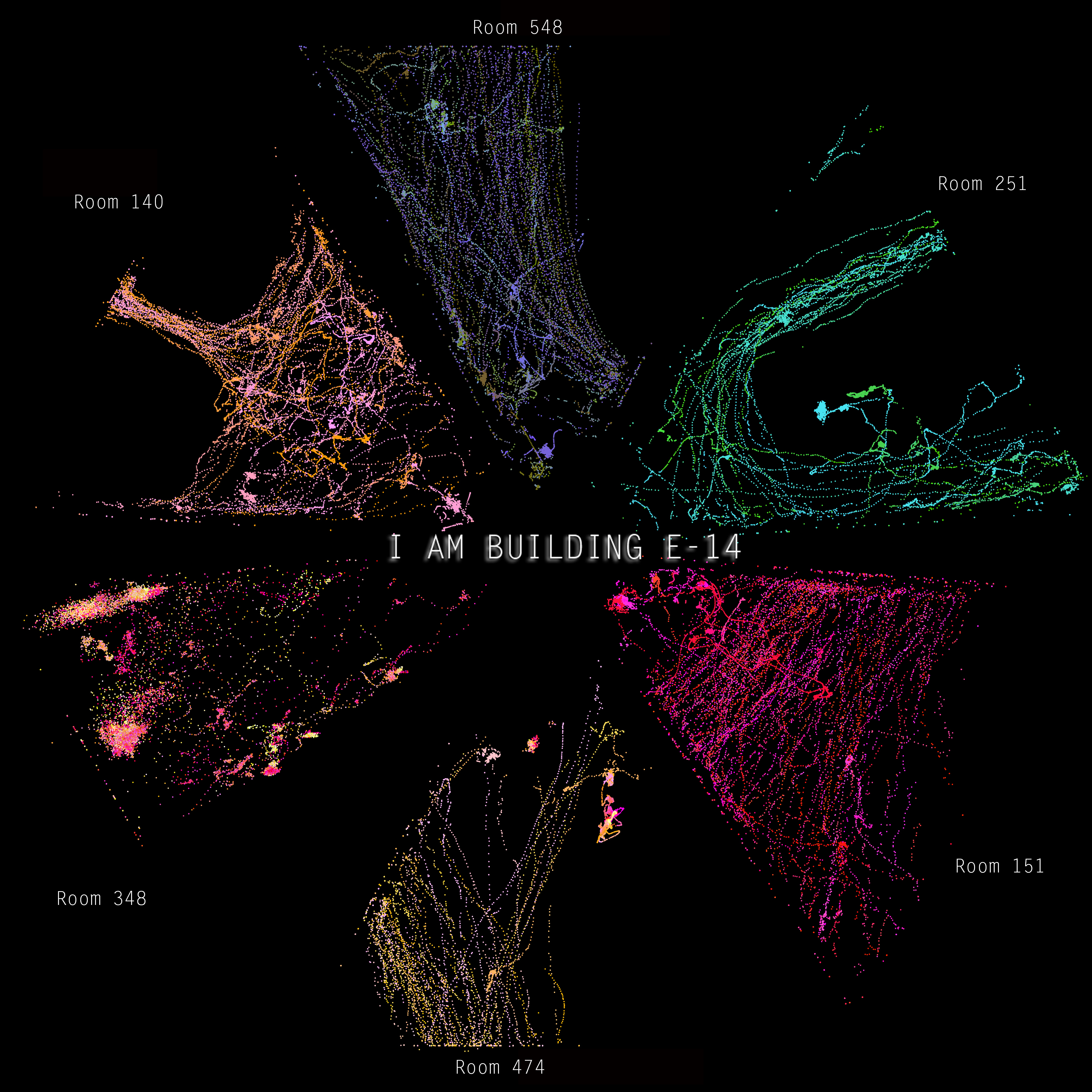

In parallel with the technical prototype, I’ve been working on a short comic to do just this. Since I’m a slow writer of fiction and an even slower comics artist, the comic is still unfinished. I’ve completed a script and I have three pages with finished art, only one of which (shown at the top of this section) I’ve also lettered and completed post-production. In this section, I’ll outline some of the discoveries from the prototype that have translated into the comic, shaping its story and presenting emotional and aesthetic issues for exploration. I’ll also show some in-progress pages to illustrate.

The voyeurism inherent in the supervised learning process is the first example of this. When I experienced it, I knew it was something that could be communicated through a character in my comic story. In fact, it helped create the character: someone who’s isolated, working a job in front of a computer without social interaction, but intrigued by the human stories that filter in through that computer interface, hungering to get drawn into them. This is a character who’s ripe for a mystery, an accidental detective. The finished and lettered page at the top of this section shows some of this in action. It uses actual screenshots of the prototype’s interface as part of a section of the story where the character explains his job and the system he uses to do it.

But where does such a character work? What world surrounds him, in what milieu does e-discovery take place? Well, thinking about the structure of my machine learning prototype, I realized that it was unlikely that current corporate law firms would do this work themselves. Instead, I imagined that this work would be done by the specialized IT firms I already encountered doing it (like Cataphora and Blackstone Discovery).

Firms with IT and machine learning expertise would have an easier time adding legal expertise by hiring a small group of lawyers than law firms would booting up sophisticated technical expertise from scratch. Imagine the sales pitch an IT firm with these services could offer to a big corporate client: “In addition to securely managing your messaging and hosting which we already do, now we can also provide defensive legal services that dramatically lower your costs in case of a lawsuit and reduce or eliminate your dependence on your super-expensive external law firm.” It’s a classic Clayton Christensen-esque case of disruption.

So, instead of large corporate law firms ever fully recovering from their circa–2008 collapse, I imagined that 2050 will see the rise of a new species of firm to replace them: hybrid legal-IT firms with heavy technological expertise in securely hosting large amounts of data and making it discoverable with machine learning. Today’s rooms full of paralegals and first-year associates will be replaced with tomorrow’s handful of sysadmins.

This is where my character works: at a tech company where a handful of people operate enormous data centers, instantly search and categorize entire corporate archives, and generally do the work previously done by thousands of prestigious and high-paid corporate lawyers.

And, as I mentioned in the last section, I don’t imagine that the services provided by such firms will stay limited to legal discovery. To paraphrase Chekhov, if in the first act you have created a way of surveilling employees, then in the following one you will surveil your own employees. In other words, once tools are built that use machine learning to detect messages that are related to any topic of inquiry, they’ll be used by managers of firms for preemptive prevention of legal issues, to capture internal business intelligence, and, eventually, to spy on their employees for trivial personal and political purposes.

Hence, the twist in my comic’s story comes when it turns out that the firm’s client has used their tools inappropriately and when, inevitably, the firm itself is also using them to spy on my main character. While he enjoys his private voyeuristic view into the lives of others, someone else is using the same tools to look into his.

Finally, a brief note about the style of the comic’s art. As you can see from the pages included here, the comic itself includes screenshots of the prototype interface I created early in the process. In addition to acting as background research, the prototype design process also created much more realistic computer interfaces than you’d normally see in fiction.

Another small use of this that I enjoyed was the text of the emails included at the bottom of that finished page. I started with the Enron emails and then altered the text to fit the future world of my story. (See the larger version where you can read the details.) My small tribute to Martin Cuilla and all he did for this project.

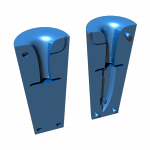

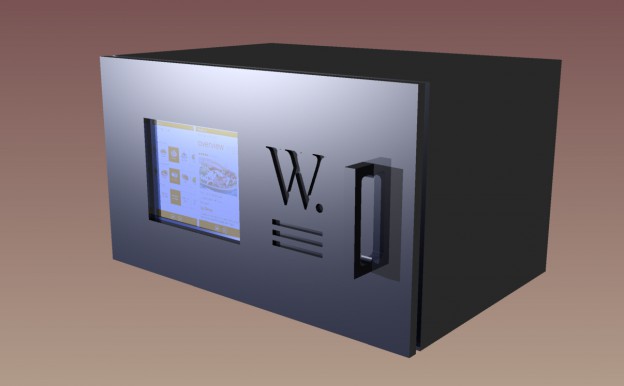

The other thing I’ve been experimenting with in the art style is the use of 3D models. In both the exterior of the building and the server room above, I downloaded or made 3D models (the building was created out of a 3D model of a computer fan, which I thought appropriate for a futuristic data center), rendered them as outlines, and then glued them onto my comics pages where I integrated them (through collage and in-drawing) with hand-drawn figures and other images. This was both pragmatic – radically accelerating the drawing of detailed perspective scenes, which would have otherwise been painstaking to create by hand – and designed to make the technology of this future world feel slightly absent and undefined, a blank slate onto which we can project our expectations of the future. After all, isn’t this how sci-fi props and scenery usually act?

Lawgorithm.com

Last and definitely least, as a lark I put together a website for the fictional firm described in the story (and whose branding adorned the interface prototype). I was quite proud of the domain I managed to secure for this purpose: lawgorithm.com. I also put an unreasonable amount of time into copying and satirizing the self-presentation style I imagined such a firm using: an unholy mashup of the pompous styling of corporate law firm websites like Skadden’s and the Apple-derivative style so common amongst contemporary tech startups. (I finished it off by throwing in a little Lorem Gibson for good measure.)

Despite a few masterpieces, satirical web design is an under-utilized medium. While comedic news sites like The Onion and The Daily Currant do look somewhat like the genre of news sites they skewer, they don’t take their visual mockery nearly as far as their textual mockery.