Activity Recognition in the Home Setting Using Simple and Ubiquitous Sensors

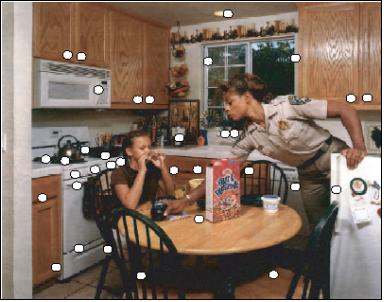

Have you seen The Invisible Man (1933) or Hollow Man (2000)? Have you noticed that you can tell what the invisible man is doing even though you cannot see him? How are you able to recognize it? Right! You can tell what he is doing by the objects he is manipulating. This is the main idea of this work: make the computer infer what you are doing from sequences of sensor activations that tell it which everyday objects you are manipulating. We have developed small wireless sensors, which we call MITes, that allow us to record this type of information; they are small enough to install on real objects in real homes.

Introduction

There are different approaches to human activity recognition. Some of them involve the analysis of complex sensor signals, such as video from cameras in computer vision and audio from microphones in auditory scene analysis. Activity recognition from these sensors is challenging because of the complexity of analyzing the signals (feature extraction) and the complexity of the pattern recognition and machine learning algorithms involved (especially for real-time performance). Furthermore, people usually perceive cameras and microphones as invasive and do not want them installed in their homes. The objective of this experiment/thesis is to decompose human activities into sequences of binary sensor activations by installing sensors that detect when everyday objects are being moved or used.

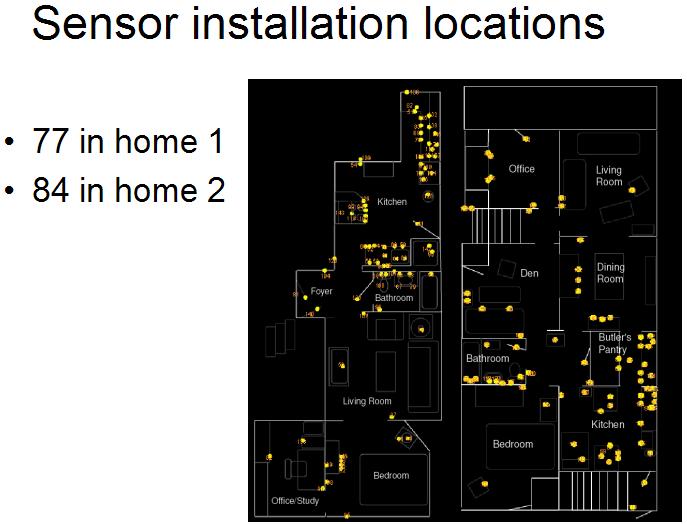

In this experiment, between 77 and 84 sensor data collection boards equipped with reed switch sensors were installed in two single-person apartments and collected data about human activity for two weeks. The sensors were installed in everyday objects such as drawers, refrigerators, and containers to record opening and closing events (activation and deactivation events) as the subject carried out everyday activities.

Sensor installation examples

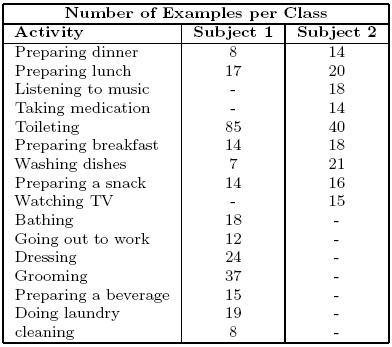

Activities labeled for this experiment

CLICK HERE FOR AN EXAMPLE OF THE SENSOR ACTIVATIONS FOR A DAY

Project Ideas

Some quick project ideas to get you started thinking about the problem:

1) Run different algorithms to recognize activities

2) Cluster the sensor activations to predict possible activities

3) Measure changes in human behavior from day to day (what is the right distance measure to use?)
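As one possible starting point for idea 3, the sketch below (written in Python, not part of the provided Matlab support code) summarizes each day as a vector of activation counts per sensor and compares two days with cosine distance. Cosine distance is only one candidate measure, picked here for illustration; the `events` pairs and the helper names `daily_counts` and `cosine_distance` are made up for this example.

```python
from collections import Counter
from math import sqrt


def daily_counts(events):
    """Map each day to a Counter of activations per sensor.

    `events` is an iterable of (year_day, sensor_id) pairs.
    """
    per_day = {}
    for year_day, sensor_id in events:
        per_day.setdefault(year_day, Counter())[sensor_id] += 1
    return per_day


def cosine_distance(a, b):
    """Return 1 - cosine similarity between two sparse count vectors."""
    dot = sum(a[k] * b[k] for k in a.keys() & b.keys())
    norm_a = sqrt(sum(v * v for v in a.values()))
    norm_b = sqrt(sum(v * v for v in b.values()))
    if norm_a == 0 or norm_b == 0:
        return 1.0  # treat a day without activations as maximally different
    return 1.0 - dot / (norm_a * norm_b)


# Hypothetical (year_day, sensor_id) events, for illustration only.
events = [(86, 100), (86, 101), (86, 100),
          (87, 100), (87, 101), (87, 100),
          (88, 104)]
counts = daily_counts(events)
print(cosine_distance(counts[86], counts[87]))  # identical usage pattern
print(cosine_distance(counts[86], counts[88]))  # no sensors in common
```

A count vector ignores the order and timing of activations, so a measure that also compares when sensors fire during the day may capture behavior changes that this one misses.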

Matlab dataset

The Matlab dataset consists of one matrix containing the sensor information and one matrix containing the activity information. Matlab support code for loading and plotting the sensor data, and for some basic exploration of the data, is also provided.

Sensor data

sensor_data: is a matrix containing the information from all the sensors for the whole duration of the study (~2 weeks) in the following format

[year_day week_day activation deactivation interval sensor_id location type]

year_day: is a number representing the day of the year. This number can be converted to the month, day, and year format using the provided Matlab function [month,day,year] = yearday2date(year_day,year). The year for this dataset is 2003. Usage example: [month,day,year] = yearday2date(88, 2003)

week_day: is a number between 1 and 7 specifying the day of the week (1=Monday, 7=Sunday).

activation: represents the sensor activation time in seconds starting from 12:00am. To convert the time to the hour, min, sec format, use the provided function [hour,min,sec] = s2hms(seconds). Usage example: [hour,min,sec] = s2hms(57052)

deactivation: represents the sensor deactivation time in seconds starting from 12:00am. To convert the time to the hour, min, sec format, use the provided function [hour,min,sec] = s2hms(seconds). Usage example: [hour,min,sec] = s2hms(57052)

interval: represents the time in seconds that the sensor was activated. To convert the time to the hour, min, sec format, use the provided function [hour,min,sec] = s2hms(seconds). Usage example: [hour,min,sec] = s2hms(57052). WARNING: this information is noisy due to the nature of the sensors, so do not completely trust it.

sensor_id: is a number representing the sensor ID. Each sensor has its own unique ID.

location: is a number representing the location of the sensor (room) in the house. To find out the room label, use the locations cell provided. For example, to find out the location of a sensor with location number 2, execute locations{2}, which returns 'kitchen'.

type: is a number representing the household object in which the sensor was installed. To find out the object label, use the types cell provided. For example, to find out the installation object for a sensor with type number 5, execute types{5}, which returns 'couch'.
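For readers working outside Matlab, the sketch below shows in Python how one row in the documented column order could be decoded. It is not the provided support code: `yearday_to_date` and the Python `s2hms` are stand-ins that assume day 1 is January 1 and that times count seconds from midnight, the two lookup dictionaries contain only the labels quoted above, and the sample row is hypothetical.

```python
from datetime import date, timedelta


def yearday_to_date(year_day, year):
    """Convert a day-of-year number to a date (assumes January 1 is day 1)."""
    return date(year, 1, 1) + timedelta(days=year_day - 1)


def s2hms(seconds):
    """Split seconds since midnight into (hour, minute, second)."""
    hour, rest = divmod(int(seconds), 3600)
    minute, second = divmod(rest, 60)
    return hour, minute, second


# Only the two labels quoted in the text are known here; the real
# `locations` and `types` cells hold more entries.
LOCATIONS = {2: "kitchen"}
TYPES = {5: "couch"}


def describe(row, year=2003):
    """Turn one sensor_data row into a readable dictionary.

    Column order follows the documented format:
    [year_day week_day activation deactivation interval sensor_id location type]
    """
    (year_day, week_day, activation, deactivation,
     interval, sensor_id, location, type_) = row
    return {
        "date": yearday_to_date(year_day, year),
        "week_day": week_day,
        "activation": "%02d:%02d:%02d" % s2hms(activation),
        "deactivation": "%02d:%02d:%02d" % s2hms(deactivation),
        "interval_s": interval,  # noisy, see the warning above
        "sensor_id": sensor_id,
        "location": LOCATIONS.get(location, "unknown"),
        "type": TYPES.get(type_, "unknown"),
    }


# Hypothetical row, for illustration only.
row = [88, 6, 57052, 57060, 8, 51, 2, 7]
print(describe(row))
```

Under these assumptions, day 88 of 2003 maps to March 29 and 57052 seconds maps to 15:50:52; the provided Matlab functions remain the reference for the actual conventions.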

Activity Labels

activities_data: is a matrix containing all the human activity labels for the whole duration of the study (~2 weeks) in the following format

[year_day week_day start_time activity_code subcategory_index end_time indicator]

year_day: is a number representing the day of the year. This number can be converted to the month, day, and year format using the provided Matlab function [month,day,year] = yearday2date(year_day,year). The year for this dataset is 2003. Usage example: [month,day,year] = yearday2date(88, 2003)

week_day: is a number between 1 and 7 specifying the day of the week (1=Monday, 7=Sunday).

start_time: time at which the activity started, in seconds. To convert the time to the hour, min, sec format, use the provided function [hour,min,sec] = s2hms(seconds). Usage example: [hour,min,sec] = s2hms(57052).

activity_code: is a number representing the scheme code for the activity. A coding scheme maps human activities to numbers so that activities are easier to label and represent. You can use either this number or the subcategory_index to represent your activity classes.

subcategory_index: is a number that represents the activity label. The activity labels are stored in a string cell called classes. For example, to recover the label of the first activity execute activity = classes(activities_data(1,5),3);

end_time: time at which the activity ended, in seconds. To convert the time to the hour, min, sec format, use the provided function [hour,min,sec] = s2hms(seconds). Usage example: [hour,min,sec] = s2hms(57052).

indicator: not used for this dataset (always one), but intended to specify whether the activity is a primary (important) activity for health-related applications.
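As a small illustration of how these columns combine, the Python sketch below sums the labeled time per activity_code. The rows are hypothetical, the helper name `time_per_activity` is made up, and the midnight wrap-around rule is an assumption rather than documented behavior.

```python
from collections import defaultdict


def time_per_activity(rows):
    """Sum the labeled duration in seconds for each activity_code.

    Each row follows the documented column order:
    [year_day week_day start_time activity_code subcategory_index end_time indicator]
    """
    totals = defaultdict(int)
    for year_day, week_day, start, code, subcategory, end, indicator in rows:
        duration = end - start
        if duration < 0:
            # Assumption: an end time earlier than the start time means
            # the activity continued past midnight.
            duration += 24 * 3600
        totals[code] += duration
    return dict(totals)


# Hypothetical rows, for illustration only.
rows = [
    [88, 6, 63036, 15, 4, 64001, 1],
    [88, 6, 70000, 10, 3, 71200, 1],
    [89, 7, 30000, 15, 4, 30300, 1],
]
print(time_per_activity(rows))
```

Grouping by activity_code rather than subcategory_index is an arbitrary choice here; either column can serve as the class label.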

Download Matlab dataset in zip format

Text dataset

Subject one: 03/27/2003

Subject two: 04/19/2003

Each directory contains the data of a different subject. The data is stored as text in comma-separated values (.csv) format. To view the files, you can use Excel or Wordpad.

Each directory contains the following files:

1) sensors.csv

file containing the sensor information in the following format:

SENSOR_ID,LOCATION,OBJECT

example:

100,Bathroom,Toilet Flush
101,Bathroom,Light switch
104,Foyer,Light switch
...
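A minimal Python sketch for reading this file into a lookup table follows. It assumes one record per line with exactly three fields and skips anything else (such as a header line, if the file has one); the function name `load_sensors` is made up for this example.

```python
import csv
import io


def load_sensors(lines):
    """Return {sensor_id: (location, object)} from sensors.csv rows."""
    sensors = {}
    for record in csv.reader(lines):
        # Skip blank lines, a possible header, and malformed records.
        if len(record) != 3 or not record[0].strip().isdigit():
            continue
        sensor_id, location, obj = (field.strip() for field in record)
        sensors[int(sensor_id)] = (location, obj)
    return sensors


# The three example records shown above.
sample = io.StringIO(
    "100,Bathroom,Toilet Flush\n"
    "101,Bathroom,Light switch\n"
    "104,Foyer,Light switch\n"
)
sensors = load_sensors(sample)
print(sensors[100])
```

To read the real file, pass an open file object instead of the sample, for example `open("sensors.csv", newline="")`.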

2) activities.csv is the file containing all the activities analyzed, in the following format:

Heading,Category,Subcategory,Code

example:

Employment related,Employment work at home,Work at home,1
Employment related,Travel employment,Going out to work,5
Personal needs,Eating,Eating,10
Personal needs,Personal hygiene,Toileting,15

3) activities_data.csv is the data of the activities in the following format:

ACTIVITY_LABEL,DATE,START_TIME,END_TIME
SENSOR1_ID, SENSOR2_ID, ......
SENSOR1_OBJECT,SENSOR2_OBJECT, .....
SENSOR1_ACTIVATION_TIME,SENSOR2_ACTIVATION_TIME, .....
SENSOR1_DEACTIVATION_TIME,SENSOR2_DEACTIVATION_TIME, .....

where date is in the mm/dd/yyyy format, time is in the hh:mm:ss format.

NOTE: ACTIVITY_LABEL = Subcategory

example:

Toileting,17:30:36,17:46:41
100,68
Toilet Flush,Sink faucet - hot
17:39:37,17:39:46
18:10:57,17:39:52
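Because each activity in activities_data.csv spans five lines, a reader has to group lines before splitting fields. The Python sketch below is one way to do that, under several assumptions: blank lines carry no meaning, object names contain no commas, and the header line may or may not include the DATE field (the example on this page omits it). The names `parse_time` and `parse_records` are made up for this example.

```python
def parse_time(text):
    """Convert hh:mm:ss to seconds since midnight."""
    hours, minutes, seconds = (int(part) for part in text.strip().split(":"))
    return hours * 3600 + minutes * 60 + seconds


def parse_records(lines):
    """Yield one dictionary per five-line activity record."""
    rows = [line.strip() for line in lines if line.strip()]
    for i in range(0, len(rows) - 4, 5):
        header = [field.strip() for field in rows[i].split(",")]
        # The last two header fields are the start and end times; a DATE
        # field, when present, sits between the label and the times.
        label, start, end = header[0], header[-2], header[-1]
        record_date = header[1] if len(header) == 4 else None
        ids = [int(x) for x in rows[i + 1].split(",")]
        objects = [x.strip() for x in rows[i + 2].split(",")]
        on_times = [parse_time(x) for x in rows[i + 3].split(",")]
        off_times = [parse_time(x) for x in rows[i + 4].split(",")]
        yield {
            "label": label,
            "date": record_date,
            "start": parse_time(start),
            "end": parse_time(end),
            # One (id, object, activation, deactivation) tuple per sensor.
            "sensors": list(zip(ids, objects, on_times, off_times)),
        }


# The example record shown above.
sample = """Toileting,17:30:36,17:46:41
100,68
Toilet Flush,Sink faucet - hot
17:39:37,17:39:46
18:10:57,17:39:52
"""
records = list(parse_records(sample.splitlines()))
print(records[0]["label"], records[0]["sensors"])
```

In this example the deactivation time of sensor 100 falls after the end of the labeled activity, so sensor times are best treated as noisy rather than strictly bounded by the activity interval.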

Download text dataset in zip format

References

For references or more information about this work, please refer to the following:

E. Munguia Tapia, S. S. Intille, and K. Larson, "Activity recognition in the home setting using simple and ubiquitous sensors," in Proceedings of PERVASIVE 2004, vol. LNCS 3001, A. Ferscha and F. Mattern, Eds. Berlin Heidelberg: Springer-Verlag, 2004, pp. 158-175. [PDF]

E. M. Tapia, "Activity recognition in the home setting using simple and ubiquitous sensors," M.S. thesis, Media Arts and Sciences, Massachusetts Institute of Technology, September 2003. [PDF]

E. M. Tapia, N. Marmasse, S. S. Intille, and K. Larson, "MITes: Wireless portable sensors for studying behavior," in Proceedings of Extended Abstracts Ubicomp 2004: Ubiquitous Computing, 2004. [PDF]

Send any questions or comments to Emmanuel Munguia Tapia [emunguia -at- mit -dot- edu]