by Chrisoula Kapelonis, Lucas Cassiano, Anna Fuste, Laya Ansu, Nikhita Singh

SEA is a spatially aware wearable that uses temperature differentials to let the user feel, in real time, the heat map of their interactions with others in space. By examining a user’s emotional responses to conversations with people and by providing feedback on a relationship using subtle changes in temperature, SEA allows for more aware interactions between people.

Emotional responses are analyzed and interpreted through the voice and the body. We used a Peltier module as the base for the temperature change, and BLE to get the location of users. An iPhone app was also developed to give users a more detailed view of how their relationships have changed over time (the app lets people examine their emotional responses to others at a day-to-day level). Ultimately, SEA is a wearable that attempts to help people be more mindful of their own actions and responses in relationships and tries to foster and nurture healthier interactions.

______________________________________________________

Idea & Vision

Emotional awareness and relationship nurturing

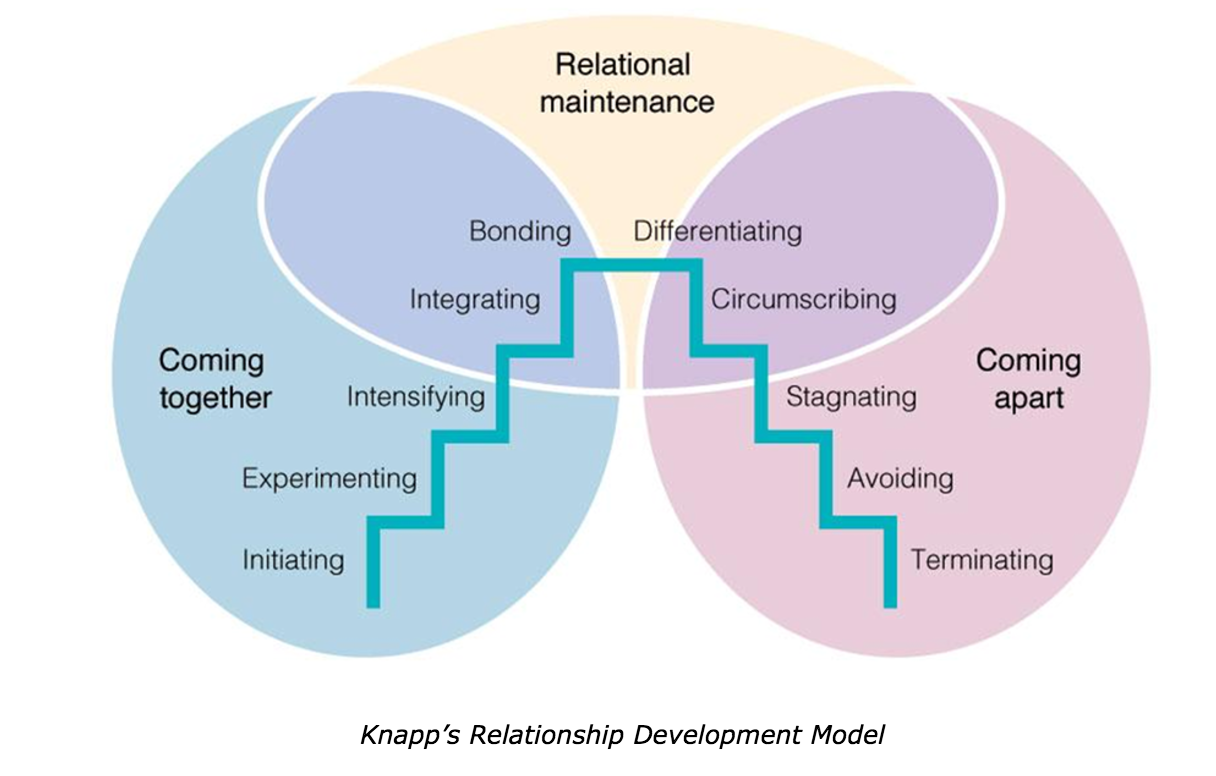

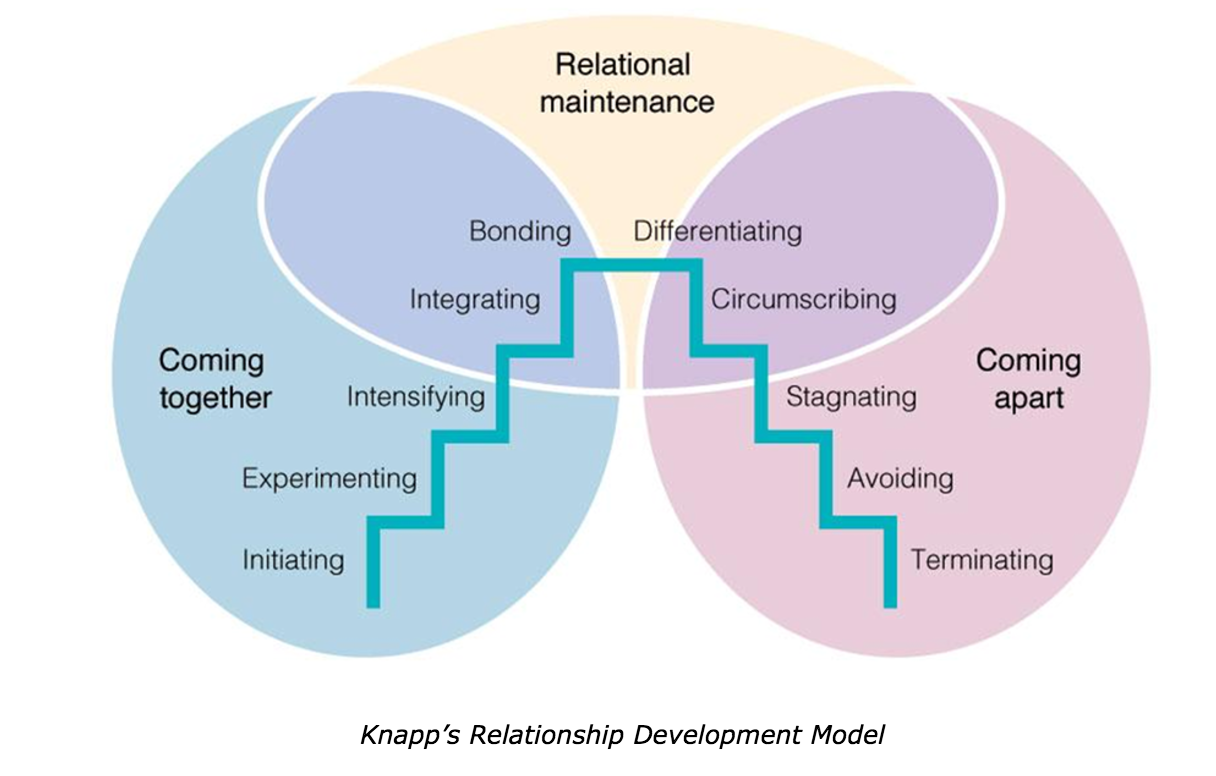

Today, we communicate with others through interpersonal interactions and digital exchanges. Over time, these interactions develop into relationships that fall on a spectrum from positive to negative and everywhere in between. Relationships form after repeated interactions, and it is the reinforcement of the qualities of these exchanges that determines the baseline state of that relationship: whether it is positive, neutral, or negative. Awareness of these nuances is important for a person to understand their dynamics with another person, and how they can and should further interact with them. Knapp’s relationship development model is the reference point on which we have based our relationship metrics. The model defines relationships in ten stages: five relating to relationship escalation, and the remaining five to relationship termination.

The Relationship Escalation Model consists of: Initiating, Experimenting, Intensifying, Integrating, and Bonding.

Initiating: This is the stage in which people first meet. It’s typically very short, a moment where the interactants use social conventions or greetings to elicit favorable first impressions. This is a neutral state, and one where there is no prior interaction on which to base a baseline.

Experimenting: In this stage, the interactants try to engage in conversation by asking questions and talking to each other to understand if this is a relationship worth continuing and expanding. Many relationships stay at this point if they are passive, or simply not worth continuing. This is also a neutral state, but it is a stage from which a relationship can go forward, stay neutral, or start to lean into the negative range.

Intensifying: This is the stage where people start to get more comfortable with each other, and claims about the commitment of the relationship start to be conveyed. In this stage, formalities start to break down, and more informal interactions are favored. Here, people are starting to be perceived as individuals.

Integrating: At this point, the interactants become a pair. They begin to interact often, do things together, and share a relational identity. People at this point understand their friendship has reached a higher level of intimacy.

Bonding: This is the highest level of the coming together phase of a relationship. It is the moment where there is a formal declaration of the relationship such as “romantic partner,” “best friend,” or “business partner.” This is the apex of relationship intimacy so very few relationships in an individual’s life actually reach this level.

The Relationship Termination Model consists of: Differentiating, Circumscribing, Stagnating, Avoiding, and Terminating:

Differentiating: In this stage, individuals start to assert their independence. They develop different activities or hobbies and the two actively start to differentiate themselves instead of associate. In this stage it is not too difficult to turn the relationship around.

Circumscribing: Here, communication between the individuals starts to decay. There is an avoidance of certain topics of discussion, in order to prevent further aggression. Here the opportunities for revival are still plenty.

Stagnating: This is the stage where the individuals involved avoid communication, and especially topics revolving around the relationship.

Avoiding: In this stage, the interactors physically avoid each other, and reduce the opportunities for communication.

Terminating: This is the final stage of the termination model. It is the moment when the individuals decide to end the relationship. It can happen either negatively or positively, depending on the context.

It has been shown that participation in positive social relationships is beneficial for health. Lack of social ties has been correlated with a higher risk of death when compared to populations with many social relationships. Social relationships affect health in three major ways: behavioral, psychosocial, and physiological. Behaviorally, strong ties encourage healthy behaviors because they reinforce good habits, whereas negative ties reinforce bad habits and so have a negative influence. With regard to psychosocial mechanisms, good relationships provide mental support, personal control and symbolic norms, all of which promote good mental health. Negative or toxic ties erode mental health because they degrade these pillars. Finally, physiologically, social processes have a direct relationship with physiological ones such as cardiovascular health and endocrine and immune function. Thus, the positive or negative character of our everyday interactions has a significant influence on our health and well-being.

Digital interactions

Through the adoption of social media and digital communication devices, our relationships have evolved beyond merely face-to-face interactions and diversified to develop further without the person being present. This allows our digital interfaces to gather data about the specific dynamics of a relationship, and start to make inferences about its state on the spectrum. Our social platforms have now started ranking these relationships over time by bringing positive, more frequented ones to the forefront (such as “Favorites” in Facebook Messenger, or Instagram’s feed algorithm bringing your favorite accounts to the top), and showing those that are less important to the user less frequently, or never. This has led to users becoming passively aware of their relationship dynamics.

But for in-person interactions, we only have our perceived emotions as indicators of relationship state. Emotional response is subjective, and often very difficult to decode and interpret in the moment of interaction. Our feelings toward a relationship dictate how we act in the interaction, which greatly influences both how the other person reacts to us and our perception of them. In real time, because we are governed by our emotional filter on a situation, it is difficult to understand or control how we are perceiving that interaction. In the long term, over repeated interactions, this also holds true. Often, we feel that a relationship is developing in a positive or negative way, but have no concrete understanding of just how much until we observe it in retrospect. There is no metric for understanding these interaction baselines, deviations, or averages. We are exploring how to use wearables to bring a new sense of awareness to the user, so that they have a more comprehensive understanding of their emotions.

Wearables and self-awareness

Wearables can allow us to understand information about ourselves through the monitoring of the body and self in situ. They are used for a range of biometric tracking needs and notification experiences. Often, though, wearables are meant to have only a singular, self-to-self relationship. They observe the body, or the communication to the body, and express information relating to that. But what if we could have social wearables that helped us be aware of our state with another person in face-to-face interactions?

Social wearables

“The next wave of wearables promises to usher in a Social Age, which is marked not necessarily by a movement away from information, but towards communication and self-expression.” -Amanda Cosco in wearable.com

This is where the intent of our project lies. The social age of wearables will allow the user to nurture relationships, grow empathy, and improve well-being and self-awareness. Our desire is to bridge the nuanced awareness of wearables, with the complications of face-to-face interactions, and design a wearable for social awareness. We hope to bridge the body and the mind, to link the internal self with the social self.

______________________________________________________

Related work: Interpreting the body and its signals

Our investigations in this project hinged deeply on the relationship between the body and the information it was telling us about the user’s emotional state. So we dove into understanding the current space of interpreting these signals, and understanding how emotions can be analyzed, understood, and conveyed on the body.

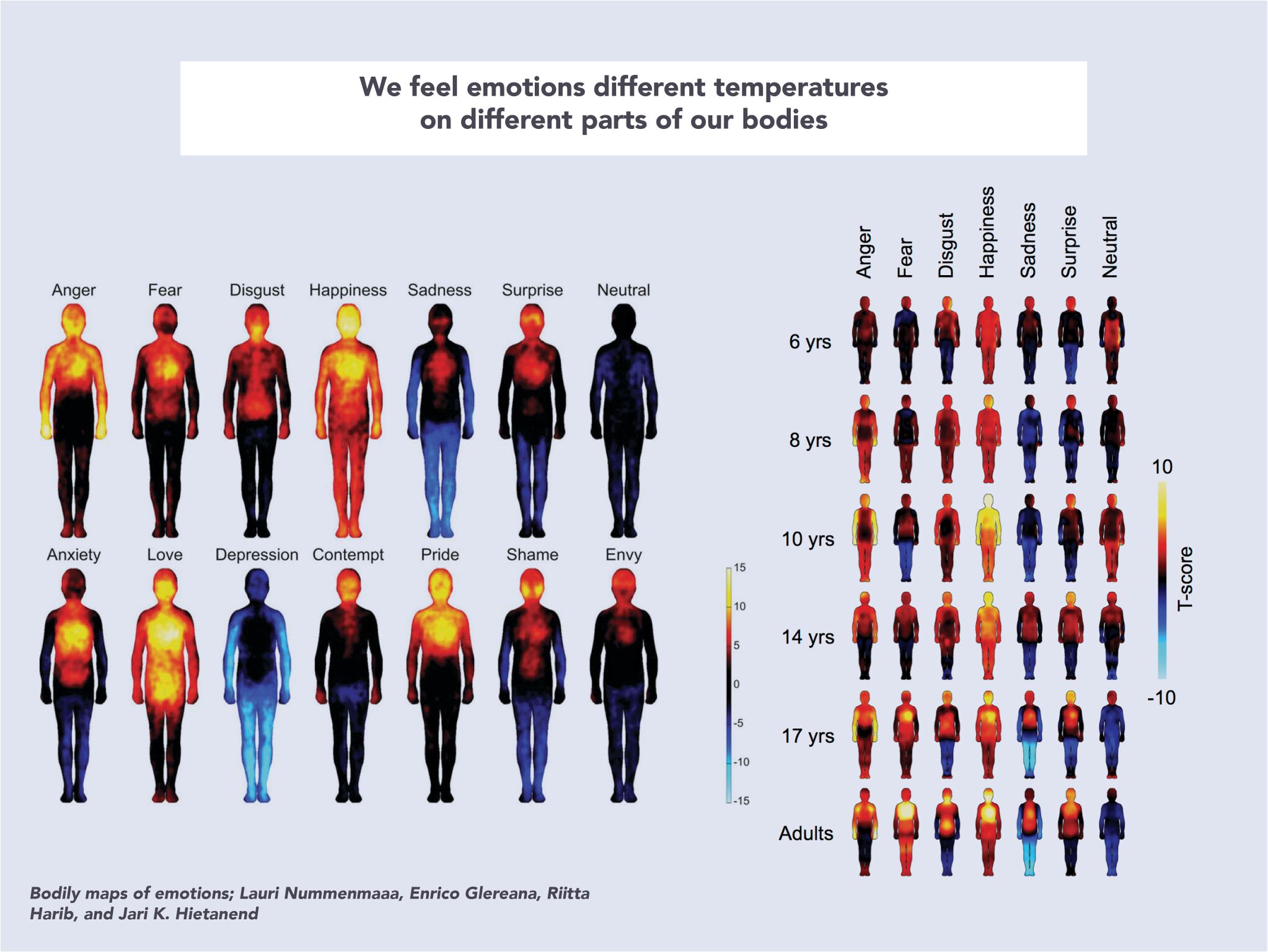

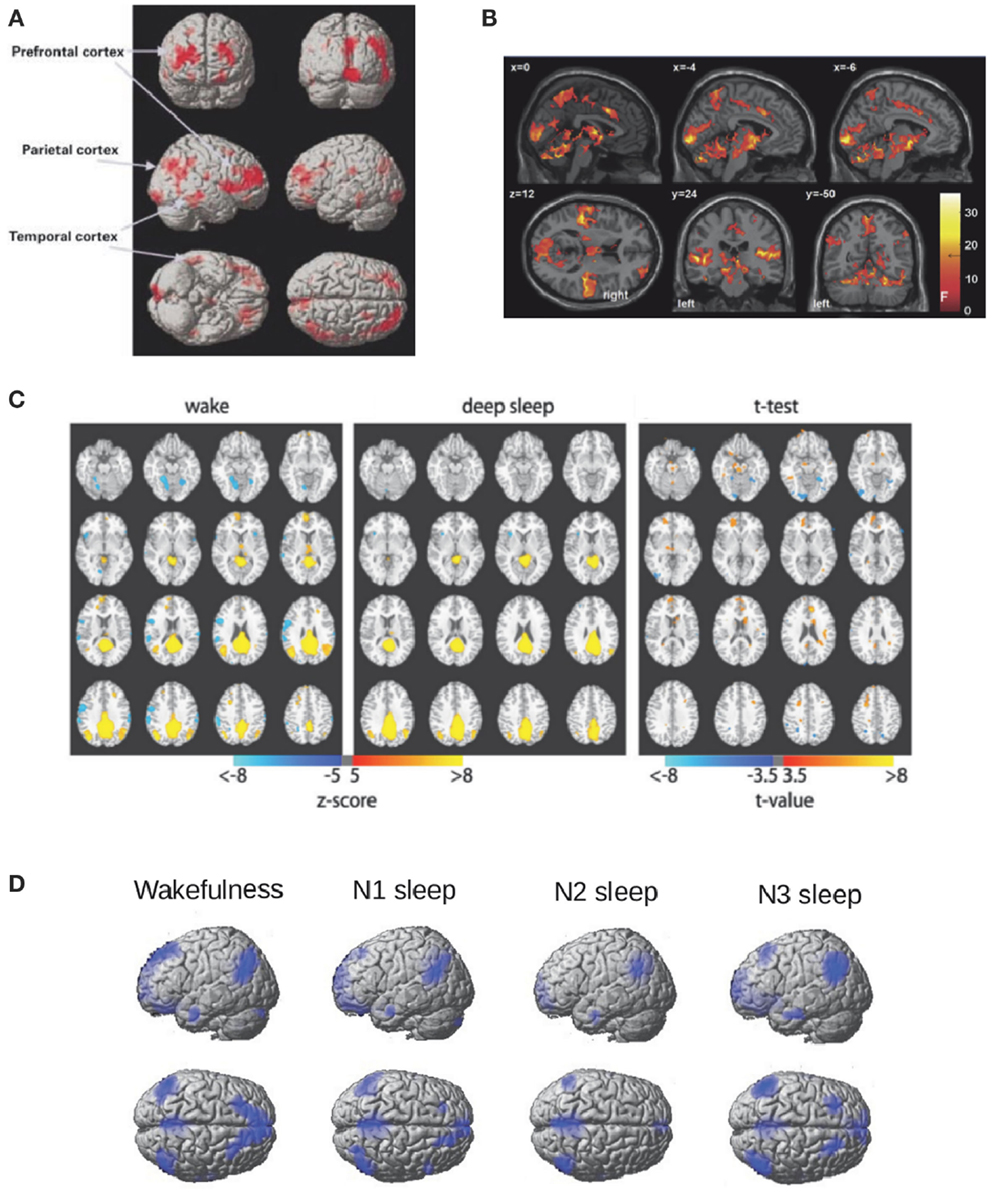

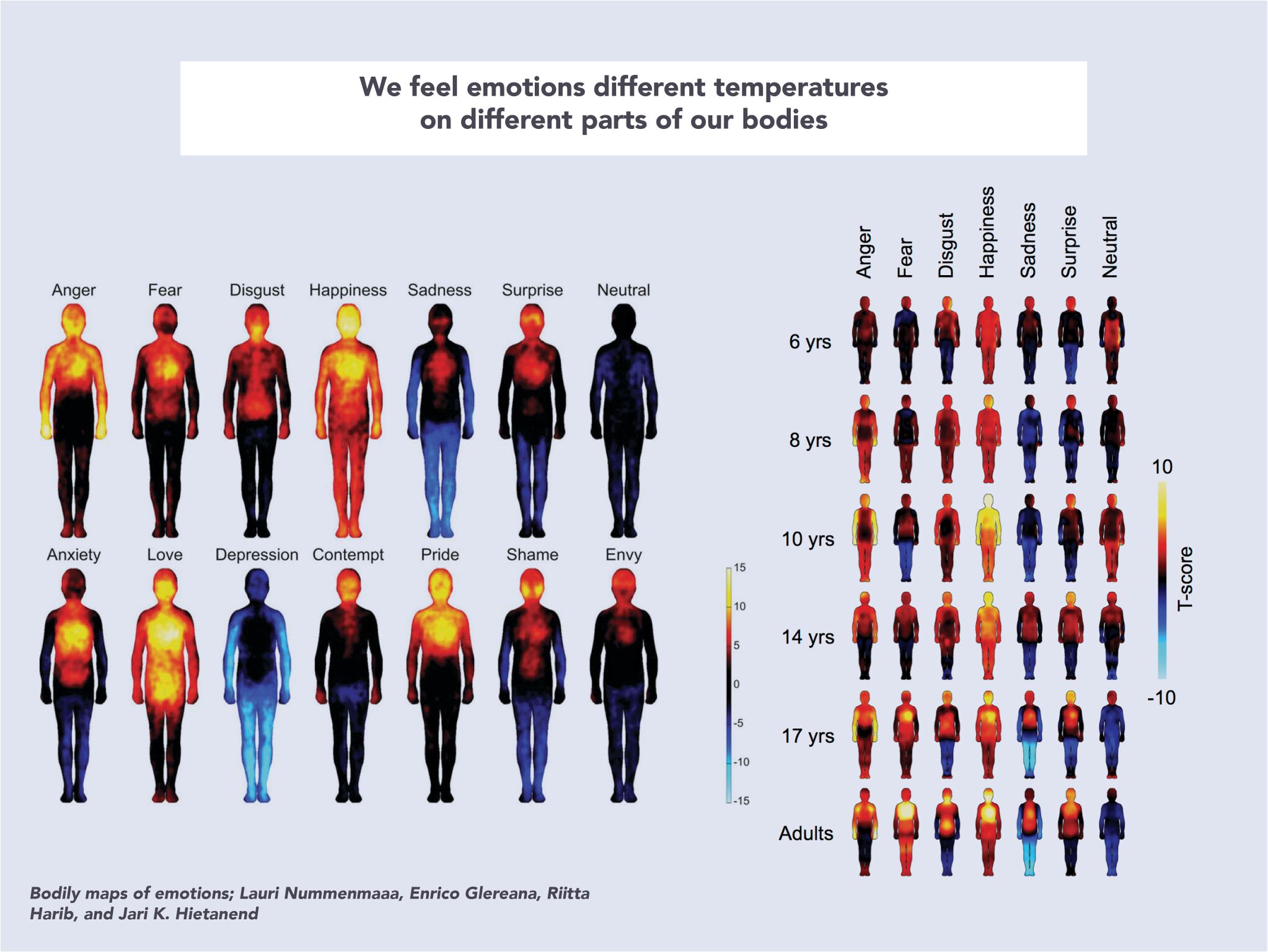

Bodily maps of emotions: relationship between temperature and emotions [9]

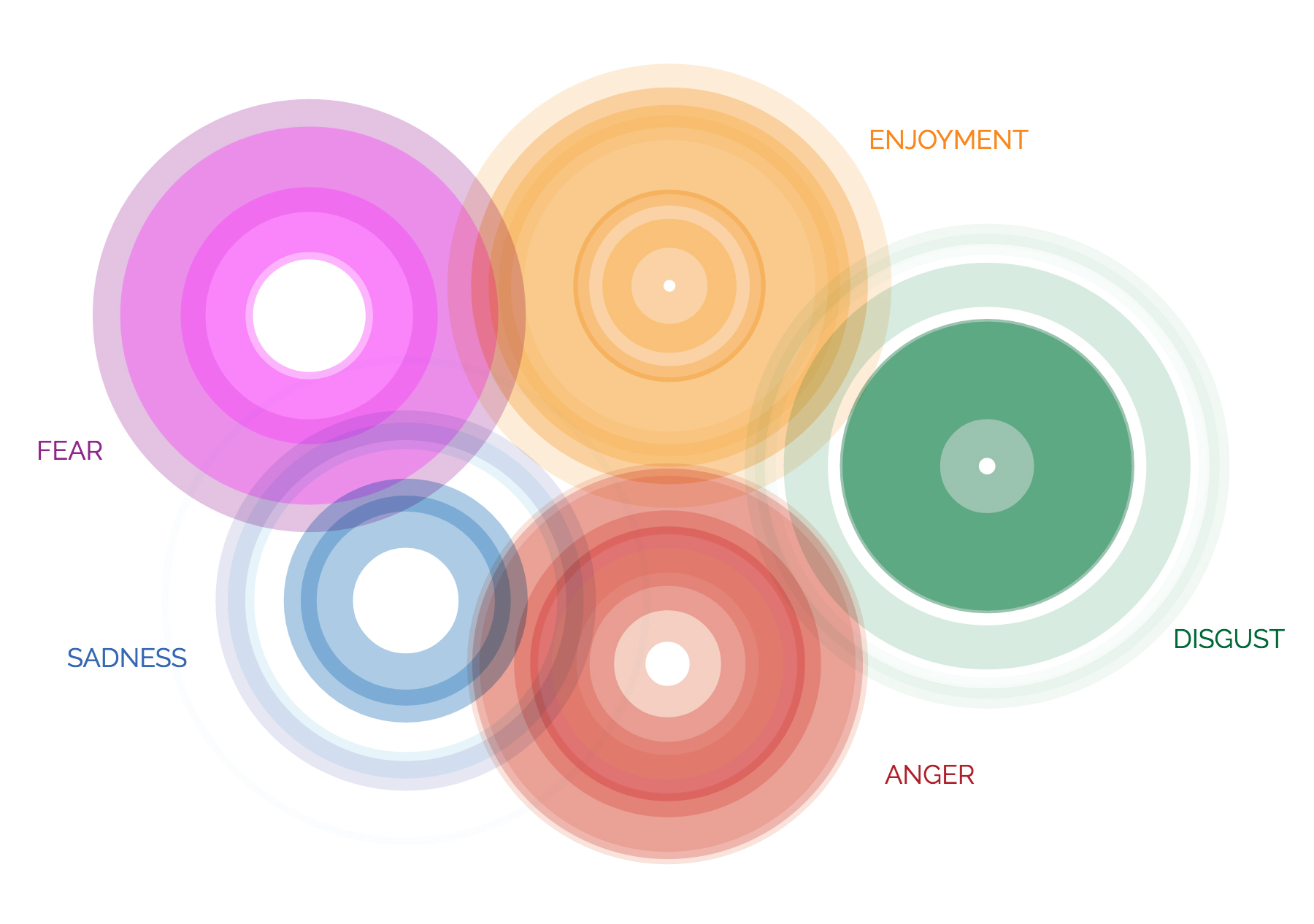

In this paper, researchers asked over 700 individuals from different countries where they felt different emotions on the body after watching clips, seeing images, and hearing snippets of audio, and mapped the answers to a human body silhouette. The researchers found that the bodily maps of these sensations were “topographically different” for different emotions such as anger, fear, happiness, disgust, sadness, and so on. Interestingly enough, the emotions were consistent across cultures. If we were to superimpose all of these sensations onto one body, we see that the chest is the location on the body where emotions are felt most. We kept this in mind when we were thinking about the form factor of our wearable.

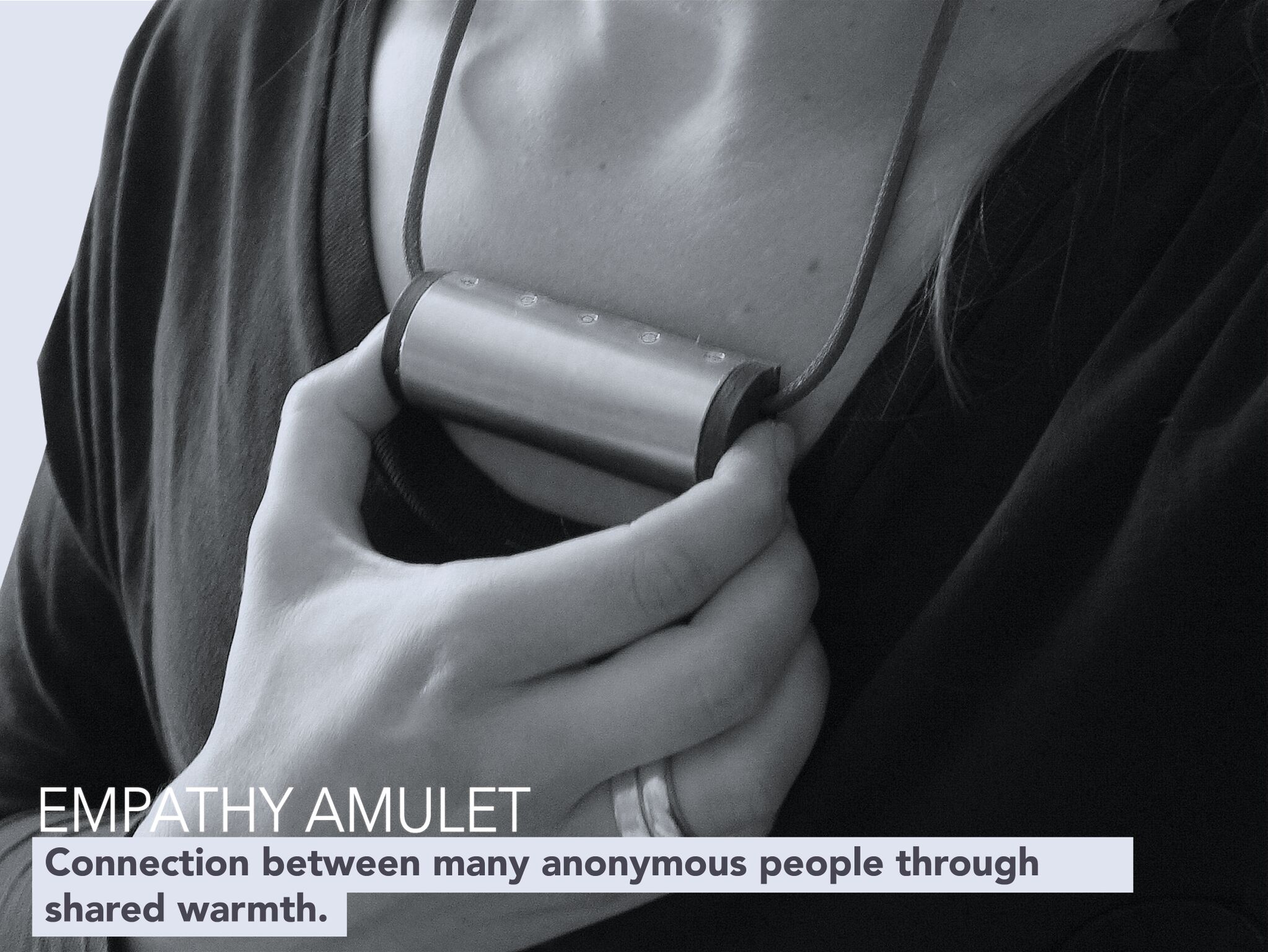

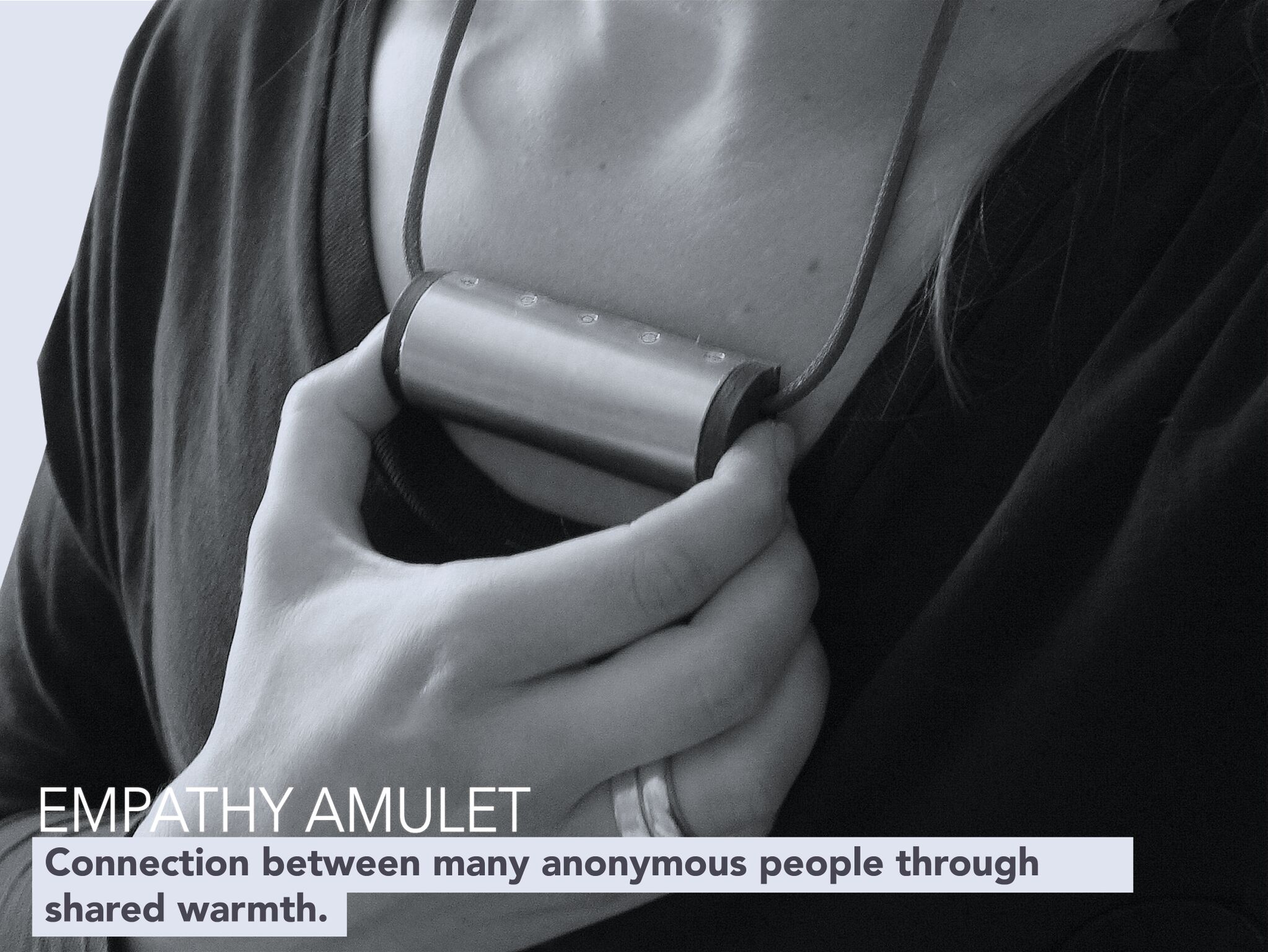

Empathy amulet: connecting people through warmth [4]

The Empathy Amulet is a project designed by Sophia Brueckner at the Fluid Interfaces group at the MIT Media Lab. It is a networked wearable device that allows anonymous users to share a moment of warmth with each other. Users hold the sides of the necklace and feel the warmth of someone else around the world who connected with them at the same time. This gives the wearer a momentary connection through temperature, joining them with another person in a moment of desired warmth or loneliness. The form factor of the project, the necklace, translated well as an interface for facilitating connection with warmth, and we were particularly inspired by this.

Fan Jersey (X): amplifying experiences [11]

Wearable Experiments has made a smart jersey that allows sports fans to feel certain physical sensations related to what has happened in the game they are watching. Fans are able to feel the actions of another, the team they root for, translated into haptic vibrations. The experience of watching a game is amplified as all fans, as part of a shared experience, are able to feel these haptic vibrations when football plays such as the 4th Down, Field Goal, and touchdown happen. An interesting aspect of this shared experience is that a mutual emotion of excitement and happiness is translated into very simple haptic vibrations. The idea of simple output from a wearable translating a complex emotion like “excitement” is very interesting, and something we also explored with SEA.

Altruis X: stress and emotion tracking for well-being [12]

Altruis X is a wrist wearable that gives the user insights on stress triggers, sleep disruptions and meditative actions. It monitors the user over an extended period of time, and provides them with useful insights: for example, a notification that email usage after 7PM disrupts their sleep, or conversations with their dad have been lowering their stress levels. This project was particularly useful to us because of the emotional awareness it provided, and also because it allowed the user to only receive the notifications they wanted, and cut out the remaining noise.

OpenSMILE: valence and arousal prediction [15]

OpenSMILE is an open-source feature extraction tool that has the ability to analyze large audio files in real time. This tool allows for extraction of audio features such as signal energy, loudness, pitch and voice quality. This analysis gives us insight on the emotional state of the person speaking, which for SEA, is crucial in understanding how they are feeling about a particular interaction. In analyzing speech, we can link meaning and emotion.

______________________________________________________

Related work: Affecting the body/mind through temperature

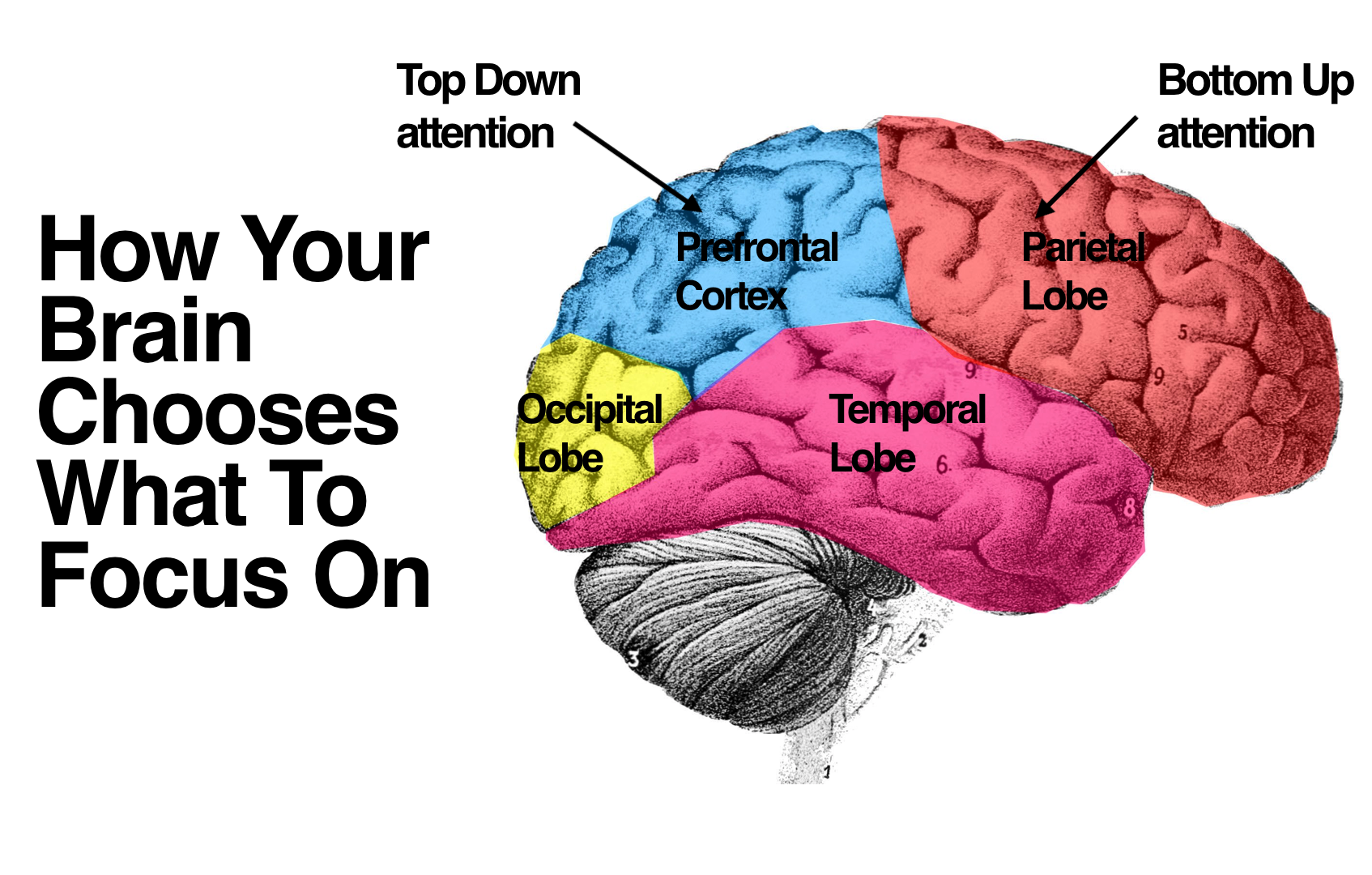

We perceive our environment through our senses, and our vision and perception of the world depend on these channels that allow us to understand what is happening around us. The body receives external stimuli and can process different kinds of inputs: visual, tactile, olfactory, etc. Each of these inputs provides us with the information we need to understand and define our own reality. The smallest change in these perceptions can make us perceive the world slightly differently and thus cause us to behave in different ways, even without us being aware. Thalma Lobel has been exploring the effects of the environment through our senses and how this can transform our actions and interactions.

For this project, we explored different sensory modalities and decided to focus on temperature on the body as a means of enhancing and modulating emotions. Various research demonstrates that temperature has a strong effect on emotions and our perception of the world. Temperature is a very strong stimulus that can enhance perception or serve as a medium for an interaction.

Cold and Lonely: Does Social Exclusion Literally Feel Cold? [2]

Zhong and Leonardelli demonstrate in this research paper how social exclusion literally feels cold. In their first experiment ‘participants who recalled a social exclusion experience gave lower estimates of room temperature than did participants who recalled an inclusion experience’. In their second experiment, subjects that were socially excluded in an online experience reported greater desire for warm food and drink than those who were included.

We can see with this work how there is a clear link between warmth and inclusion/friendliness and a link between cold and exclusion. In their own words:

‘These findings are consistent with the embodied view of cognition and support the notion that social perception involves physical and perceptual content. The psychological experience of coldness not only aids understanding of social interaction, but also is an integral part of the experience of social exclusion.’

Experiencing Physical Warmth Promotes Interpersonal Warmth [5]

In these experiments, Williams et al. explore the relationship between physical warmth and interpersonal warmth. They demonstrate how an experience of physical warmth affects the perception of another person, and the interaction one can establish with others, without the person being aware of the influence. They performed two studies to demonstrate these effects. In the first study, subjects who held a hot cup of coffee judged a target person as having a warmer personality (generous and caring). In the second study, participants who held a hot pad were more likely to choose a gift for another person instead of keeping it for themselves.

This research brings up the question: can adding a physical warmth factor to a relationship actually enhance friendliness? And does adding a cold factor to an interaction actually make it less friendly?

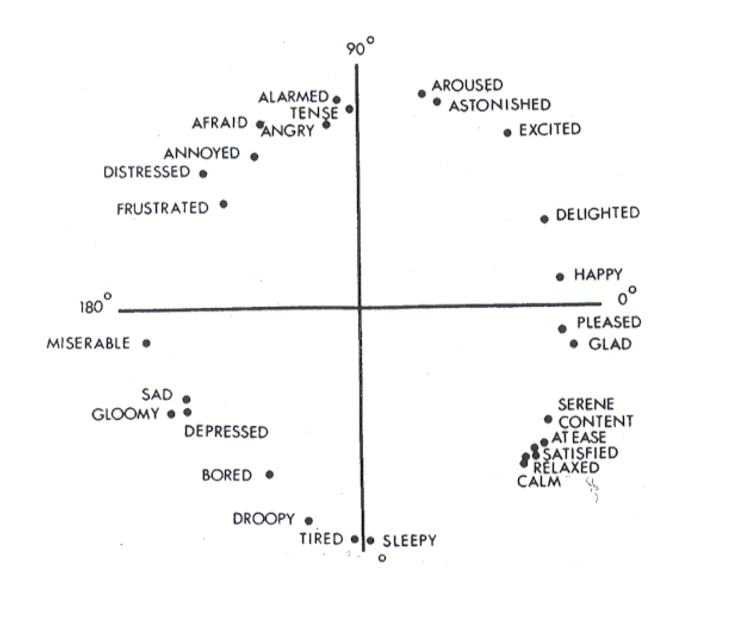

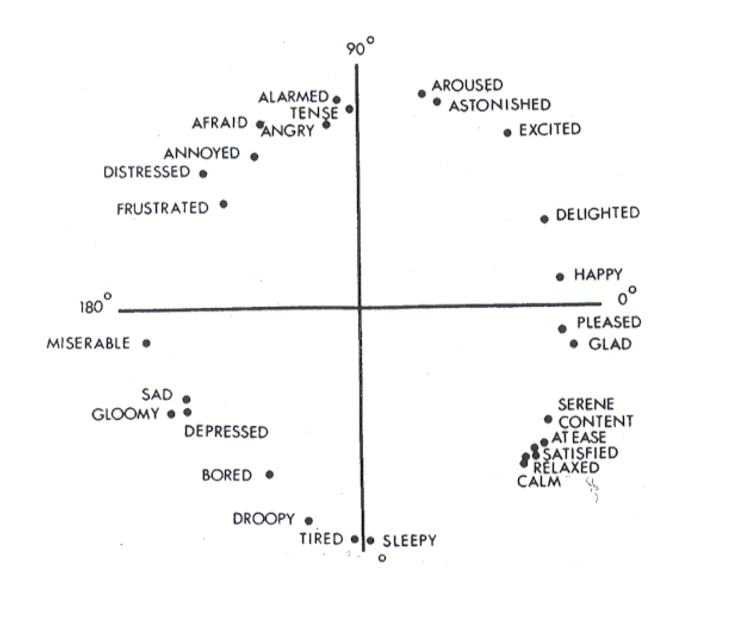

Hot Under the Collar: Mapping Thermal Feedback to Dimensional Models of Emotion [6]

Wilson et al. explore in their research how we can map thermal feedback to dimensional models of emotion so that it can be used as a tool in human-computer interaction. Based on the association between temperature and emotion in language, cognition and subjective experience, they tried to map subjective ratings of a range of thermal stimuli to Russell’s circumplex model to understand the range of emotions that might be conveyed through thermal feedback. Based on this mapping, they questioned the effectiveness of thermal feedback in translating a range of emotions and posed the question: can thermal feedback convey the full range of emotions? They concluded that the mapping of thermal feedback better fits a vector model than Russell’s circumplex model.

AffectPhone: A Handset Device to Present User’s Emotional State with Warmth/Coolness [7]

Iwasaki et al. present the AffectPhone, which is a good example of how to convey emotional state to others in a remote interaction such as talking on the phone.

The AffectPhone uses a Peltier module on the back of a mobile phone to present the user with an augmentation of their emotional state. The device tracks GSR (Galvanic Skin Response) from the user to estimate the level of arousal during a phone conversation. The levels of arousal are sent to the mobile phone of the other person in the conversation and modulate the temperature of the Peltier module. The system is designed to convey non-verbal information in an ambient manner. Bringing temperature to the forefront as awareness of emotional state can serve as a way of sensory augmentation and social interaction modulation.

Heat-Nav: Using Temperature Changes as Navigational Cues [8]

In Heat-Nav, Tewell et al. explore how temperature can be used as an interaction modality. They point out the fact that temperature changes are perceived over the course of seconds and that thermal cues are typically used to communicate single states, such as emotions.

They discuss how continuous thermal feedback may be used for real world navigational tasks and how to use temperature as a tool for spatial awareness.

Several research experiments have tried to integrate new sensory modalities in our perception system. However, as O. Deroy and M. Auvray mention in their article ‘Reading the world through the skin and ears: a new perspective on sensory substitution’, most of the systems developed have failed to live up to their goals as they assume that a sensory device can create a new sensory modality. In our case, we try to think about ways of enhancing current senses and augmenting their capabilities by expanding the input channel to the body and mind. Our aim for this project is not to create a new sensory system but rather to expand and enhance the interactions by using our existing sensory systems.

______________________________________________________

SEA – Spatial Emotional Awareness Wearable

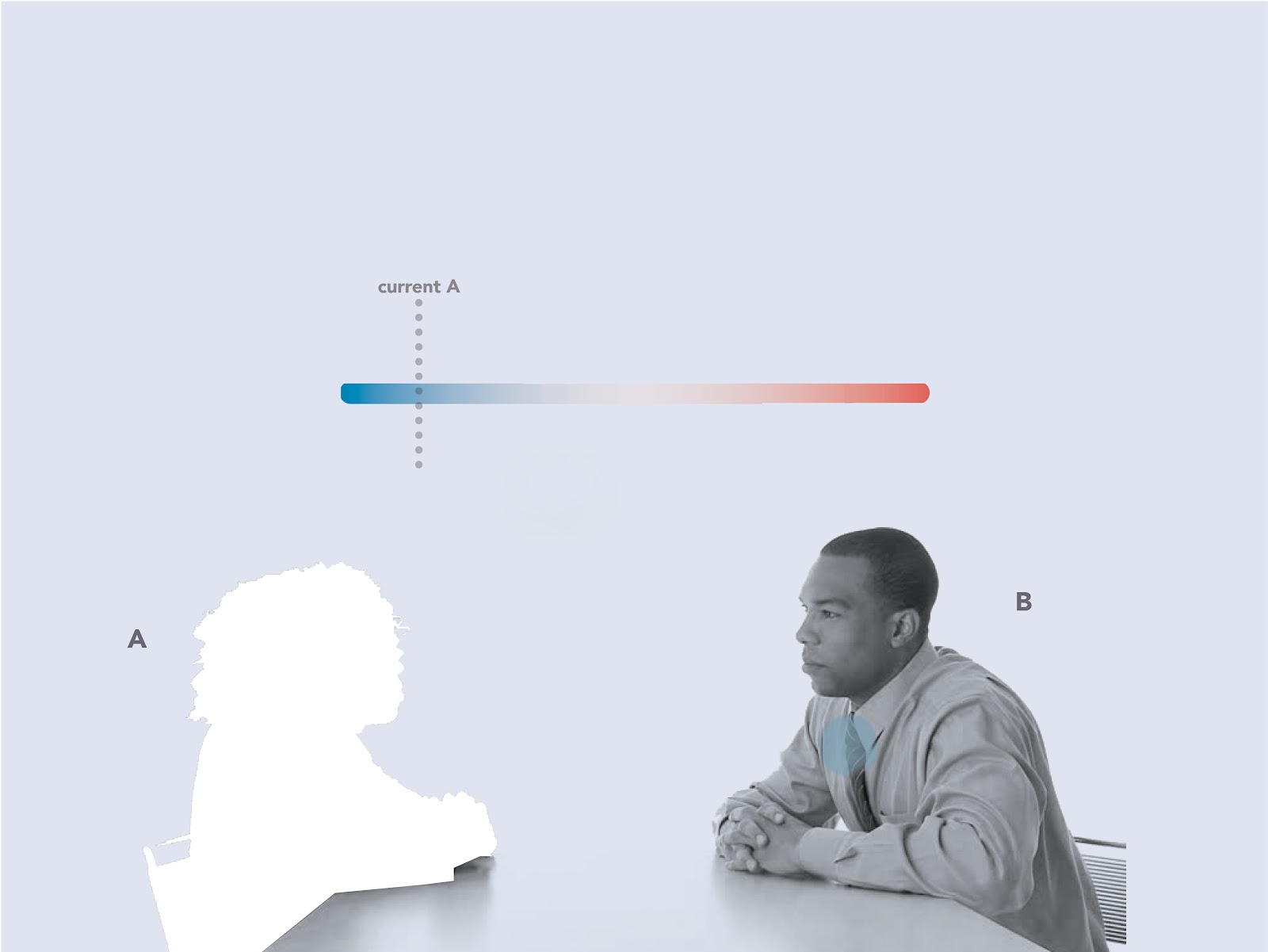

SEA is a wearable for interpersonal emotional awareness and relationship nurturing. It analyzes the wearer’s voice during in-person interactions and provides them with thermal feedback based on the warmth or coldness of that interaction. It also establishes a baseline temperature for a specific relationship based on repeated interactions, so the user can feel their friendships while navigating through space.

The spatial experience

SEA’s capabilities allow the wearer to engage in two scales of awareness: the state of a relationship baseline, and real-time conversation. Each of these scales plays a role in providing the user with useful information about relationship dynamics. One is passive, merely feeling the map of current states; the other is active, listening to and analyzing conversational data in real time.

SEA affords the wearer the opportunity to experience a heatmap of their relationships while they are navigating through space. They can feel the warmth of a good relationship around them, and can also be aware of the presence of a negative influence in their space. In environments where relationships are undeveloped, and still in the early stages of their emergence, the user will feel no temperature difference from room temperature. The device will not provide additional heat or coldness. But in spaces where there are more fully formed relationships, the wearer will feel a more defined temperature gradient that deviates from the neutral room state. This lets the user become aware of the direction of the interactions they have in a particular space. SEA becomes a thermal navigator for physical space.
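One way to picture this behaviour is as a proximity-weighted blend of per-relationship baselines. The following is only an illustrative sketch, not the SEA implementation: the warmth scale (-1 for cold to +1 for warm), the `min_interactions` threshold, the distance clamp and all function names are assumptions made for the example.

```python
from dataclasses import dataclass


@dataclass
class Relationship:
    # Per-interaction warmth scores; assumed range -1 (cold) .. +1 (warm).
    scores: list[float]


def baseline(rel: Relationship, min_interactions: int = 5) -> float:
    """Average warmth, scaled down while the relationship is still undeveloped."""
    if not rel.scores:
        return 0.0  # no history: neutral, i.e. no deviation from room temperature
    mean = sum(rel.scores) / len(rel.scores)
    # Hypothetical confidence term: few interactions keep the baseline near neutral.
    confidence = min(1.0, len(rel.scores) / min_interactions)
    return mean * confidence


def felt_offset(nearby: list[tuple[Relationship, float]]) -> float:
    """Blend the baselines of nearby people, weighting closer people more.

    `nearby` holds (relationship, distance_in_metres) pairs.
    """
    # Clamp very small distances so one person cannot dominate completely.
    weights = [1.0 / max(distance, 0.5) for _, distance in nearby]
    total = sum(weights)
    if total == 0:
        return 0.0  # nobody nearby: stay neutral
    blended = sum(w * baseline(rel) for w, (rel, _) in zip(weights, nearby))
    return blended / total
```

With these assumptions, a room full of first-time acquaintances yields an offset close to zero, while a long-standing warm or cold relationship nearby pulls the value towards +1 or -1.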

In terms of instantaneous personal relationships, you can feel when a conversation is going really well and developing past its previous state, and you can feel when a conversation is regressing.

Temperature as translator

We were trying to understand whether temperature could allow for both internal awareness (emotion) and external awareness (social and spatial). Instead of loading our wearable with every possible type of feedback (output), we attempted to create a wearable that was very subtle in the way it revealed information to a person. As discussed earlier in the related work section, the experience of temperature, both heat and coldness, can affect how people behave in interactions with others. Something as subtle as holding an iced coffee as opposed to a warm cup of coffee can change how warmly someone behaves when talking with another person. We wondered whether we could use temperature as a translator of positive or negative feelings and emotions. We wanted to focus on subtle cues that could hint at something being different in a relationship, as opposed to telling someone outright: “your relationship is suffering” or “your relationship is wonderful”. Relationships are complex: there will always be a mix of hot and cold feelings. Using a slight difference in temperature allows us to scale the perception of a relationship over time while tuning the general feeling of that relationship. We wanted to emphasize the time element of the relationship, and used temperature as a way to notice subtle deviations from what the relationship had been over time.

______________________________________________________

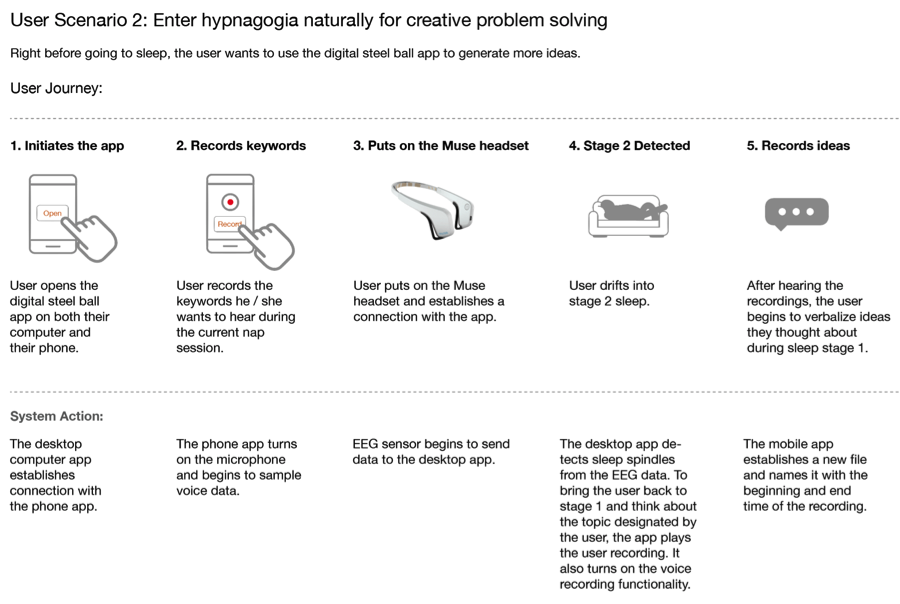

Usage Scenarios

SEA can be used in many different scenarios that reveal how a person is feeling in the context of relationships and location. Below are some usage scenarios that the interface can afford the wearer.

Find warmth when you need it

There are many times when a user is in need of close relationships to improve an emotional state. In these moments, most people seek the warmth of people they care about and who they know care about them. A wearable like SEA allows a person to move through space and feel the warmth of the people around them based on proximity. As the user navigates in one direction, they can seek the warmth presented by the presence of a close friend, and use it to help navigate to them through space. SEA allows the user to specifically find people who will support them in difficult moments, or even just on days when they are looking for a good friend to chat with.

Avoid a toxic relationship

On rare occasions, relationships head into the negative range for various reasons. Whether there was a conflict, a difference of opinion, or a difference in personality, a negative undertone is associated with the relationship. Sometimes, for legal reasons, personal reasons, or the integrity of a work environment, people tend to avoid these kinds of relationships. But sometimes they are difficult to avoid because of proximity. SEA gives the user the chance to sense when a toxic relationship is nearby, and gives them the ability to divert their path in order to avoid a bad interaction. This is mostly useful for relationships that have reached the termination phase and are far from repairable, or that are a dangerous presence.

Make a bad relationship better

Because of the time element of SEA, a user is able to feel the progression of a relationship. If the relationship has always been very warm but is going through a rough patch and starts to get colder, SEA allows a person to notice this transition. Making people aware of fluctuations in relationships provides them with the opportunity to try to do something about a potential negative change. When a person starts to feel a mildly cold presence, it makes them aware of the change and allows them to take action and repair a decaying friendship.

Group scenario

When people interact with groups of friends, SEA considers the combination of people in that setting as a group entity. Because different chemistries combine to create a different group chemistry, friends tend to act differently in specific group settings than they do with each person individually.

Understanding the temperature of an environment:

With SEA, the wearer can use temperature as a spatial navigator. The different environments we interact with on a daily basis hold different kinds of relationship dynamics. When a work setting is not the most positive environment, the user can understand the average of all interactions in that space and become aware that the environment is toxic, which could drive them to take action. The opposite is possible as well. When a user feels continuous warmth, such as in a home setting or at a dinner with friends, they can understand the supportiveness of that space, which could drive them to visit those spaces more.

Understanding the average temperature of interactions over a day:

Good and bad interactions influence your well-being and your perception about yourself. The companion app gives the user a snapshot of the average temperature of their interactions over the course of each day, so they can understand if their positive demeanor could be associated with multiple good interactions that day, or a depression was triggered by one really bad interaction that day, with very few others. This can help people keep track of their emotional well-being and take control of who they choose to interact with, and how they can help improve their states.

Feeling the relationships of another

Because SEA is customizable and detachable, users could switch their data sets by physically switching the bottom piece and inputting someone else’s data. This lets them feel the relationships and interactions another person feels in space. It allows the user to develop empathy for the other person and their experiences.

Feeling the stresses of a person on your necklace

SEA can pick up the emotions, happiness and stresses of another person, such as a spouse, allowing the user to be empathic to the experiences of the other. When the user can feel the emotional state of a person they have switched their data with, they can start to be aware of why that person is feeling as they are when they interact. For example, when a partner is having a bad day, the user can be supportive. This is especially helpful for couples who want to understand how their partner is doing, or what they are feeling.

Awareness of a negative emotion like aggression

For a person that has issues with anger or depression, SEA can help them become aware of moments when they are acting in a way that is detrimental or negative and take action to change it. For example, when a user is becoming angry or starting to become negative, they can feel the necklace becoming cold, which could take them out of that state, and give them the chance to change the course of the interaction into a more positive one.

Awareness of topics of conversation and their emotional response

Because SEA analyzes the user’s voice in the interaction, it can also indicate when a certain topic of conversation is positive or negative as well. For example, when a user gets happy talking about a project they are working on, they can feel the happiness on their chest. The same goes for a difficult topic of conversation. This gives them the opportunity to avoid the topic or confront it with someone that’s supportive. They can start to become aware when a topic of conversation makes them happy often, or makes them sad.

______________________________________________________

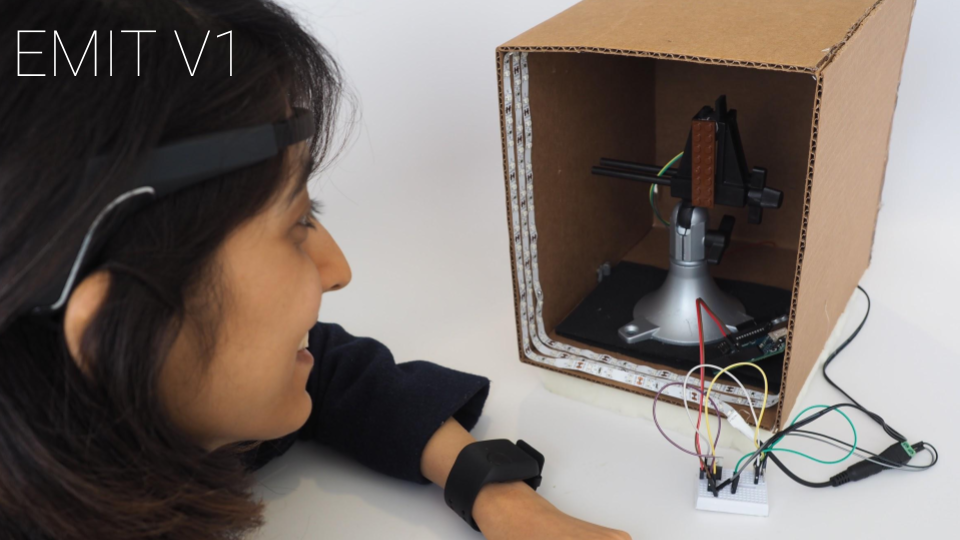

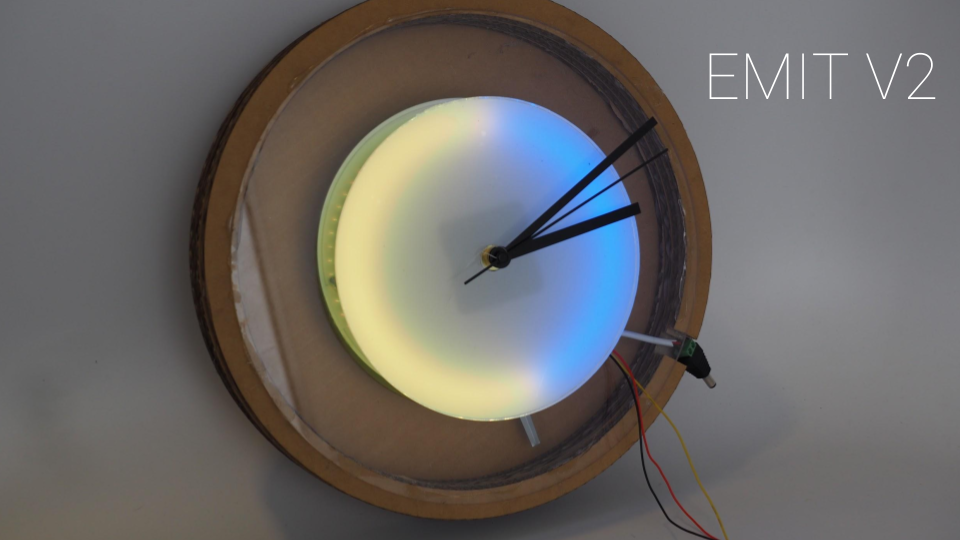

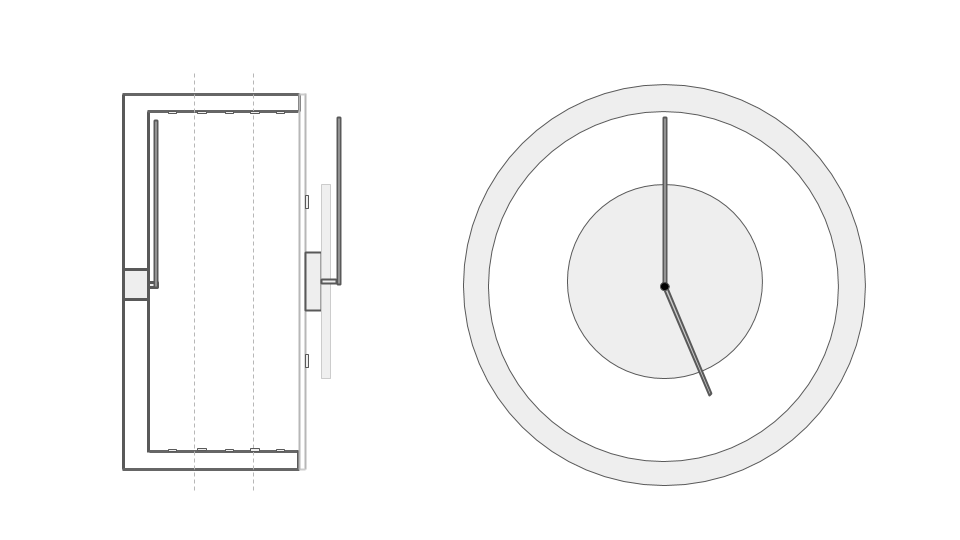

Prototype Development

Hardware

Prototype Components

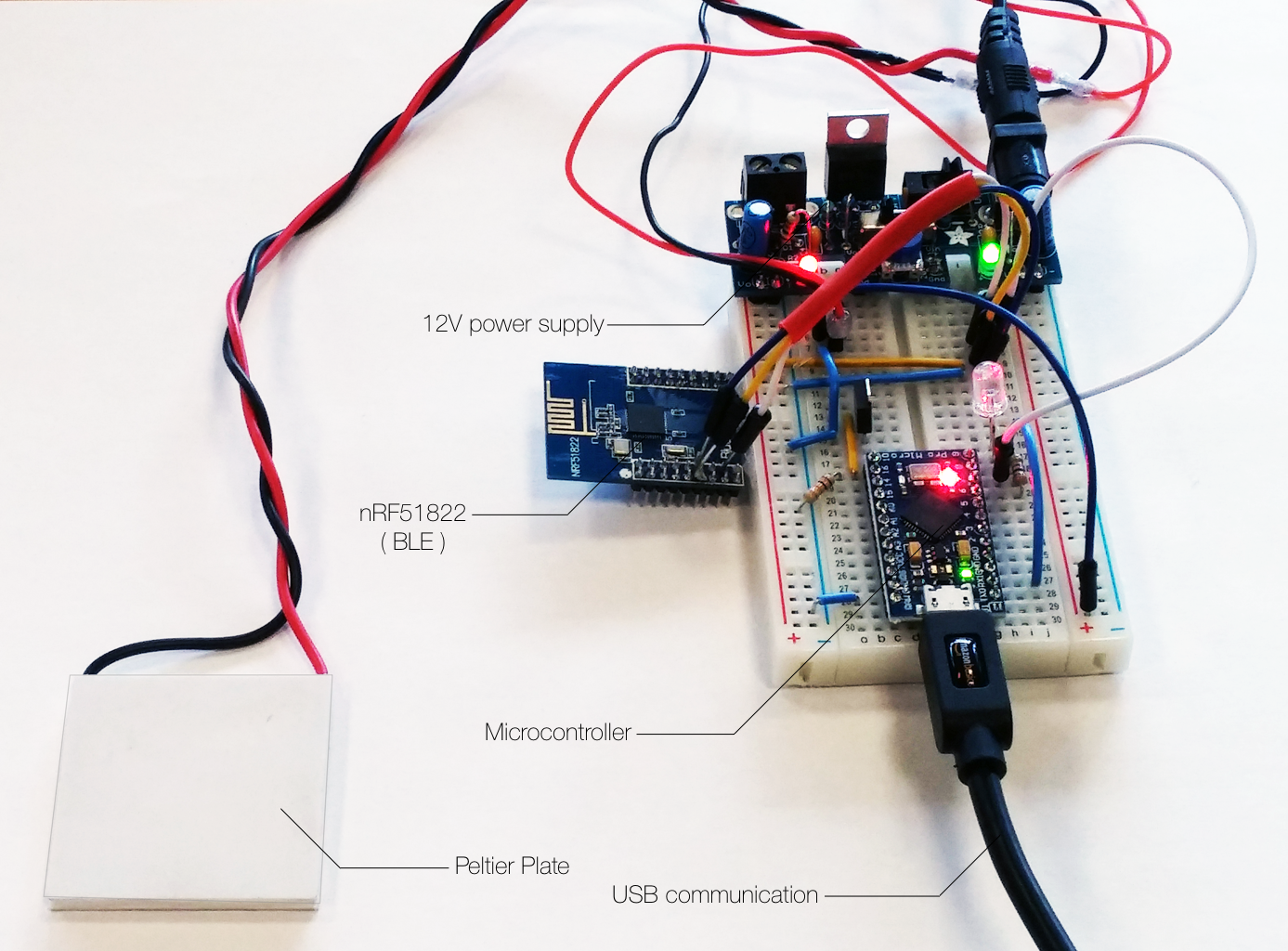

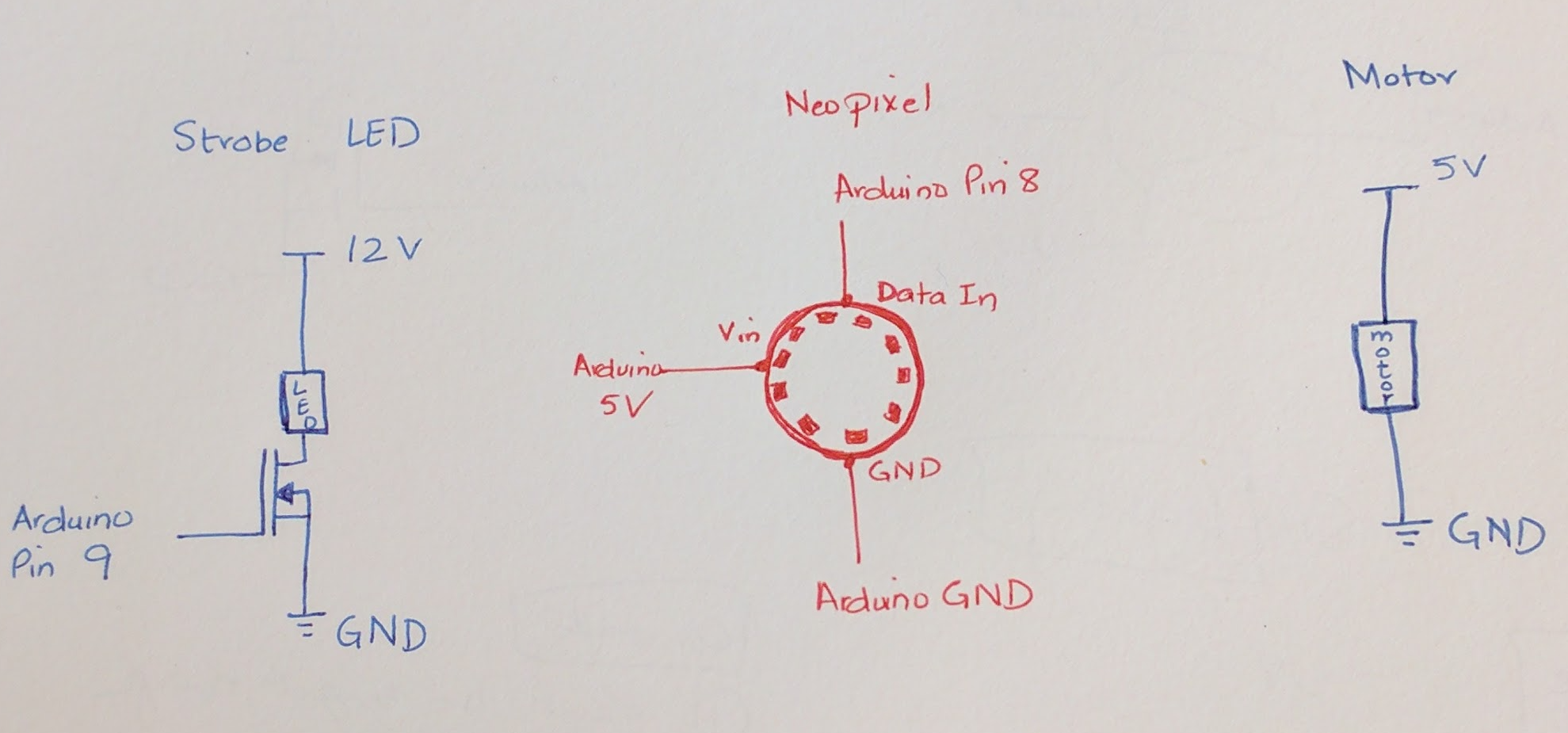

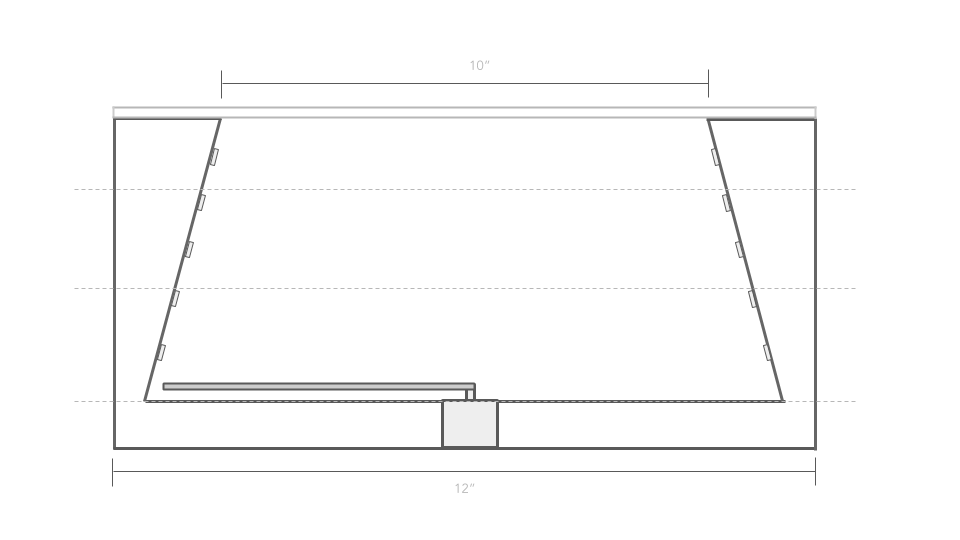

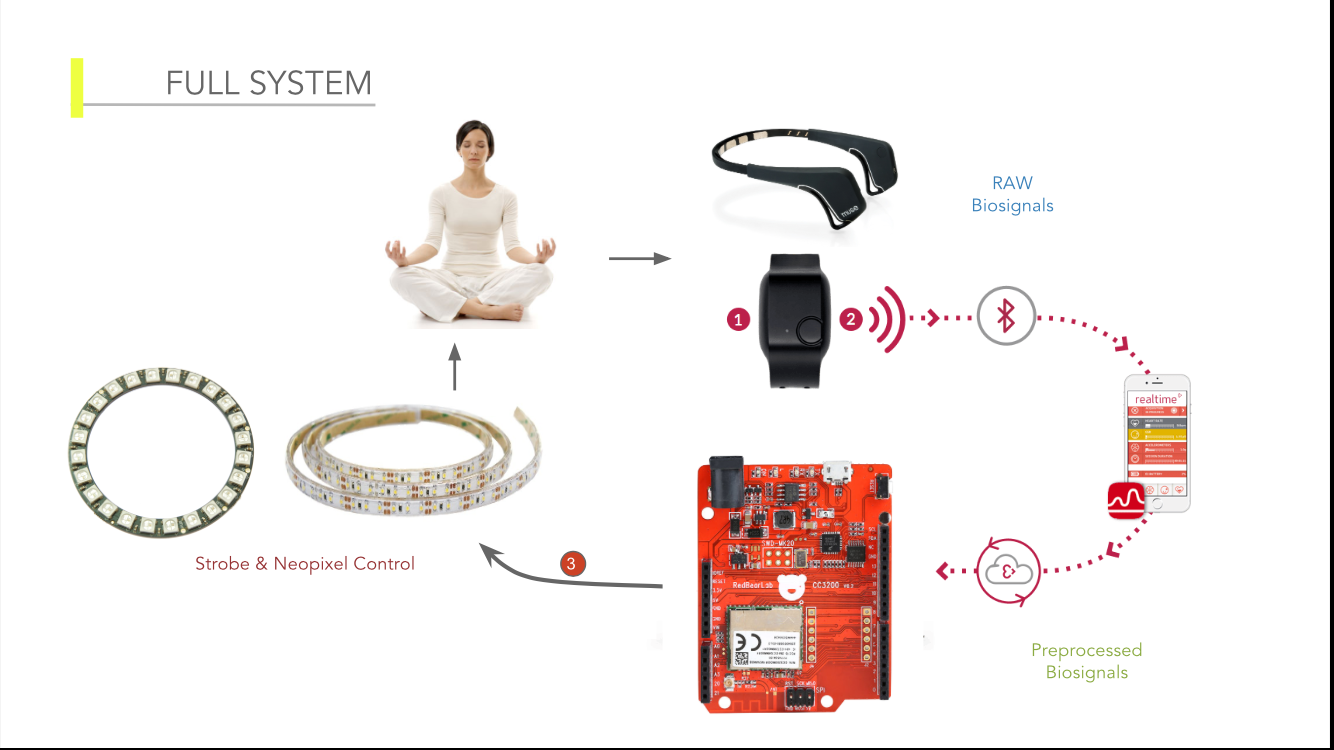

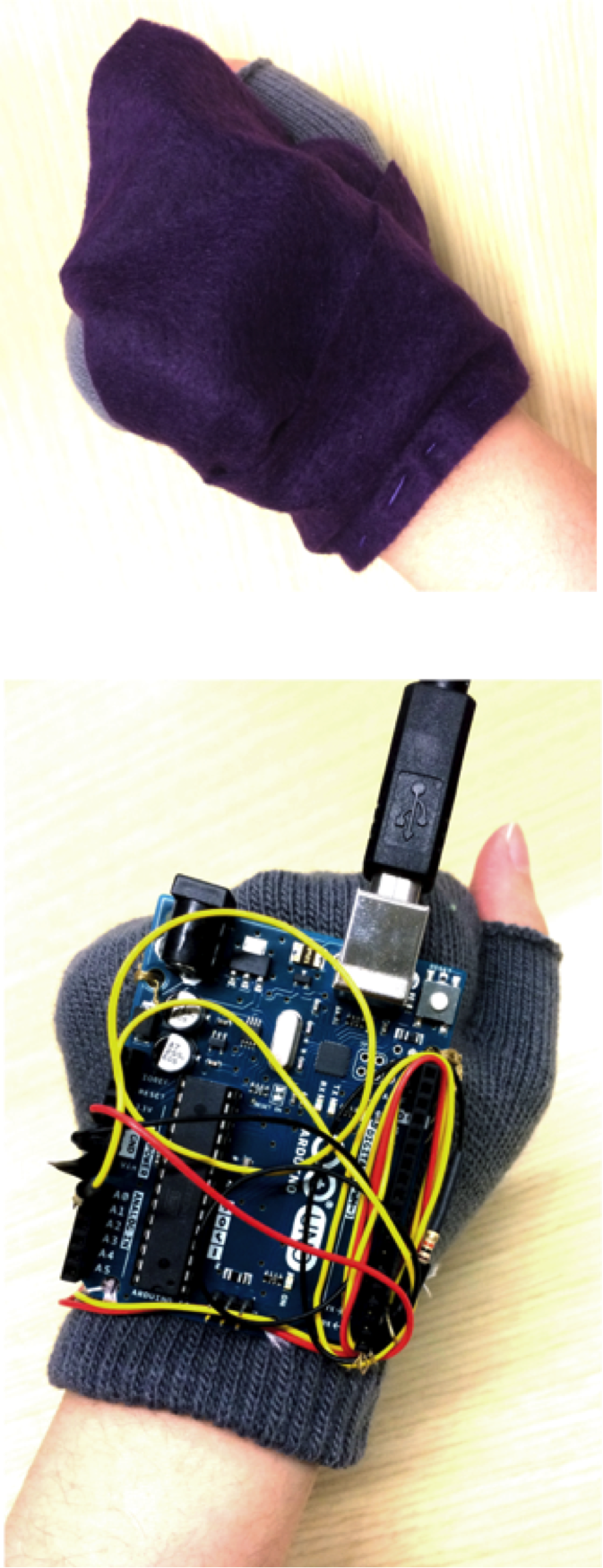

To keep the project complexity low, and to make it possible for the same circuit structure (data and electrical flow) to fit inside the SEA form factor presented here, we used one microcontroller (Arduino Micro Pro) that receives data from a Bluetooth Low Energy (BLE) module. The BLE module reads the received signal strength indicator (RSSI) of other nearby BLE-enabled devices, e.g. another SEA device. One of the main issues is power management and current flow. For this reason we added a couple of voltage regulators to the system, supplying 5V and 3.3V to the microcontroller and the BLE module respectively. The Peltier plate draws too much current (around 2A) to be controlled directly by the microcontroller’s I/O pins. We therefore built a temperature controller composed of MOSFET transistors driving an H-bridge circuit (described further in the Temperature controller section below).

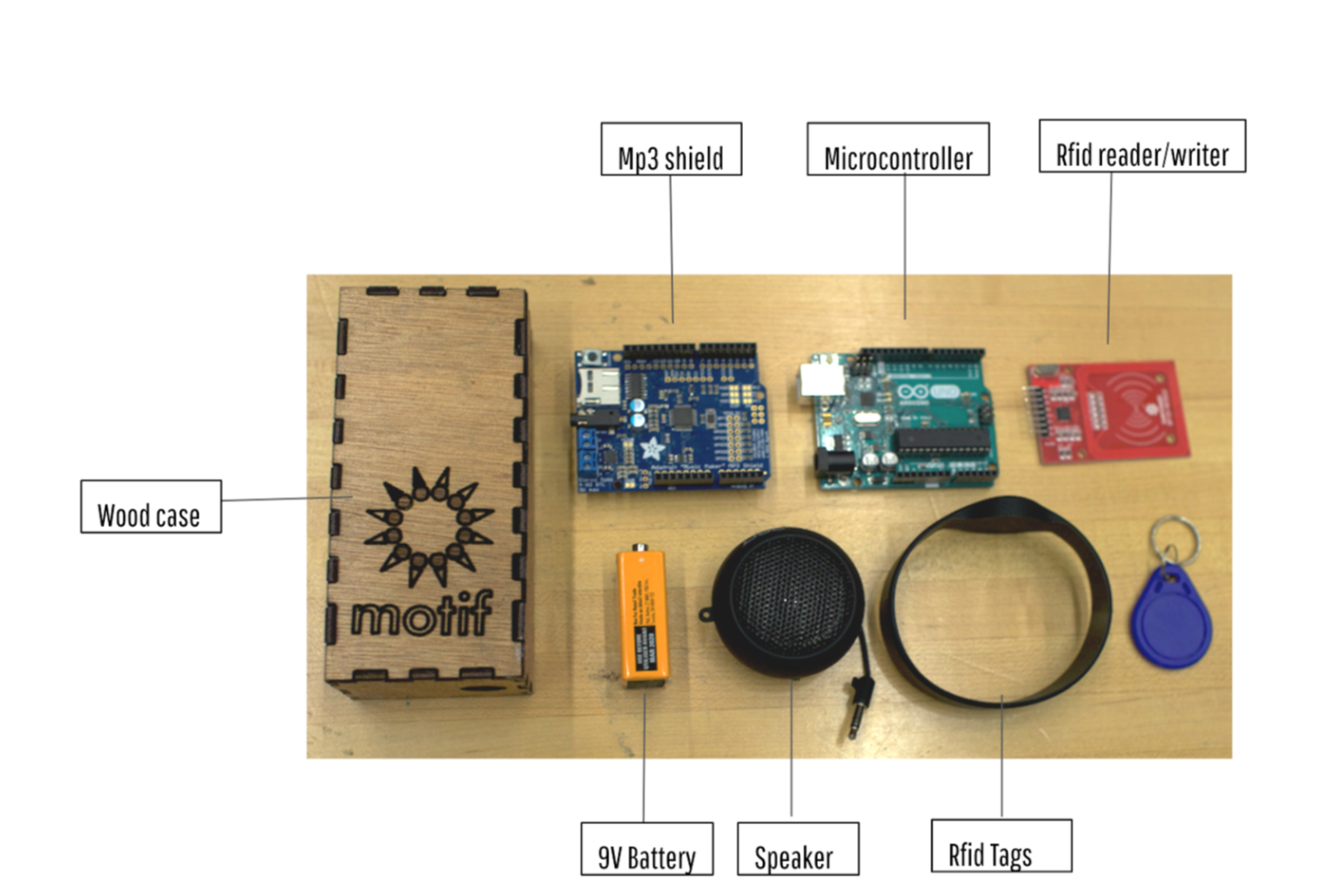

Prototype 1 – components

System’s Control Diagram

Temperature controller

The device uses a thermoelectric cooler and heater component based on the Peltier effect, which creates a heat flux between the two sides of a plate. Using an H-bridge circuit driven by MOSFET transistors, we can control the direction and magnitude of the heat flux, and therefore the device’s temperature.
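The control idea can be sketched in a few lines. This is a hedged illustration rather than the prototype's firmware: the 8-bit PWM range, the proportional gain, the existence of a temperature reading (`measured_c`) and both function names are assumptions. Reversing the current through the Peltier plate swaps which side heats, so only one side of the bridge is driven at a time.

```python
def h_bridge_command(drive: float, max_duty: int = 255) -> tuple[int, int]:
    """Map a signed drive level to (heat_duty, cool_duty) PWM values.

    drive > 0 heats, drive < 0 cools, and 0 leaves the plate unpowered.
    Only one side of the bridge is ever driven at a time.
    """
    drive = max(-1.0, min(1.0, drive))  # clamp to the valid range
    duty = round(abs(drive) * max_duty)
    if drive > 0:
        return duty, 0
    if drive < 0:
        return 0, duty
    return 0, 0


def proportional_drive(target_c: float, measured_c: float, gain: float = 0.25) -> float:
    """Proportional controller: drive grows with the temperature error.

    The gain is a hypothetical value; a real device would need tuning and
    hard limits so that the skin-facing side stays within a safe range.
    """
    return max(-1.0, min(1.0, gain * (target_c - measured_c)))
```

For example, `h_bridge_command(proportional_drive(30.0, 32.0))` asks the bridge to cool at half power, because the plate is assumed to be 2 °C above its target.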

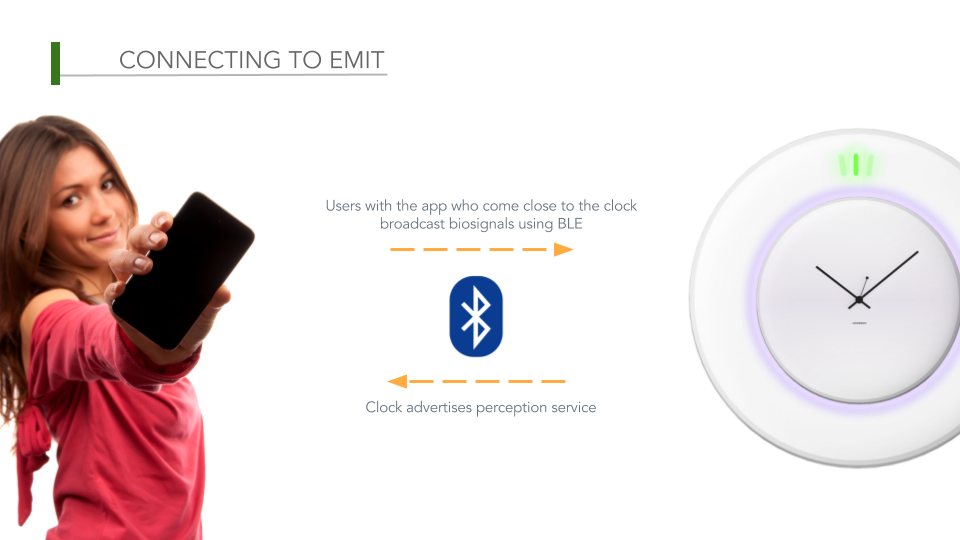

Bluetooth Low Energy (BLE) Scanning System

As explained before, the distance tracking system is based on Bluetooth Low Energy modules: inside each SEA device there is a BLE module that can work as a scanner or as a beacon (advertising BLE packets with information such as device name, type and manufacturer). From the exchange of BLE packets, the device obtains the RSSI (received signal strength indicator), expressed in dBm: the power ratio in decibels (dB) of the measured power referenced to one milliwatt.
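To make the dBm definition concrete, and to show one common way an RSSI reading can be turned into a rough distance, here is a small sketch. The log-distance path-loss model, the calibration value of -59 dBm at one metre, the path-loss exponent of 2 and the smoothing factor are all assumptions chosen for illustration; the text above does not say which model the prototype uses, and indoor RSSI is noisy enough that any such estimate is approximate.

```python
def dbm_to_milliwatts(dbm: float) -> float:
    """Invert the dBm definition: P_dBm = 10 * log10(P / 1 mW)."""
    return 10 ** (dbm / 10)


def estimate_distance_m(rssi_dbm: float, rssi_at_1m_dbm: float = -59.0,
                        path_loss_exponent: float = 2.0) -> float:
    """Log-distance path-loss estimate of range from a single RSSI reading."""
    return 10 ** ((rssi_at_1m_dbm - rssi_dbm) / (10 * path_loss_exponent))


def smooth(readings: list[float], alpha: float = 0.3) -> float:
    """Exponential moving average over RSSI samples to damp their jitter."""
    value = readings[0]
    for reading in readings[1:]:
        value = alpha * reading + (1 - alpha) * value
    return value
```

Under these assumptions, every 20 dB drop in RSSI corresponds to a tenfold increase in estimated distance, which is why smoothing several readings before converting them is usually worthwhile.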

BLE Modules communication / scanning test

Software

The Mobile Interface

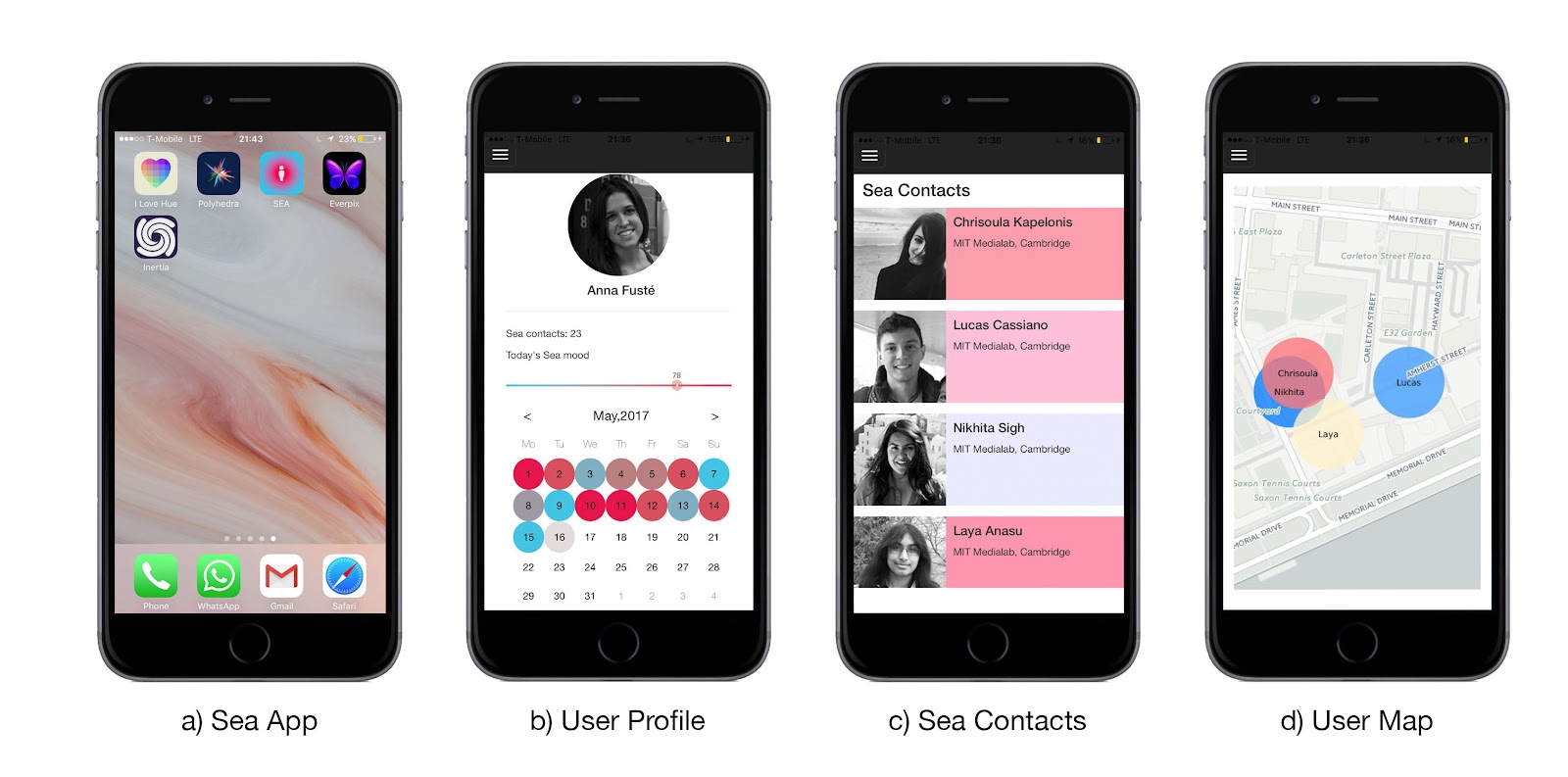

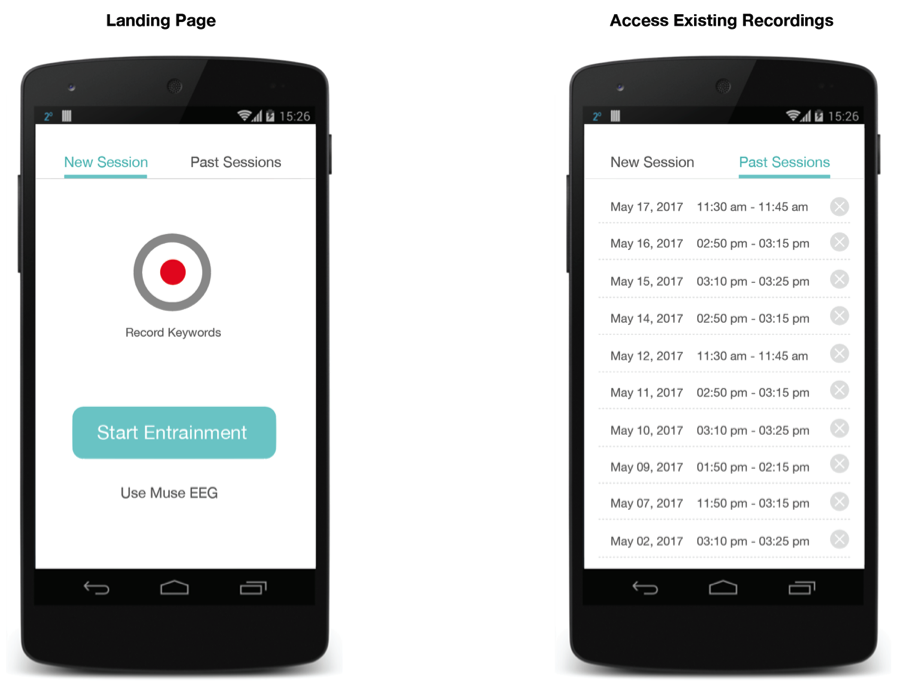

SEA is controlled by a mobile phone application where the user is able to configure and visualize their interactions with others (a). The user can check their profile, which displays the SEA level for the current day. The user is also able to see the interactions over time on a calendar (b). The calendar shows how the SEA interactions have evolved over time. A list of contacts is displayed in the Contacts section (c). All contacts are listed with a background colour that reflects the past interactions the user has had with each of them. If the interactions with a contact were not friendly, the contact is displayed in blue; if the interactions were warm, the contact is displayed in red. The colour represents the average temperature felt with each contact across all interactions up to that moment. Finally, a map of the spatial location of each contact is displayed (d). The map also displays the colour of the average temperature per contact and their influence in the space around the main user.
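The contact colouring described above amounts to averaging interaction scores and mapping the result onto a blue-to-red scale. The sketch below is an assumption-laden illustration, not the app's code: the -1 to +1 warmth scale, the white midpoint for neutral relationships, and both function names are hypothetical.

```python
def average_temperature(scores: list[float]) -> float:
    """Mean warmth over all interactions so far; 0.0 when there are none."""
    return sum(scores) / len(scores) if scores else 0.0


def contact_colour(avg: float) -> tuple[int, int, int]:
    """Return an (R, G, B) colour: blue at -1, white at 0, red at +1."""
    avg = max(-1.0, min(1.0, avg))
    # The closer to neutral, the more of the other channels remains (whiter).
    fade = round(255 * (1 - abs(avg)))
    if avg >= 0:
        return 255, fade, fade  # towards red for warm contacts
    return fade, fade, 255      # towards blue for cold contacts
```

A contact with no recorded interactions therefore appears neutral, and the colour saturates only as the average moves towards either extreme.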

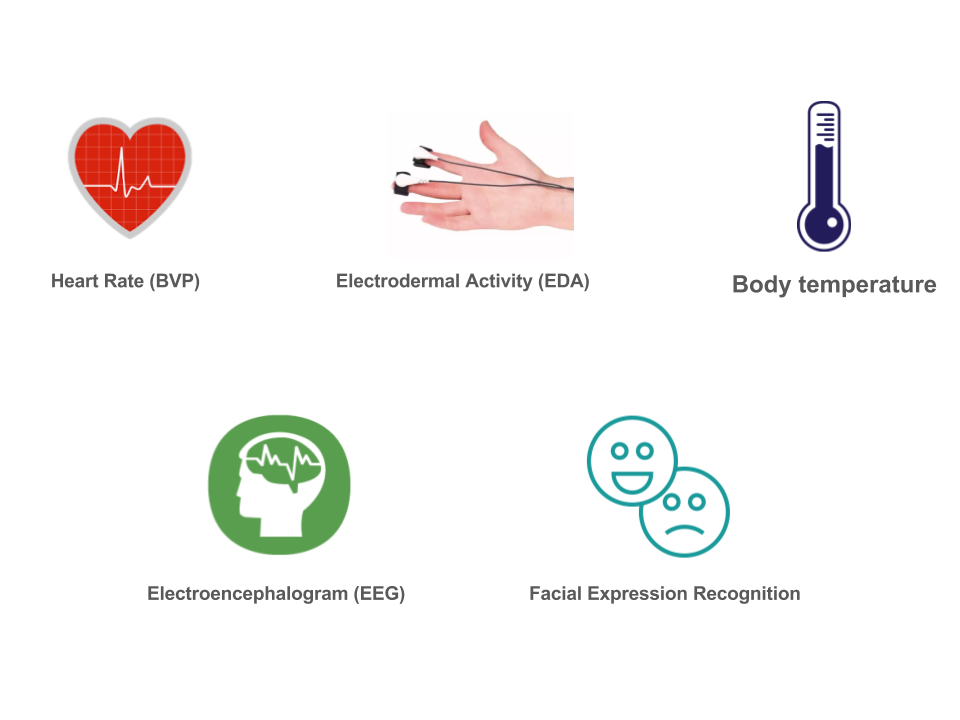

Detecting emotions

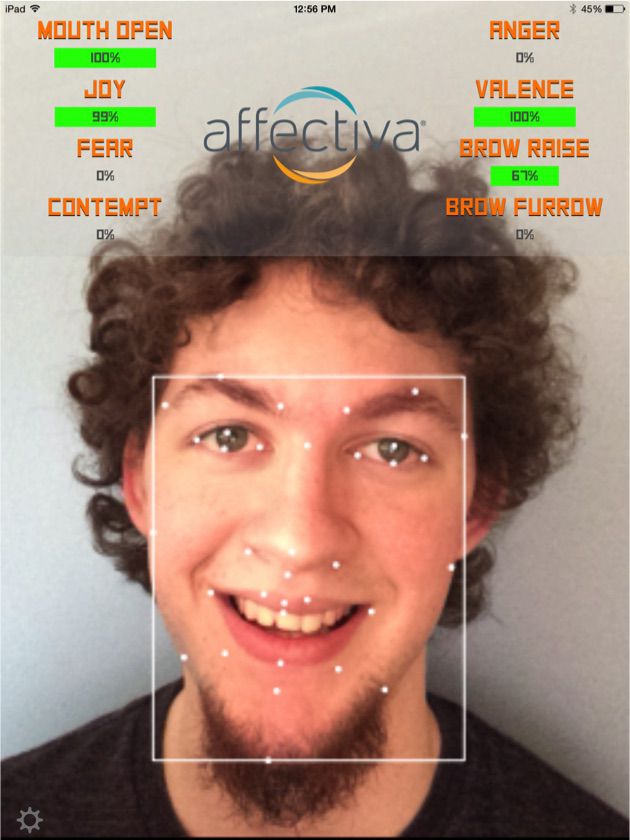

The on-the-body form factor of SEA makes it possible to detect multiple inputs as a means to understand the emotions of the user. Both speech data and physical data (i.e. skin conductance and heart rate) are meaningful metrics for detecting the emotional state of an individual in an interaction. In addition to these modalities, semantic analysis can also be used to understand the digital footprint of a relationship through messages. Affective analysis using visual inputs from a camera could also be useful, but requires the user to be visible to a camera.

For the current prototype of SEA, we explored and implemented the ability to derive a subset of features that can be utilized to build a unified model for interpreting user emotions.

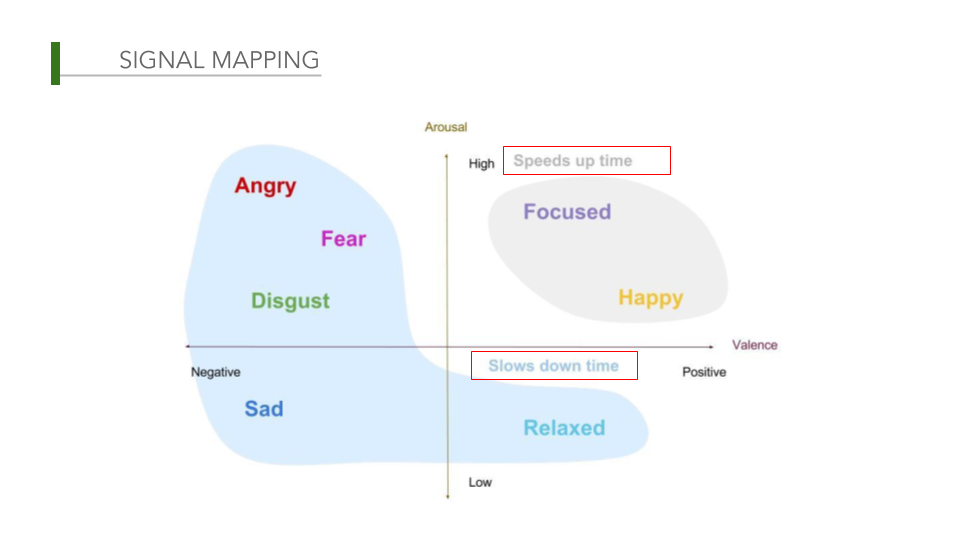

- Valence & Arousal are powerful mechanisms for mapping emotions. We utilized the Python library pyAudioAnalysis to extract values for both valence and arousal using an SVM (support vector machine) classifier [13].

- Emotional Prosody is characterized by fluctuations in pitch, loudness, speech rate and pauses in the user’s voice. We utilized OpenSmile [15] to explore how to extract these features programmatically from audio files.

- Spectrograms have recently been used with Deep Convolutional Neural Nets [14] for speech emotion recognition. We utilized the Python script paura [13] to generate and experiment with spectrograms of sounds in real time.

- Semantic Analysis can be used to process interactions over text, including messages and Facebook. We also explored classifying a snippet of text as positive or negative. Scaling this up to process whole blocks of messages could be included in a future iteration of SEA.
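To make the prosody features above more concrete, the sketch below computes two of them, loudness and pauses, from raw audio samples using only the Python standard library. It does not use or reproduce the OpenSmile or pyAudioAnalysis APIs; the function name `prosody_features`, the 25 ms frame length, and the silence threshold are assumptions chosen for illustration.

```python
import math
from typing import Dict, Sequence


def prosody_features(
    samples: Sequence[float],
    sample_rate: int,
    frame_ms: float = 25.0,           # assumed frame length
    silence_threshold: float = 0.02,  # assumed RMS level treated as a pause
) -> Dict[str, float]:
    """Compute mean loudness (RMS) and pause ratio from mono audio.

    samples: amplitudes normalised to the range [-1, 1].
    """
    frame_len = max(1, int(sample_rate * frame_ms / 1000))

    # Root-mean-square energy of each non-overlapping frame.
    energies = []
    for start in range(0, len(samples) - frame_len + 1, frame_len):
        frame = samples[start:start + frame_len]
        energies.append(math.sqrt(sum(x * x for x in frame) / frame_len))

    if not energies:
        return {"mean_loudness": 0.0, "pause_ratio": 0.0}

    # A frame counts as a pause when its energy falls below the threshold.
    pauses = sum(1 for e in energies if e < silence_threshold)
    return {
        "mean_loudness": sum(energies) / len(energies),
        "pause_ratio": pauses / len(energies),
    }


if __name__ == "__main__":
    rate = 16000
    # One second of a 220 Hz tone followed by one second of silence.
    tone = [0.5 * math.sin(2 * math.pi * 220 * n / rate) for n in range(rate)]
    print(prosody_features(tone + [0.0] * rate, rate))
```

Pitch and speech rate need more involved signal processing, which is one reason to rely on a dedicated toolkit for the full feature set.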

______________________________________________________

Future Work & Conclusion

SEA’s technology and platform can be utilized in alternative ways relating to health, mindfulness, self-awareness, and empathic connections.

Future versions of SEA could allow a person to share their “feelings” with others simply by exchanging their “data” modules on their necklaces. This experience of being able to share “feelings” will potentially help establish more empathic connections between people by letting people understand how others might have interpreted the same situation or conversation. There is also the potential to experience the dynamic shifts of relationships.

Although SEA is currently in the form of a necklace, future versions can be located on other parts of the body: we can imagine a wrist form factor, for example.

The current version of SEA provides only one output on the body: temperature. Although this was a very conscious decision, there is the potential to add haptic vibration to the device. As the wearable is located on the chest, we could capture the wearer’s heartbeat during conversations, events, and physical activities, and play it back as haptic vibrations while someone else is going through a similar experience. This would allow for a connected experience between people, helping them understand what others might have gone through.

Future work could also expand the modes of communication we are able to analyze as inputs on our platform. Currently, we have shown the ability to extract features from speech and some basic text. It would be powerful to expand this to text messages, social media messages, potentially even emails, and other forms of communication. In the digital age we are living in, interpersonal interactions extend beyond person-to-person conversations and enter the realm of social media and digital messages. These digital interactions can have just as much of an effect on people, so we think it is important to capture them as well and integrate the information with SEA. Additionally, it would be meaningful to capture heart rate variability and skin conductance of the user as a means to create a more robust model of emotional experience. For SEA to serve as a cohesive platform, work is needed to unify the various input and output modalities. A personalized machine learning model that is able to do this would be an important component of future development.

Alternative Usage Scenarios

Because SEA reveals how a person feels about an interaction, it could be used as an interventional awareness device in many different scenarios. Imagine a hospital setting with a caregiver and a patient. Patients might not always be able to say what is on their mind or how they feel about the way they are being treated by the caregiver, nurse or doctor. SEA would allow caregivers to be more mindful of how they are treating their patients by giving them a sense of when their words or actions affect their patients in a negative way.

We can also imagine SEA being used in the context of psychology. Psychologists and psychiatrists could use SEA in their training to understand when their clients feel better or worse based on the words and advice they give. This could extend to relationship counselors as well.

In general, SEA could be used as a tool for people in professions that require them to speak and interact with many others: physical trainers, guidance counselors, and teachers, to name a few.

Ultimately SEA is a wearable that aims to provide people with a better understanding of how they feel in their interpersonal interactions.

Conclusion

SEA is a spatially aware wearable that gives people information about their interactions with others by using changes in temperature. A user’s voice during interactions with others is analyzed, interpreted as a positive or negative emotional state, and conveyed through very subtle changes in temperature. Although currently a wearable + app that examines voice and vocal tone, SEA can become part of a larger platform that gathers emotional response and state from digital communication tools as well (Facebook, text, Skype, etc). This platform for emotional state and feeling awareness can be used in many different settings to help people be mindful of their actions, their behaviors and the things they say. SEA can be used in a variety of ways, from personal self-awareness to professional caregiver/patient settings. SEA is the main component of a platform that will aim to help people be more mindful of how they are in relationships with others. Ultimately, SEA hopes to nurture healthier interactions between people.

______________________________________________________

References

[1] Avtgis, Theodore A., Daniel V. West, and Traci L. Anderson. “Relationship stages: An inductive analysis identifying cognitive, affective, and behavioral dimensions of Knapp’s relational stages model.” Communication Research Reports 15.3 (1998): 280-287.

[2] Chen-Bo Zhong and Geoffrey J. Leonardelli. Cold and lonely: does social exclusion literally feel cold? Psychol Sci. 2008 Sep;19(9):838-42. doi: 10.1111/j.1467-9280.2008.02165.x.

[3] Beebe, Steven A., Susan J. Beebe, Mark V. Redmond, and Lisa Salem-Wiseman. Interpersonal Communication: Relating to Others. Don Mills, Ontario: Pearson Canada, 2018. Print.

[4] Brueckner, Sofia. “Projects | Fluid Interfaces.” Fluid Interfaces Group, MIT Media Lab. N.p., n.d. Web. 20 May 2017.

[5] Williams LE, Bargh JA. Experiencing Physical Warmth Promotes Interpersonal Warmth. Science (New York, NY). 2008;322(5901):606-607. doi:10.1126/science.1162548.

[6] Graham Wilson, Dobromir Dobrev, and Stephen A. Brewster. 2016. Hot Under the Collar: Mapping Thermal Feedback to Dimensional Models of Emotion. In Proceedings of the 2016 CHI Conference on Human Factors in Computing Systems (CHI ’16). ACM, New York, NY, USA, 4838-4849. DOI: https://doi.org/10.1145/2858036.2858205

[7] Iwasaki, K., Miyaki, T., & Rekimoto, J. (2010). AffectPhone: A Handset Device to Present User’s Emotional State with Warmth/Coolness. B-Interface.

[8] Jordan Tewell, Jon Bird, and George R. Buchanan. 2017. Heat-Nav: Using Temperature Changes as Navigation Cues. In Proceedings of the 2017 CHI Conference on Human Factors in Computing Systems (CHI ’17). ACM, New York, NY, USA, 1131-1135. DOI: https://doi.org/10.1145/3025453.3025965

[9] Nummenmaa, Lauri. “Bodily Maps of Emotions.” Proceedings of the National Academy of Sciences of the United States of America 111.2 (2014): 646-51. JSTOR. Web. 19 May 2017.

[10] Umberson, Debra, and Jennifer Karas Montez. “Social Relationships and Health: A Flashpoint for Health Policy.” Journal of health and social behavior 51.Suppl (2010): S54–S66. PMC. Web. 19 May 2017.

[11] WearableX. “Fan Jersey (X).” Let’s Xperiment. N.p., n.d. Web. 19 May 2017.

[12] Vinaya. “ALTRUIS X: FINDING STILLNESS IN THE CITY.” VINAYA. N.p., n.d. Web. 19 May 2017.

[13] Giannakopoulos, Theodoros. “pyaudioanalysis: An open-source python library for audio signal analysis.” PloS one 10.12 (2015): e0144610.

[14] Badshah, Abdul Malik, et al. “Speech Emotion Recognition from Spectrograms with Deep Convolutional Neural Network.” Platform Technology and Service (PlatCon), 2017 International Conference on. IEEE, 2017.

[15] “AudEERING | Intelligent Audio Engineering – OpenSMILE.” AudEERING | Intelligent Audio Engineering. Audeering, n.d. Web. 19 May 2017.

The rail is too steep to climb (visual materials of this gif are from YouTube)

Salvador Dali’s The Persistence of Memory (1931)

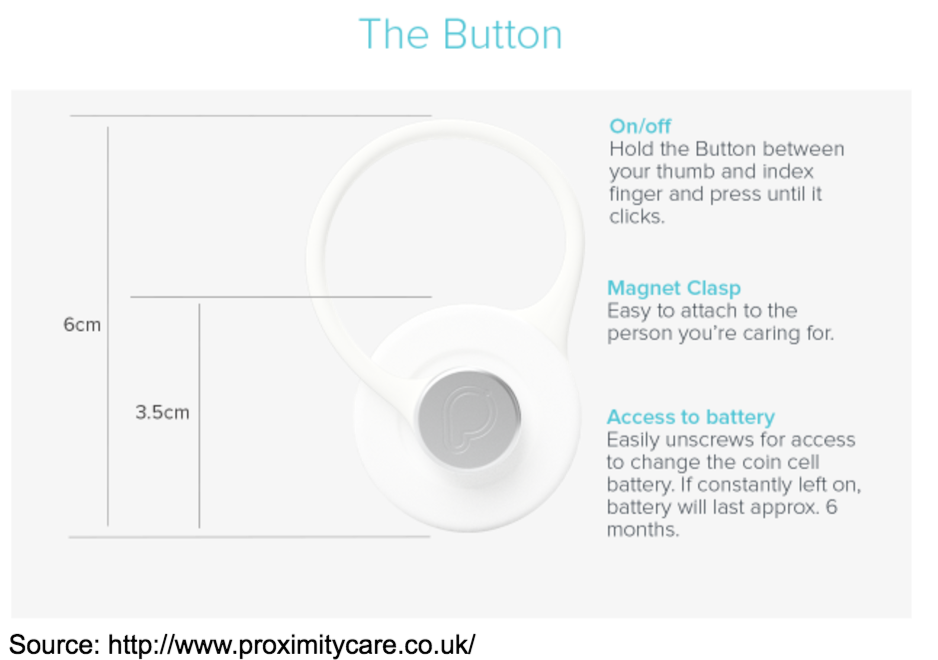

Source: http://www.proximitycare.co.uk/

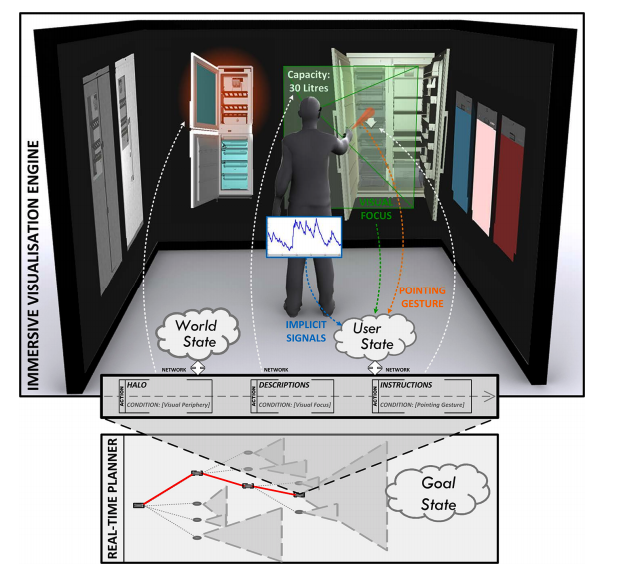

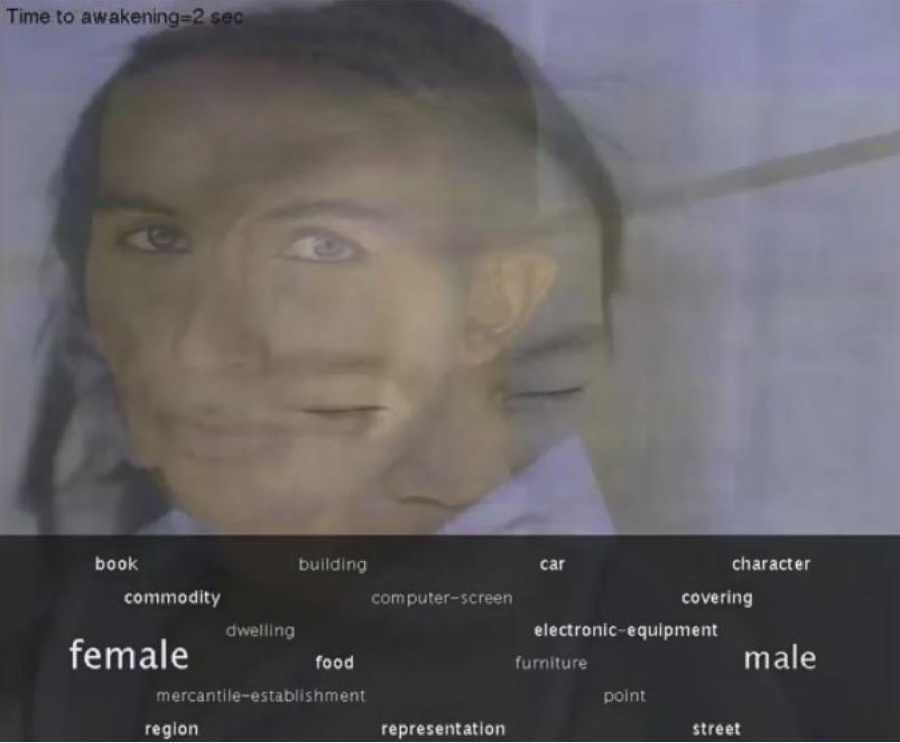

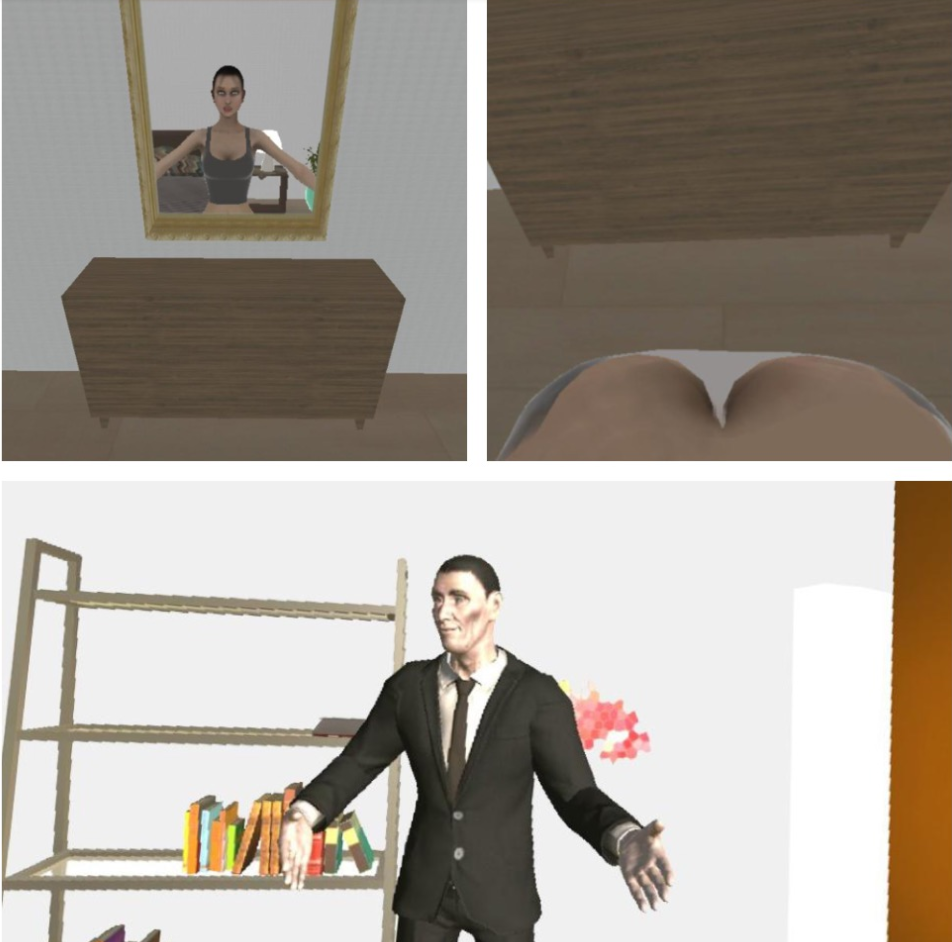

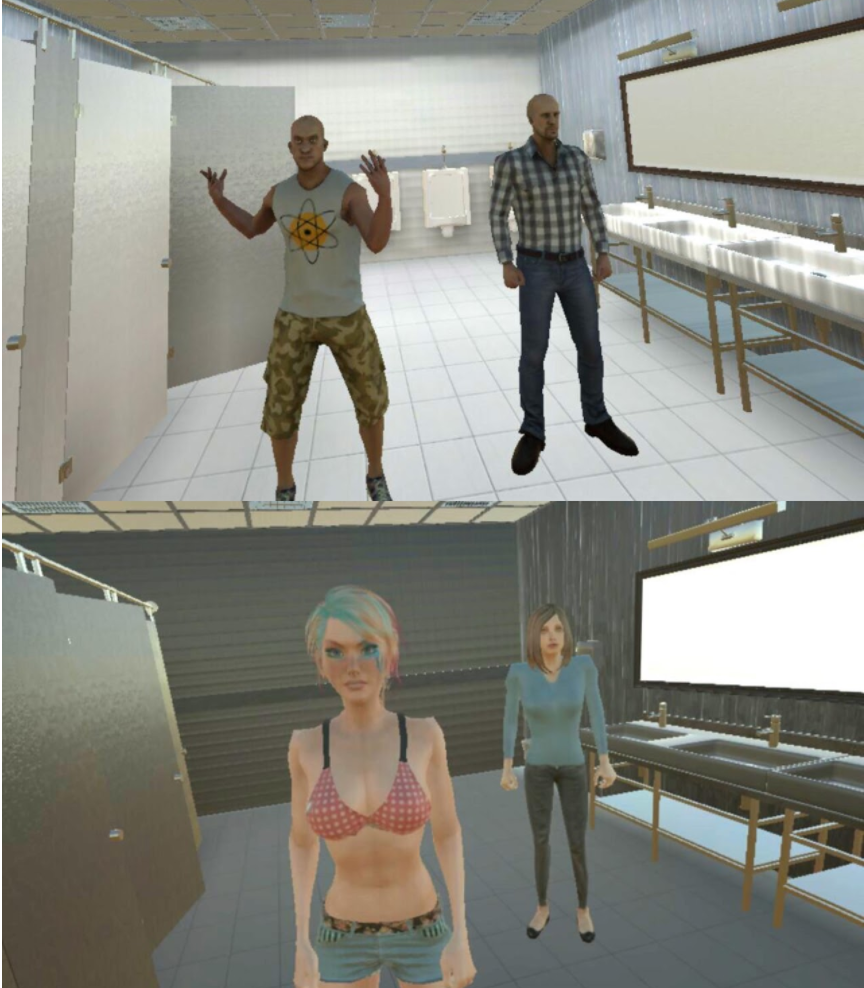

Another project, closer to our topic, is Incorporating Subliminal Perception in Synthetic Environments: an interactive visualization of a synthetic reality platform that, combined with psychophysiological recordings, enables researchers to study in real time the effects of various subliminal cues.
