Concept and Motivation

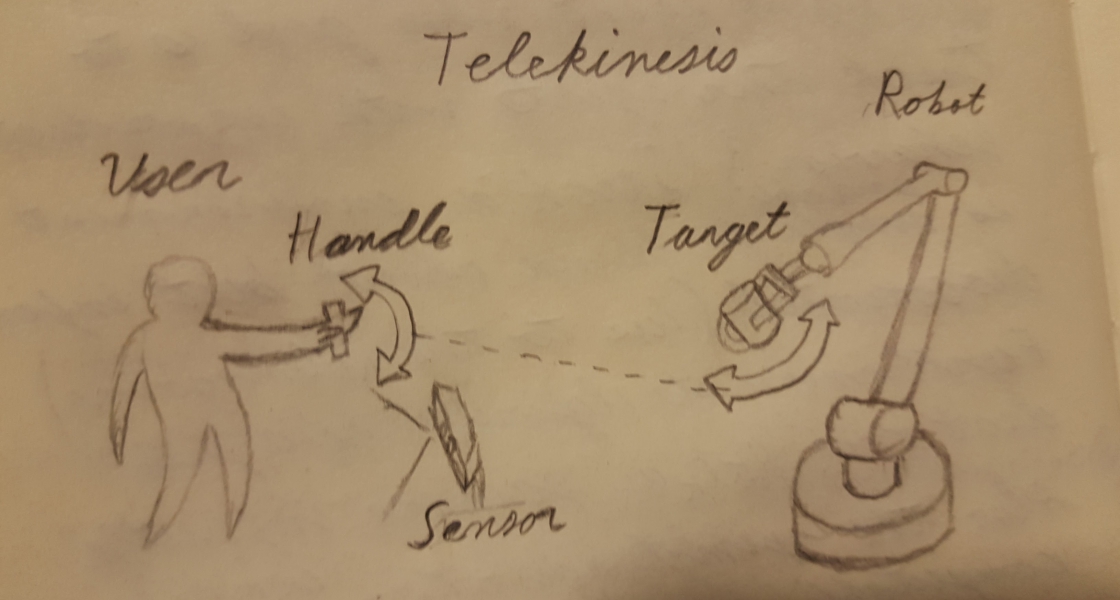

The natural way to change the pose (position and orientation) of an object in space is through direct physical manipulation, namely with our hands. However, the length of our reach limits us to manipulating nearby objects. This project aims to extend that natural ability to arbitrarily long range by transducing the manipulation through robots, which have no such locality constraint. This allows the user to naturally manipulate objects at a distance. The basic mode of interaction involves the gestural selection of a target object, which a robot picks up, and pose manipulation through a “handle” analog object. What the user does to the handle, the robot does to the target object. The result is telemanipulation, but unlike other robotic manipulation concepts, the user maintains their own reference frame. They carry out the interaction from their own perspective, as if they simply had longer arms, not as if they were teleported to whatever position the robot happens to be in. It is more like a non-psychic “telekinesis”.

Implementation

The technical implementation is rather straightforward. A gesture sensor (Microsoft Kinect) interprets the user pointing to the object they want to manipulate. Once the object is selected, pose input via the “handle” can be captured with an accurate Inertial Measurement Unit (IMU); a smartphone will suffice. The position and state of the robot do not matter in this framework, as long as the robot is close enough to the object to grab it. The job of the robot, then, is to map the pose of the handle to its own end effector, using standard inverse kinematics.
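The handle-to-target mapping can be sketched as a relative pose update: the change in the handle's pose since the moment of selection is applied to the target's pose at that moment. This is only a minimal sketch under stated assumptions. It assumes the handle pose and the target pose are already expressed in the same frame (the user's reference frame), that poses are given as a position plus a unit quaternion in (w, x, y, z) order, and that the resulting goal pose is then handed to whatever inverse kinematics solver the robot uses. The function names are hypothetical and not part of any particular library.

```python
import math


def quat_multiply(a, b):
    """Hamilton product of two quaternions given as (w, x, y, z)."""
    aw, ax, ay, az = a
    bw, bx, by, bz = b
    return (
        aw * bw - ax * bx - ay * by - az * bz,
        aw * bx + ax * bw + ay * bz - az * by,
        aw * by - ax * bz + ay * bw + az * bx,
        aw * bz + ax * by - ay * bx + az * bw,
    )


def quat_conjugate(q):
    """Conjugate of a quaternion; equals the inverse for unit quaternions."""
    w, x, y, z = q
    return (w, -x, -y, -z)


def map_handle_to_target(handle_start, handle_now, target_start):
    """Apply the handle's motion since selection to the target object.

    Each pose is a (position, quaternion) pair in a shared frame
    (assumption: the user's reference frame). Returns the goal pose
    for the target, to be passed on to the robot's IK solver.
    """
    (hp0, hq0), (hp, hq), (tp0, tq0) = handle_start, handle_now, target_start
    # Translation of the handle since selection.
    dp = tuple(now - start for now, start in zip(hp, hp0))
    # Rotation of the handle since selection, expressed in the shared frame.
    dq = quat_multiply(hq, quat_conjugate(hq0))
    goal_position = tuple(t + d for t, d in zip(tp0, dp))
    goal_orientation = quat_multiply(dq, tq0)
    return goal_position, goal_orientation


if __name__ == "__main__":
    s = math.sqrt(0.5)  # sin and cos of 45 degrees
    identity = (1.0, 0.0, 0.0, 0.0)
    # Handle moves 10 cm along x and turns 90 degrees about z.
    goal = map_handle_to_target(
        ((0.0, 0.0, 0.0), identity),
        ((0.1, 0.0, 0.0), (s, 0.0, 0.0, s)),
        ((2.0, 0.0, 1.0), identity),
    )
    print(goal)
```

Because only the change in handle pose is used, the absolute position of the handle and of the robot drop out of the mapping, which is what lets the user keep their own reference frame.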

Extension

By transducing physical manipulation through robotic technology, many more capabilities are enabled. The robot can handle objects that would be too large or heavy to manipulate by hand, yet because the interface is consistent, their manipulation is just as intuitive to the user. The robot can also have a much larger workspace than the human arm, so the user’s translations can be scaled up beyond what the user could do in person. There is no requirement that the robot be stationary, or even grounded (e.g., quadcopters), so the user can select any target object within their line of sight and manipulate it without moving, as long as the robot(s) can get to the object. The inverse is also true: because the robot can be more precise than human hands, the user can use the same interface to scale the manipulation down into micro-manipulation, with fine control of translation on very small scales. The objects can still be large, however: you can position a shipping container with millimeter precision as easily as you could a marble.
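The scaling idea can be illustrated with a single gain applied to the handle's translation. This is a hedged sketch, not a description of an existing implementation: the function name and the `scale` parameter are assumptions made for illustration, positions are taken to be (x, y, z) tuples in meters in a shared frame, and orientation is left unscaled.

```python
def scale_translation(handle_start, handle_now, target_start, scale):
    """Return the target's goal position for a scaled handle translation.

    scale > 1 amplifies the user's motion (large workspaces);
    scale < 1 attenuates it (micro-manipulation).
    All positions are (x, y, z) tuples in meters (assumed units).
    """
    delta = [now - start for now, start in zip(handle_now, handle_start)]
    return tuple(t + scale * d for t, d in zip(target_start, delta))


if __name__ == "__main__":
    start, now = (0.0, 0.0, 0.0), (0.2, 0.0, 0.0)  # 20 cm hand motion along x
    # Scaled up: the same hand motion moves the target 10 m.
    print(scale_translation(start, now, (5.0, 0.0, 0.0), 50.0))
    # Scaled down: the same hand motion moves the target 2 mm.
    print(scale_translation(start, now, (5.0, 0.0, 0.0), 0.01))
```

The same 20 cm hand motion serves both cases; only the gain changes, which is why the interface stays consistent across very different object sizes and distances.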