Introduction:

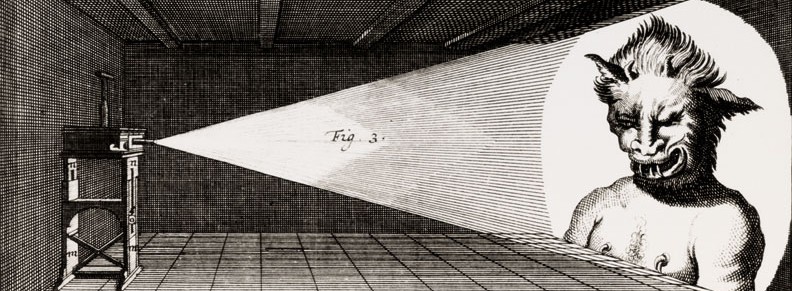

The Magic Mirror is a spin-off of my work on facial animation from last week. This time, however, I made a massive jump from purely digital art, modeling, and animation to a full-on software application for live facial tracking. The project is inspired by the sassy character in DreamWorks’ Shrek, but any image with a clear picture of a face in it could be used with the facial recognition algorithm I’m using.

Resources:

The open-source GitHub repository for this project can be found at: https://github.com/emvanb/MagicMirror

This work builds upon Kyle McDonald’s ofxFaceTracker and Arturo Castro’s FaceSubstitution libraries.

My Experiences:

This project was a pretty extreme stretch for me, especially since, when I proposed the idea, I didn’t yet know that facial recognition and capture was such a niche field, with software that costs up to $10,000. As a naive but passionate CS undergrad at MIT, I should have recognized that I was a little in over my head.

When I started this project, I figured it would be difficult, but not infeasible. After many hours of researching on the web and asking companies for free trials of their software, I realized that this mirror might be closer to infeasible than difficult.

I had captured body movements before with the Xbox Kinect for another Media Lab project called BodyPaint:

https://www.youtube.com/watch?v=Em9zXr30LlE

It was a mistake to assume that capturing a face would be similar to capturing the motion of an arm or foot, since facial recognition is an art of detail. Instead of using the Xbox Kinect, focusing on detailed images from a camera seemed like my best shot.

I eventually gave up on looking for pre-made applications online, as none of them were free and all of them required some elaborate setup. It was at this point that I stumbled across an openFrameworks add-on that I could use for facial tracking, along with a project that was being used to map images onto people’s faces over a camera feed. It wasn’t quite what I had in mind, but it was close enough. I dug around in the code to turn off every possibility of the camera showing a face, and changed the shader so that the mask didn’t attempt to blend its color with my skin. I also increased the strength of the mask’s color and turned the background black. I then fixed the modeling of my mask and took a picture of it so that I could import it into the software. After numerous tests I deemed it the best it could be for presentation, and started training my voice actor to use the app. For some reason, the software was better at picking up my smaller, rounder face than his longer one, but after some careful calibration we worked out which light source, distance to the screen, and head movements were needed in order to not lose tracking.
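To give a rough idea of the display changes described above, here is a minimal Python sketch of the per-pixel logic. It is an illustration only, not the actual openFrameworks shader from the project: the function name `composite_pixel`, the `strength` and `show_camera` parameters, and the 1.5 boost are all made up for this example.

```python
from typing import Tuple

Pixel = Tuple[float, float, float]  # RGB channels, each in the range 0.0 to 1.0

BLACK: Pixel = (0.0, 0.0, 0.0)


def composite_pixel(mask_rgb: Pixel, mask_alpha: float, camera_rgb: Pixel,
                    strength: float = 1.5, show_camera: bool = False) -> Pixel:
    """Return the color shown on screen for a single pixel.

    `strength` and `show_camera` are hypothetical knobs for this sketch,
    not settings taken from the original code.
    """
    # With the camera feed hidden, everything behind the mask is black.
    background = camera_rgb if show_camera else BLACK
    # Boost the mask color, clamping so no channel exceeds 1.0.
    boosted = tuple(min(1.0, c * strength) for c in mask_rgb)
    # Plain alpha compositing of the boosted mask over the background.
    # There is no color matching against the performer's skin tone.
    return tuple(mask_alpha * m + (1.0 - mask_alpha) * b
                 for m, b in zip(boosted, background))
```

Under these assumptions, a pixel inside the mask shows only the boosted mask color, and a pixel outside the mask is black no matter what the camera sees, which is what keeps the performer invisible behind the mirror.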

For the monitor display I went to Blick to buy fancy gold paper and cardboard to frame a large 48″ HD screen with a half-silvered mirror on top (most of the frame was packing tape and duct tape on the back, but hey, it worked). I then draped some black cloth over the bottom front of the mirror so the audience couldn’t see the setup behind it. That setup consisted of two carefully dimmed and positioned lamps, a laptop connected to the HD TV with an HDMI cable, and my friend Yousef Alowayed, who served as the voice behind the mirror. An extremely special thank you to Yousef. He’s an undergrad just like me, and he took time out of his extremely busy finals week to help me with my grad class project and to listen to my attempts at directing him on how to move his face and where to sit.

Conclusion:

Overall, this project was (in my opinion) a pretty big success. I was able to modify a program that was very difficult for me to understand so that it roughly approximates what some insanely expensive industry software does. It was a very valuable learning experience, and quite the hilarious portfolio piece. I would like to thank my professors and mentors, Dan Novy and V. Michael Bove, for helping and encouraging me throughout this project, especially since they probably already knew it was going to be a long shot. Special thanks also go to Anthony Kawecki and Anthony Occidentale for helping me throughout this class and for listening to me rant nonstop for the past several weeks about how my mesh was detaching from my rigging, Maya was failing, and facial tracking was glitching.

Note: The second movie referenced in the above video is Finding Dory, to be released by Pixar, not Disney.