Sound of Shape

Caroline Rozendo, Irmandy Wicaksono, Jaleesa Trapp, Ring Runzhou Ye, Ashris Choundhury

Concept

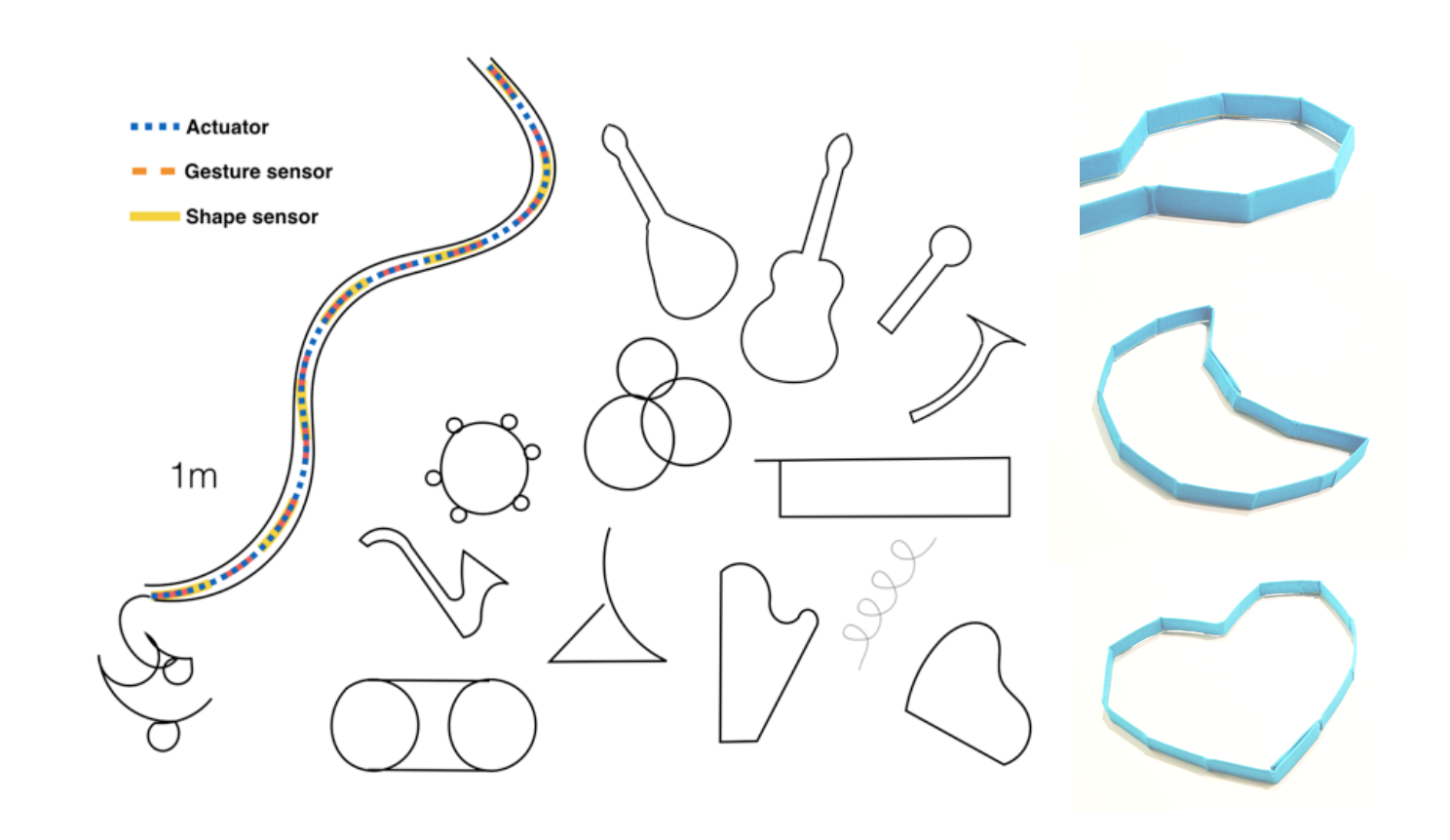

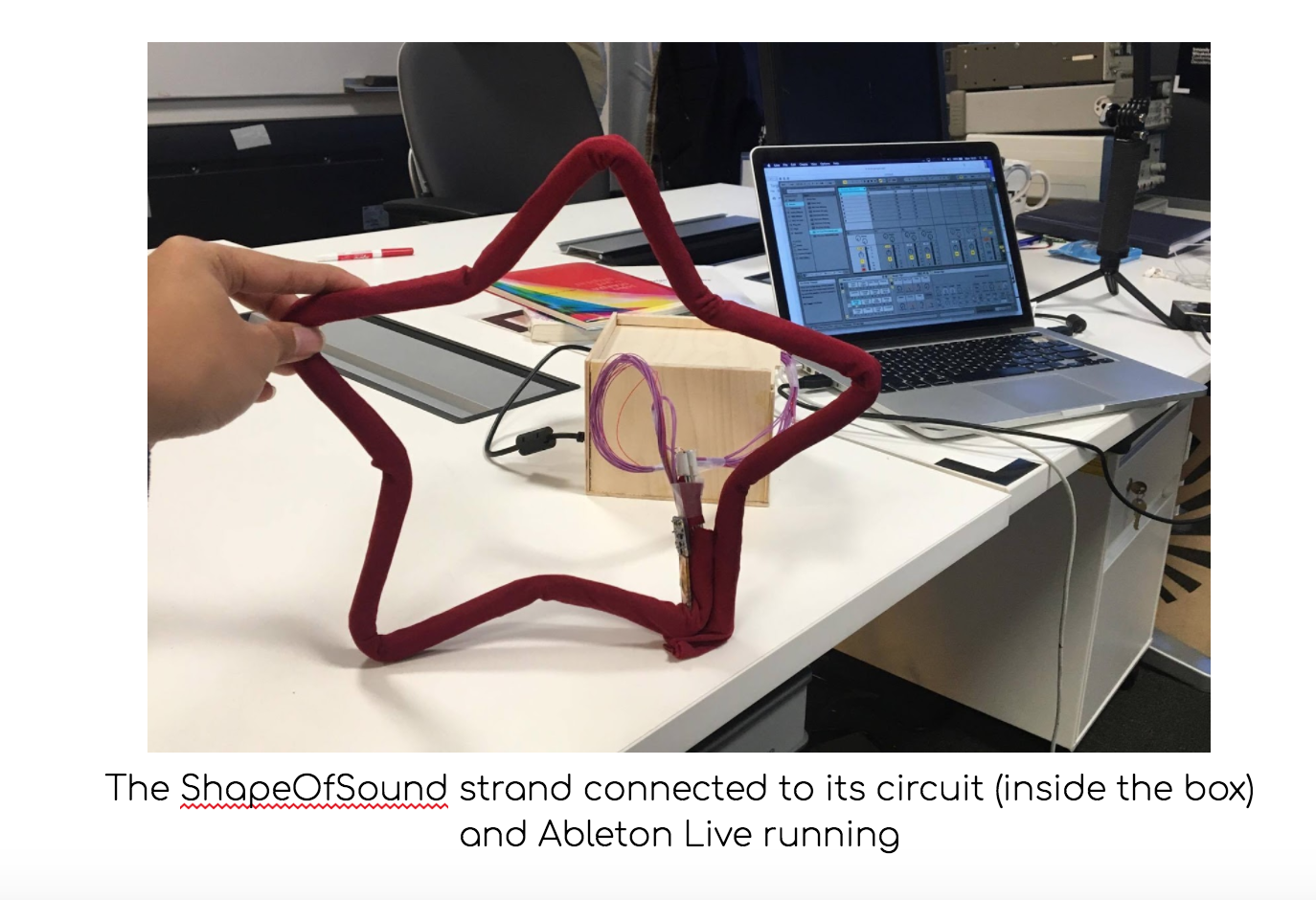

A strand-like object that lets users explore sound by creating shapes. Guided by its signifiers, users can model both iconic instruments and their own ideas of playable things.

Inspiration

“Music is not limited to the world of sound. There exists a music of the visual world.” – Oskar Fischinger, 1951

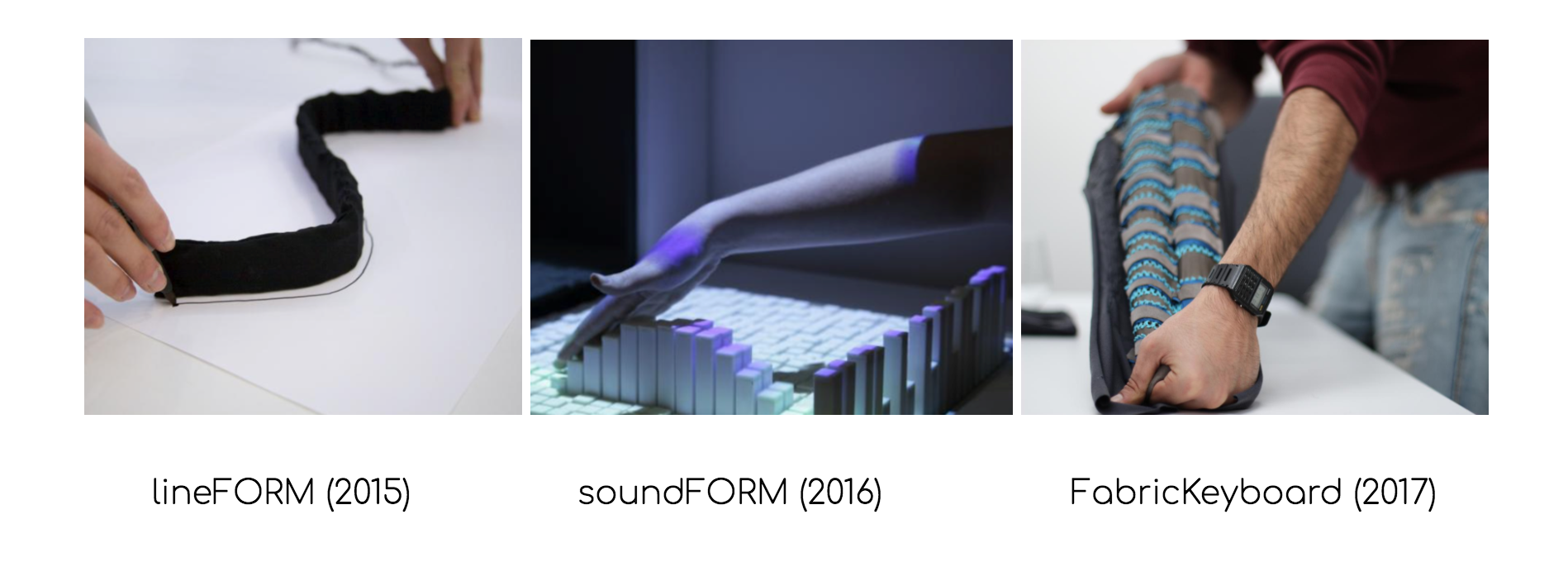

The quote by Oskar Fischinger motivated us to explore the music of the visual world and to extend that exploration to the physical world. Projects we looked to for inspiration include lineFORM, which inspired the shape of our product; soundFORM, which led us to manipulate sound through shapes; and the Fabric Keyboard, which inspired us to build a multi-functional, deformable musical interface for sound production and manipulation.

Affordances

The long, bendable strand allows the user to form it into various shapes. Because the instrument responds to proximity, taps, and shakes, the user immediately discovers its musical behaviour. As the user forms different shapes, the instrument produces different sounds, inviting them to explore the relationship between shape and sound.

Constraints

We embedded physical sensors inside the strand instead of using computer vision, because vision sensing would limit the interaction area and reduce the instrument's expressiveness and functionality for users. We want users to have freedom in shaping and interacting with this tangible musical interface. However, since each sensor in our initial prototype is wired to the circuitry, the instrument's mobility is currently limited to 50 cm.

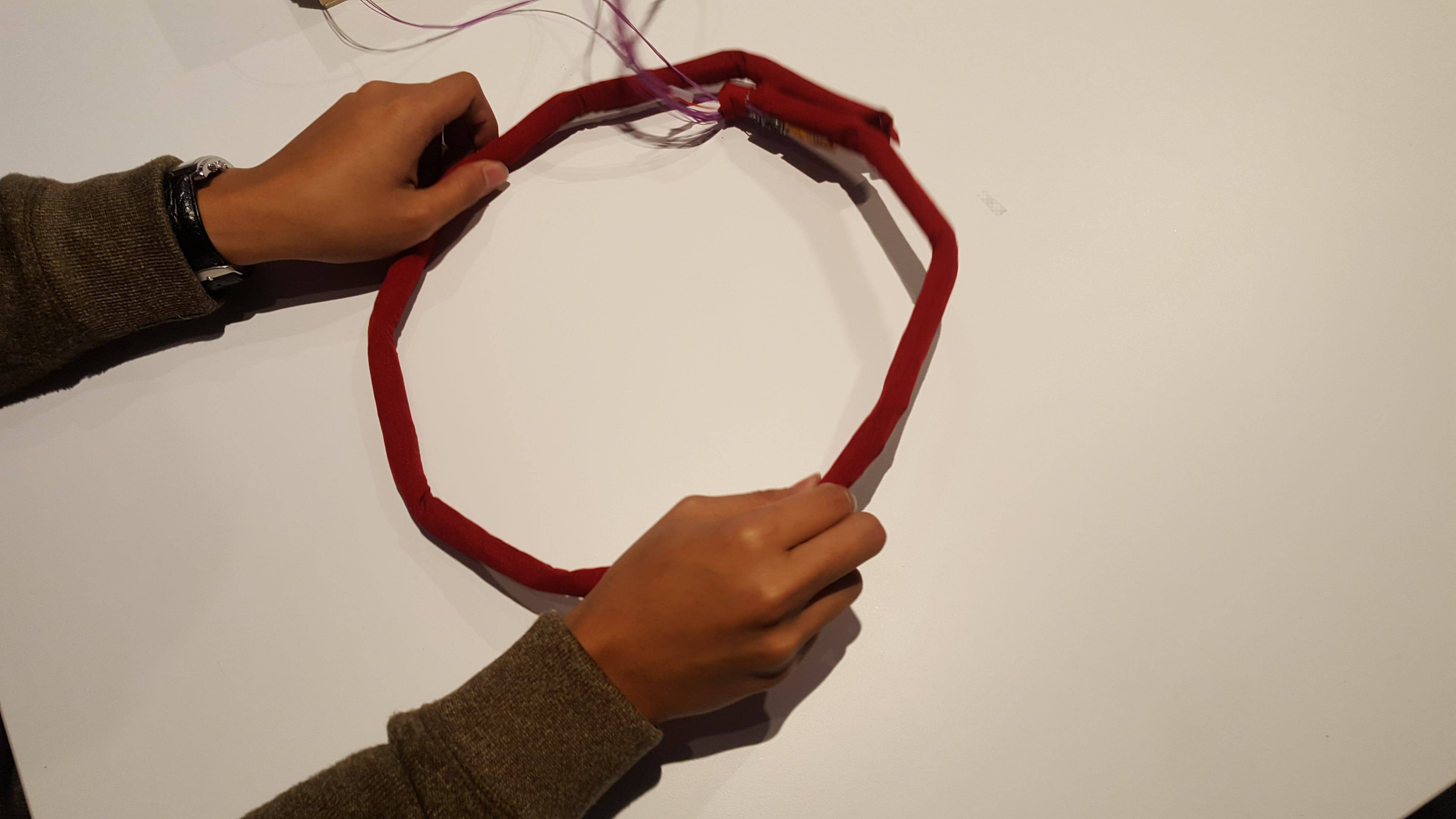

Since an array of uni-directional bend sensors is used on both sides of the strip, this instrument is constrained to 2D shape-making. In addition, the number of hinges, currently ten, limits the variety of shapes that can be formed.
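Because each flex sensor responds to bending in only one direction, a pair of sensors on opposite faces is needed to recover a signed bend angle at each hinge, and chaining those angles traces a planar outline. The sketch below illustrates this under stated assumptions: readings are already converted to degrees, the signed bend is the front reading minus the back reading, and the segments have a hypothetical equal length `SEGMENT_CM`. None of these names or values come from the project firmware.

```python
import math

SEGMENT_CM = 5.0  # hypothetical segment length between hinges (not from the report)


def signed_angles(front_deg, back_deg):
    """Combine paired uni-directional readings into one signed angle per hinge.

    Assumes one reading (in degrees) per hinge on each face, with only the
    sensor on the inner side of a bend reporting a non-zero value.
    """
    if len(front_deg) != len(back_deg):
        raise ValueError("expected one front and one back reading per hinge")
    return [f - b for f, b in zip(front_deg, back_deg)]


def outline(angles_deg, segment=SEGMENT_CM):
    """Chain segments in the plane; each hinge turns the heading by its angle."""
    x, y, heading = 0.0, 0.0, 0.0
    points = [(x, y)]
    # First segment, before the first hinge.
    x += segment * math.cos(heading)
    y += segment * math.sin(heading)
    points.append((x, y))
    for a in angles_deg:
        heading += math.radians(a)
        x += segment * math.cos(heading)
        y += segment * math.sin(heading)
        points.append((x, y))
    return points


def is_closed(points, tolerance=1.0):
    """True when the two ends meet, e.g. after the magnets snap together."""
    (x0, y0), (x1, y1) = points[0], points[-1]
    return math.hypot(x1 - x0, y1 - y0) <= tolerance
```

For example, `outline([90, 90, 90])` traces a square whose last point returns to the origin, so `is_closed` reports a completed 2D shape. Since every turn happens about the same axis, the model can only describe planar shapes, which mirrors the 2D constraint above.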

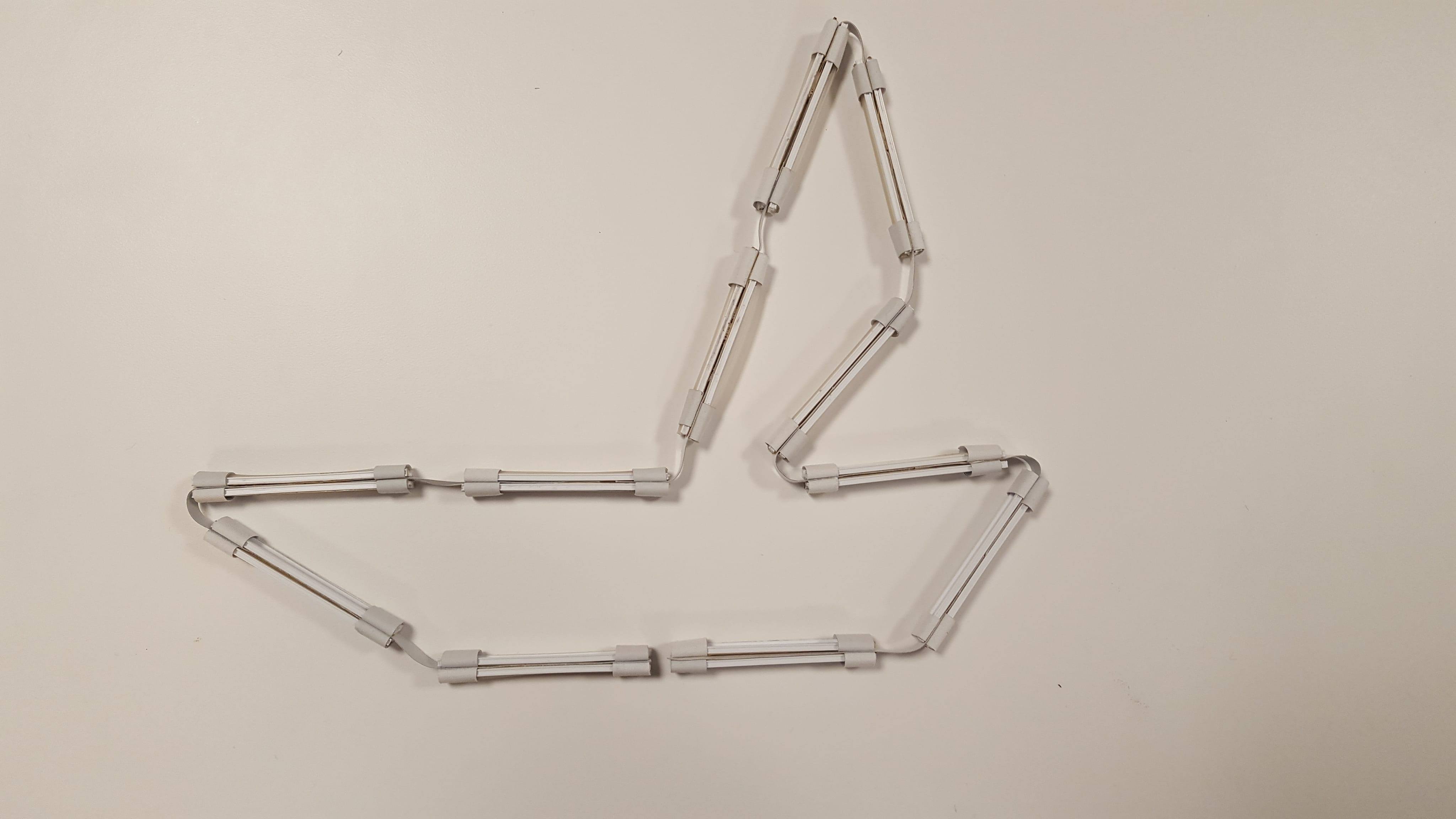

Implementation

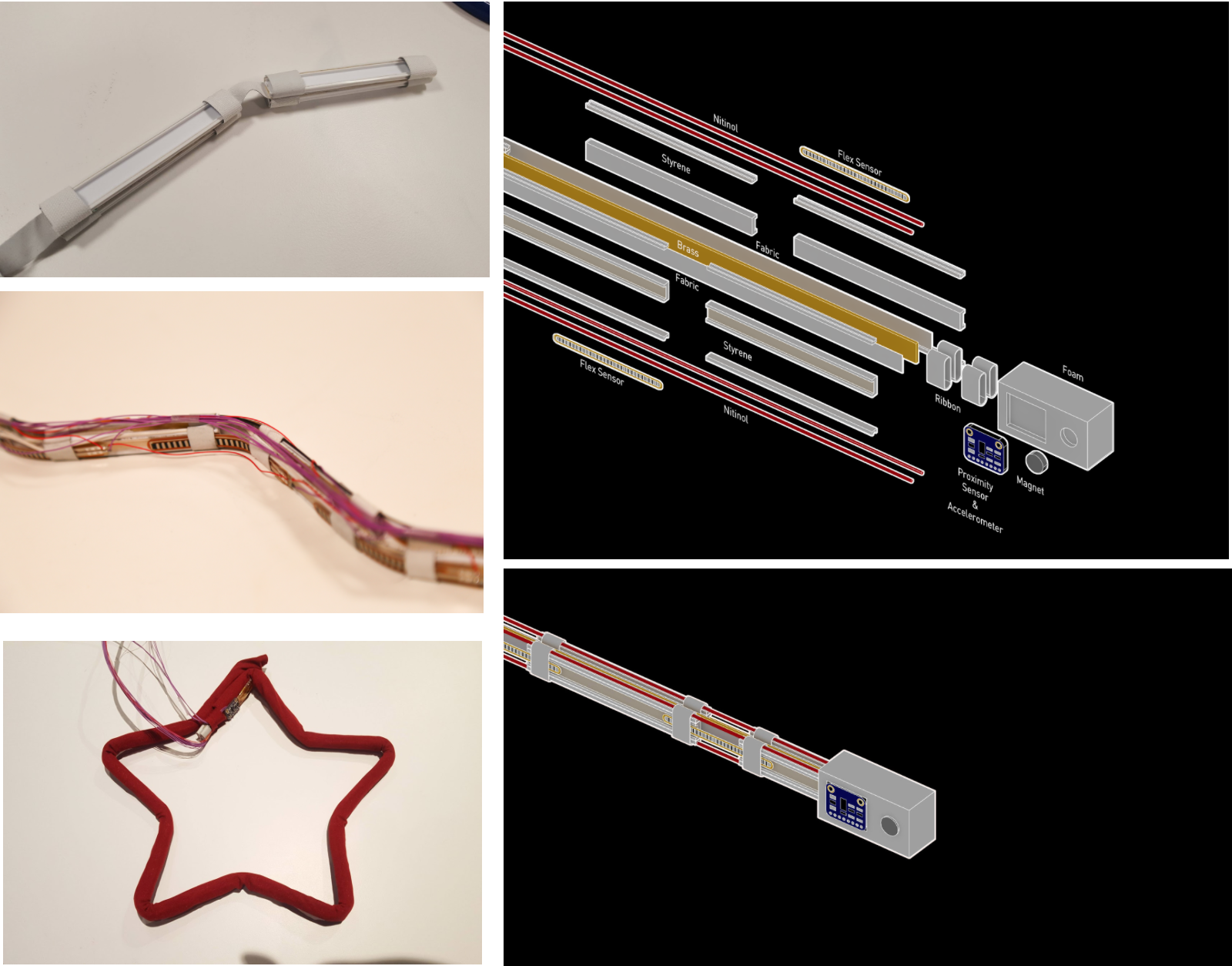

As illustrated in the figure above, the strand consists of multiple layers encapsulated in soft fabric. A long brass strip serves as the base layer and holds whatever shape it is bent into. An array of short styrene blocks on each side of the strip provides structural support for the 20 flex sensors and their wiring, as well as side grooves for the Nitinol wires (two on each side). At the end of the strip, an accelerometer, a proximity sensor, and a magnet are attached and protected by foam.

The flex sensors enable the instrument to detect its own shape; the accelerometer lets it detect taps and shakes (e.g., tambourine, triangle, drum); and the proximity sensor allows touch sensing to be explored around the inside of the 2D shape (e.g., piano, harp). Magnets let the two ends of the strand snap together to complete the 2D shape.
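Distinguishing a tap from a shake can be as simple as thresholding how far the acceleration magnitude deviates from the 1 g of gravity at rest. The following is a minimal sketch assuming accelerometer samples in units of g and two hypothetical thresholds, `TAP_G` and `SHAKE_G`; the report does not give the actual detection method or values.

```python
import math

# Hypothetical thresholds, in g above the 1 g of gravity at rest (not from the report).
TAP_G = 0.3
SHAKE_G = 1.5


def dynamic_magnitude(ax, ay, az):
    """Deviation of the acceleration vector's length from 1 g (gravity at rest)."""
    return abs(math.sqrt(ax * ax + ay * ay + az * az) - 1.0)


def classify_window(samples):
    """Label a short window of (ax, ay, az) samples as 'shake', 'tap', or None.

    Also returns the peak deviation, which can later set the note amplitude.
    """
    peak = max((dynamic_magnitude(*s) for s in samples), default=0.0)
    if peak >= SHAKE_G:
        return "shake", peak
    if peak >= TAP_G:
        return "tap", peak
    return None, peak
```

Returning the peak alongside the label keeps the vibration strength available for scaling the note's amplitude, as the vibration mode requires.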

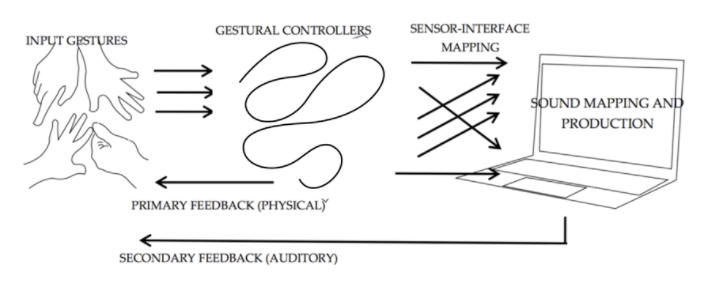

Musical Mappings

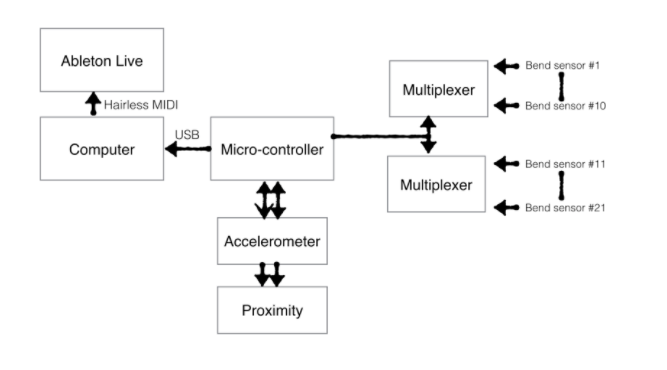

The sensor data is read continuously and, when a trigger condition is met, converted into messages that follow the MIDI protocol. Each message is populated with a status, channel, note on/off, amplitude, and expression value. In proximity mode, a note on/off is triggered when something first comes within range of the proximity sensor, and the pitch of the note is set by the discrete proximity value. In vibration mode, note on/off is triggered by the accelerometer through light vibration (a tap) or strong vibration (a shake), and the amplitude is set by the strength of the vibration.

These messages are then received either by an audio synthesis environment, such as Pure Data or Max/MSP, or by an audio sequencer such as Ableton Live or GarageBand; in this project, we used Ableton Live. The software processes each message and generates or controls a particular sound according to its mappings and settings, giving the performer audible feedback. This lets musicians, sound artists, and interaction designers not only play and explore the capabilities of this instrument, but also change its functionality and express themselves by mapping and experimenting with different sound metaphors.
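The message fields described above correspond to MIDI channel-voice messages: a status byte that combines the message type (note on `0x90`, note off `0x80`, control change `0xB0`) with the channel number, followed by two 7-bit data bytes. The sketch below assumes the expression value is sent as controller 11 (MIDI's Expression controller) and uses a hypothetical C-major scale and hypothetical full-scale ranges for the proximity and vibration mappings; the report does not state which scale or ranges the firmware uses.

```python
# Standard MIDI channel-voice status nibbles; the low nibble carries the channel (0-15).
NOTE_OFF = 0x80
NOTE_ON = 0x90
CONTROL_CHANGE = 0xB0
EXPRESSION_CC = 11  # MIDI controller number for Expression

# Hypothetical pitch set for proximity mode (C4..C5), not the project's mapping.
C_MAJOR = [60, 62, 64, 65, 67, 69, 71, 72]


def _clamp7(value):
    """Clamp to the 0-127 range of a MIDI data byte."""
    return max(0, min(127, int(value)))


def note_on(channel, note, velocity):
    return bytes([NOTE_ON | (channel & 0x0F), _clamp7(note), _clamp7(velocity)])


def note_off(channel, note):
    return bytes([NOTE_OFF | (channel & 0x0F), _clamp7(note), 0])


def expression(channel, value):
    return bytes([CONTROL_CHANGE | (channel & 0x0F), EXPRESSION_CC, _clamp7(value)])


def proximity_to_note(reading, max_reading=1023):
    """Quantize a raw proximity reading (assumed 0..max_reading) onto the scale."""
    reading = max(0, min(max_reading, reading))
    step = min(len(C_MAJOR) - 1, reading * len(C_MAJOR) // (max_reading + 1))
    return C_MAJOR[step]


def strength_to_velocity(peak_g, full_scale_g=3.0):
    """Scale vibration strength (in g) linearly onto MIDI velocity 1-127."""
    return max(1, _clamp7(127 * min(peak_g, full_scale_g) / full_scale_g))
```

For instance, a hand at mid range (`reading=512`) maps to note 67 (G4), and `note_on(0, 67, 100)` yields the three bytes `0x90 0x43 0x64`. The velocity floor of 1 avoids sending a velocity of 0, which many receivers interpret as a note off.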

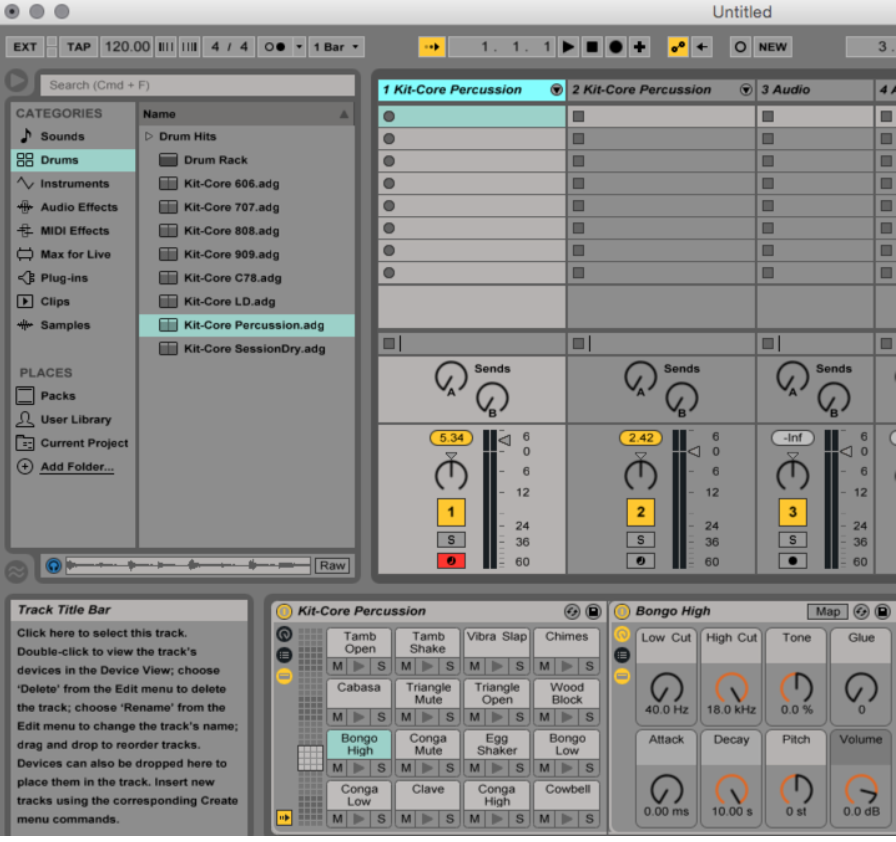

In the Ableton Live screen capture, a percussion instrument is activated. By dragging and dropping the subset or changing the shape of the strand, the user can play different types of percussion instruments, such as tambourine, vibra, cabasa, chimes, conga, and cowbell. The current setting plays a high-pitched bongo.
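Switching instruments by changing the strand's shape could be approached as template matching on the hinge angles. The sketch below uses nearest-neighbour classification against hypothetical shape templates, each tied to a hypothetical MIDI note that a percussion rack in the audio software could map to a sample. The report does not describe how shapes are recognized, so the templates, notes, and Euclidean distance metric are all assumptions.

```python
import math

# Hypothetical templates: ten signed hinge angles (degrees) per named shape,
# each paired with a hypothetical MIDI note assigned to a percussion sound.
TEMPLATES = {
    "straight": ([0] * 10, 60),
    "circle": ([36] * 10, 61),
    "triangle": ([0, 0, 120, 0, 0, 120, 0, 0, 120, 0], 62),
}


def closest_shape(angles_deg):
    """Return (name, note) of the template nearest in Euclidean distance."""
    name = min(TEMPLATES, key=lambda k: math.dist(angles_deg, TEMPLATES[k][0]))
    return name, TEMPLATES[name][1]
```

A slightly imperfect triangle such as `[0, 0, 110, 5, 0, 115, 0, 0, 125, 0]` still lands on the `"triangle"` template, so small variations in how the user bends the strand select the same instrument.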

System Design

An Arduino Due is used as the microcontroller: it reads the data from the flex sensors, proximity sensor, and accelerometer, converts it into MIDI messages, and sends these messages to Ableton Live 9 over USB serial.

Since the strand uses a large number of flex sensors (20), two multiplexers (CD74HC4067) are used, each handling one side's array of 10 flex sensors. The microcontroller addresses the same input channel on both multiplexers simultaneously and then reads the two selected sensors through two ADC pins. Since the Nitinol wires need approximately 3 A for the shape-changing effect, we built a transistor circuit that connects an external supply (a 6 V battery) and can be switched digitally by the microcontroller.
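If both multiplexers share the same four select lines, as the simultaneous addressing above suggests, each scan step yields one front and one back reading, so ten steps cover all 20 sensors. The host-side sketch below models the CD74HC4067's select-line encoding (channel number in binary on S0..S3, S0 least significant) and replaces the Arduino pin and ADC calls with a hypothetical callback, `read_adc_pair`, so it runs without hardware.

```python
def select_levels(channel):
    """Logic levels for select pins S0..S3 (S0 = least significant bit)."""
    if not 0 <= channel <= 15:
        raise ValueError("the CD74HC4067 has 16 channels (0-15)")
    return [(channel >> bit) & 1 for bit in range(4)]


def scan(read_adc_pair, sensors_per_side=10):
    """Scan both multiplexers through their shared select lines.

    read_adc_pair(levels) is a hypothetical stand-in for: drive S0..S3 to
    `levels`, let the signal settle, then read the two ADC pins.
    Returns (front, back) lists with one reading per hinge.
    """
    front, back = [], []
    for channel in range(sensors_per_side):
        a, b = read_adc_pair(select_levels(channel))
        front.append(a)
        back.append(b)
    return front, back
```

Channels 10 to 15 of each multiplexer remain free in this arrangement, which leaves room for the additional joints mentioned under Future Work.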

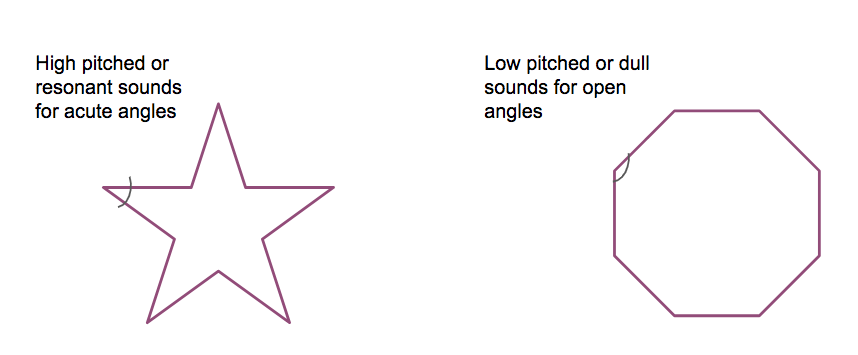

Sound-Shape Association

The mapping of shapes to different instruments draws on a number of articles and research experiments suggesting that the way we connect auditory and visual-tactile perception is not completely arbitrary.

In the “Kiki-Bouba” experiment, first conducted by Köhler in 1929 and replicated several times since, people were asked to match a spiky, angular shape and a round, smooth shape to a pair of nonsense words (“kiki” and “bouba” in later versions). There was a strong preference to associate “bouba” with the round, smooth shape and “kiki” with the angular shape. In a 2001 repetition of Köhler’s experiment by Vilayanur S. Ramachandran and Edward Hubbard, at least 95% of the subjects showed this preference.

In an article published by Nature in 2016, Chen, Huang, Woods and Spence go further, testing the assumption that this correspondence is universal and analyzing how frequency and amplitude are associated with shapes.

By letting users shape the object in search of the pitch and sound they want to play with, we draw on this characteristic of perception to make the interface more intuitive.
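One way to carry the round-versus-angular distinction into the sensor data is a simple angularity score over the hinge angles: the share of the total bend concentrated at the sharpest hinge. The sketch below is hypothetical. The report does not define such a score, and the 0.25 threshold and the "bright"/"soft" labels are illustrative assumptions rather than the project's mapping.

```python
def angularity(angles_deg):
    """Share of the total bend concentrated at the single sharpest hinge.

    Evenly spread bends (round, "bouba"-like) score low; a few sharp corners
    ("kiki"-like) score high. Returns 0.0 for a straight strand.
    """
    total = sum(abs(a) for a in angles_deg)
    if total == 0:
        return 0.0
    return max(abs(a) for a in angles_deg) / total


def timbre_for(angles_deg, threshold=0.25):
    """Hypothetical mapping: angular shapes -> bright, round shapes -> soft."""
    return "bright" if angularity(angles_deg) >= threshold else "soft"
```

A circle built from ten equal 36-degree bends scores 0.1 and maps to "soft", while a triangle with three 120-degree corners scores about 0.33 and maps to "bright".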

Future Work

In future work, we would like to extend the strand with more joints to allow more possible shapes. We are also interested in integrating more sensors to expand its functionality as a musical instrument. We will also explore the use of shape-memory alloy in the instrument to add a shape-changing feature, which would let the instrument shape itself automatically, help users reach their final or iconic shape, or, even more excitingly, adapt its shape and learn its mapping based on a given sound. We also plan to improve usability by making the instrument wireless, so that users can fully express themselves and explore the relationship between sound and shape. Finally, we would like to collaborate with musicians and sound artists to demonstrate the capabilities of this novel deformable instrument through musical performance or composition.

Process Pictures

Video

https://youtu.be/TEZTWOAdeP4
