AffIE: Affective Interactive Environment, Randy Rockinson, MAS630


Implementation

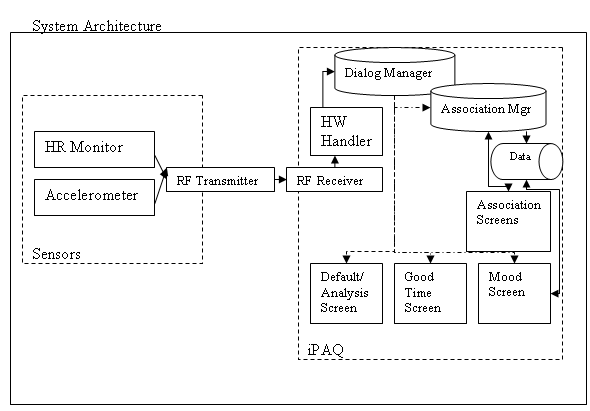

Let's start with an overview of the system.

System Overview

The following diagram shows the high-level architecture of my system.

The sequence is quite simple: the HR (heart rate) monitor and the accelerometer transmit to the PDA over an RF link. The PDA processes this data, and the Dialog Manager displays the appropriate screen based on timing and events. The Association Manager handles the device's word association feature.
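The PDA-side processing is not described in detail. As a minimal sketch, assuming each RF packet has already been decoded into either a heart-rate reading or a 3-axis accelerometer sample, the PDA could keep the latest heart rate plus a short window of acceleration magnitudes, and flag the user as walking when the variance of that window exceeds a threshold. The class `SensorState`, the window length, and the variance threshold are hypothetical placeholders, not values from the original system.

```python
import math
from collections import deque
from statistics import pvariance


class SensorState:
    """Hypothetical PDA-side state fed by decoded RF packets."""

    def __init__(self, window=32, walk_var_threshold=0.05):
        # Assumed values: window length in samples, variance threshold in g^2.
        self.heart_rate = None
        self.magnitudes = deque(maxlen=window)
        self.walk_var_threshold = walk_var_threshold

    def on_heart_rate(self, bpm):
        # Keep only the most recent heart-rate reading.
        self.heart_rate = bpm

    def on_accel(self, x, y, z):
        # Store the magnitude so the sensor's orientation does not matter.
        self.magnitudes.append(math.sqrt(x * x + y * y + z * z))

    def is_walking(self):
        # With too few samples, assume the user is not walking.
        if len(self.magnitudes) < 2:
            return False
        # Walking causes repeated swings in magnitude, hence higher variance.
        return pvariance(list(self.magnitudes)) > self.walk_var_threshold
```

For example, feeding four identical samples `(0.0, 0.0, 1.0)` into `SensorState(window=4)` gives zero variance, so `is_walking()` returns `False`; a real threshold would have to be tuned on recorded data.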

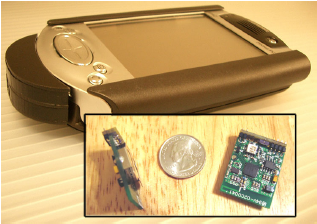

Hardware: The following are images and brief descriptions of the hardware used:

These three components provide the personal environment for my experiment.

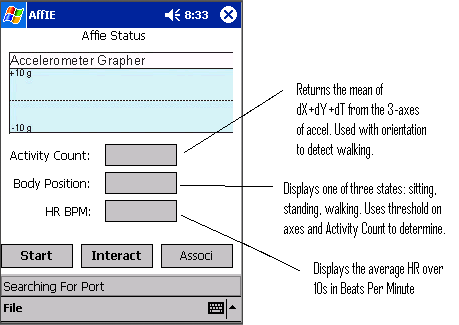

Dialog: The dialog was implemented on the iPAQ in C#. Its logic is very simple. In the rest state, the dialog displays a diagnostic screen, which provides buttons for demo purposes.

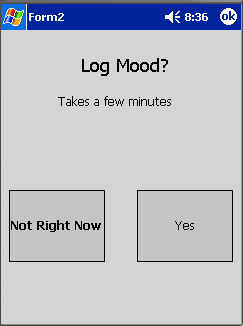

From this screen, a change in heart rate of more than 10 BPM while the user is not walking switches the dialog to its annotation mode. To reduce the burden on the user, this can happen at most twice an hour. The next screen is a courtesy screen that lets the user choose whether to be interrupted. It is labeled Good Time Screen in the system architecture diagram and looks as follows:

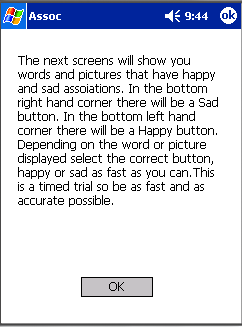
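The trigger rule for the annotation mode can be sketched as a small class. The text does not say what the 10 BPM change is measured against or in which direction, so this sketch assumes a comparison in either direction against a baseline supplied by the caller (for example, a recent average), and enforces the twice-an-hour limit with a sliding one-hour window of past prompt times. The class `AnnotationTrigger` and its parameter names are hypothetical.

```python
from collections import deque


class AnnotationTrigger:
    """Hypothetical sketch of the annotation-mode trigger."""

    def __init__(self, bpm_delta=10, max_prompts=2, window_s=3600):
        self.bpm_delta = bpm_delta      # change must exceed this many BPM
        self.max_prompts = max_prompts  # prompts allowed per window
        self.window_s = window_s        # window length in seconds (one hour)
        self.prompt_times = deque()     # timestamps of recent prompts

    def should_prompt(self, now_s, heart_rate, baseline, walking):
        # Forget prompts that have left the sliding one-hour window.
        while self.prompt_times and now_s - self.prompt_times[0] >= self.window_s:
            self.prompt_times.popleft()
        # Assumption: a change in either direction counts.
        changed = abs(heart_rate - baseline) > self.bpm_delta
        if not changed or walking:
            return False
        if len(self.prompt_times) >= self.max_prompts:
            return False
        # Record this prompt so it counts against the hourly limit.
        self.prompt_times.append(now_s)
        return True
```

A short usage trace:

```python
t = AnnotationTrigger()
t.should_prompt(0, 85, 70, walking=False)     # True: +15 BPM, not walking
t.should_prompt(60, 85, 70, walking=True)     # False: user is walking
t.should_prompt(120, 90, 70, walking=False)   # True: second prompt this hour
t.should_prompt(180, 90, 70, walking=False)   # False: hourly limit reached
t.should_prompt(3600, 90, 70, walking=False)  # True: the first prompt aged out
```

A sliding window is one reading of "twice an hour"; a fixed clock-hour counter would be an equally plausible alternative.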

Selecting No returns the user to the default screen; otherwise, the valence measurement begins with the word/picture association dialogs. The instructions are as follows:

The interface looks like this:

The user goes through three sets (one word, one picture, and one mixed) of ten associations each. The first two trials are discarded as practice, and only the third trial is scored. Its score is the central tendency of the response times for the positive and negative items, with the congruent association time weighted positively when the answer is correct and the incongruent association time weighted positively when the answer is incorrect. Sample words include:

"laughter", "horrible", "glorious", "awful", "love", "joy", "painful", "terrible", "wonderful", "nasty"

Sample pictures include:

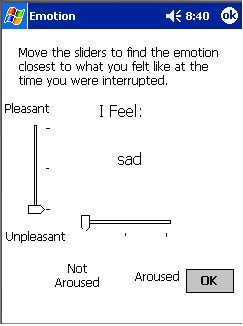
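The scoring description is brief, so the following is only a simplified sketch of latency-based scoring in the style of implicit association tests, not a reproduction of the author's correctness weighting. It assumes each trial records the item's valence (+1 for a positive word or picture, -1 for a negative one), the response time in milliseconds, and whether the answer was correct. It ignores the two practice sets, keeps only the correct trials from the third set, and uses the median as the central tendency. The `Trial` type and the `valence_score` function are hypothetical names.

```python
from dataclasses import dataclass
from statistics import median


@dataclass
class Trial:
    valence: int   # +1 for a positive item, -1 for a negative item
    rt_ms: float   # response time in milliseconds
    correct: bool  # whether the association was answered correctly


def valence_score(sets):
    """Score only the third set; the first two sets are practice."""
    if len(sets) != 3:
        raise ValueError("expected three sets: word, picture, mixed")
    # Simplification: incorrect answers are dropped instead of weighted.
    scored = [t for t in sets[2] if t.correct]
    pos = [t.rt_ms for t in scored if t.valence > 0]
    neg = [t.rt_ms for t in scored if t.valence < 0]
    if not pos or not neg:
        raise ValueError("need correct positive and negative trials")
    # Positive result: negative items took longer than positive ones.
    return median(neg) - median(pos)
```

For instance, if the correct positive trials in the mixed set took 600 and 700 ms and the correct negative trials took 800 and 900 ms, the score is 850 - 650 = 200 ms.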

After the three sets of associations, the user can validate the hypothesis by choosing the valence and arousal that correspond to their mood. The screen looks as follows in two different states:

The emotions are drawn from Russell's Circumplex model. The full 3×3 array is as follows:

Note that because the dialog is triggered only by changes in arousal, my hypothesis is that I will capture only the medium- and high-arousal states, not the low-arousal ones. Also, the textual emotion labels assigned to positions in this model are not fixed: two different people may place "angry" at different points on the continuum. The model is therefore imperfect, but it serves the purpose here. In the future, I should make a more careful mapping.
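The hypothesis about which cells of the 3×3 array can be reached can be made concrete by indexing the grid by valence and arousal level. This sketch deliberately omits the emotion labels, since the original array is not reproduced in the text; the level names and the helpers `grid_cells` and `reachable_cells` are hypothetical.

```python
from itertools import product

# Assumed names for the three levels on each axis.
VALENCE = ("negative", "neutral", "positive")
AROUSAL = ("low", "medium", "high")


def grid_cells():
    # Every (valence, arousal) pair in the 3x3 circumplex grid.
    return list(product(VALENCE, AROUSAL))


def reachable_cells():
    # Hypothesis: an arousal-triggered prompt catches only medium or high arousal.
    return [(v, a) for v, a in grid_cells() if a != "low"]
```

Under this hypothesis, six of the nine cells (every valence level paired with medium or high arousal) should appear in the validation responses, and the three low-arousal cells should not.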
